Checking and measuring software artifacts

Analytical quality assurance offers a cost- and resource-saving way to check software artifacts - for example, requirements, UML diagrams, source code, and test cases - according to predefined rules and to measure them according to criteria such as complexity, quality, and quantity. By using analytical quality assurance, errors can thus be found and corrected at an early stage.

Approaches to software quality assurance

The following five approaches can be used to ensure the quality of software (cf. 1):

- Psychological

- constructive

- analytical

- empirical

- retrospective

The psychological approach includes all measures to influence people to work better in terms of quality, i.e. measures such as rewards, punishments, promotions and appeals to work ethics. The constructive approach includes all measures to improve working conditions - such as the provision of better work equipment - tools, languages, techniques, processes, etc. - and the provision of the most modern workplaces possible - premises, communications infrastructure, tools, social network and the like.

The analytical approach includes all measures for controlling those software artifacts that are produced by the software workers - checking and measuring the documents, models, source code texts and test cases - with the aim of identifying quality deficiencies and deviations from the target and drawing the attention of the authors of the artifacts to them.

The empirical approach includes all testing measures such as unit testing, integration testing, system testing and acceptance testing. Here the behavior of the finished software is observed in a test environment under controlled conditions, in order to recognize deviations from the target behavior.

To the subsequent approach all measures belong, which serve to increase the quality of the software artifacts after their completion subsequently - measures such as Re-Engineering, Refactoring and Re-Implementation. Contrary to the removal of individual lack and errors by the developer of the software in the context of the development, it concerns here purposeful actions, related to a whole system or a subsystem with the goal of increasing the quality of that system.

In this context, it is assumed that the degree of quality improvement can be measured.In this paper, we address the third, the analytical approach. The other four approaches are equally important and should be pursued in parallel with this one, but each of them contains enough material for a book of its own.

The difference between defects and errors

Before going into the analytical measures, it is first necessary to distinguish between the two terms "deficiencies" and "defects" (Deficiencies and Defects, cf. 2). A defect is a violation of a quality rule, a deviation from the norm. The rule could be that code modules should not be nested more than three times or that each input parameter should be checked for plausibility.

A defect will not necessarily affect the behavior of a software product. There are already defects that may very well lead to an error, e.g. if exception handling or safety checks are missing in the code or if use cases are not fully specified. However, most types of defects will only affect the maintenance and further development of the software, e.g. if the code modules are too tightly coupled or if arbitrary data names are used. Any software artifact is subject to the laws of software evolution. In order to retain their value and continue to be useful, they must be constantly developed. This further development incurs costs. If you want to minimize these costs, you must ensure that the software becomes and remains capable of further development. The two goals of analytical quality assurance follow from this:

- Avoid behavioural errors

- Reduce development costs

Errors are - in contrast to defects - problems with the behavior of the software. That is, the software behaves differently than expected. Either the software terminates or it produces incorrect results. Such misbehavior can only be detected when the software is executed. That is why testing is called the empirical approach to quality assurance. The software is executed to empirically examine its behavior. This always involves a comparison. The actual behavior is compared with the target behavior and any deviation is first considered as an error.

Errors are caused by deficiencies in the code or in the design - so-called defects. However, it is also possible that the target specification or expectation is incorrect. In this case, it is not the code that is defective, but the target or expectation. In any case, there is a discrepancy between the target and the actual and this must be eliminated. The purpose of empirical quality assurance is to uncover such discrepancies, also with regard to the performance and resilience of the software. Testing is unavoidable, but is a topic in itself and will not be discussed further here.

Analytical quality assurance = testing + measuring

In theory, analytical quality assurance is a broad, almost limitless field. In practice, it can be reduced to a few automatic checks. Software usually consists of four types of objects on four semantic levels:

- Requirements documents at the specification level

- Design models (UML/ER) at the design level

- Source code at the code level

- Test cases at the test level

Requirements documents and source code are both texts. Behind the UML models are structured data in the form of XML documents, in which the design elements and their relationships are recorded. The UML diagrams are mapped from the XML texts and vice versa. Test cases are also converted into texts, namely test scripts that are interpreted or compiled to generate test data and validate test results. Initially, however, the test cases are usually stored in tables with rows and columns containing the test case attributes.

Analytical quality assurance is about testing and measuring these four types of text. The various testing and measurement measures are summarized here under the generic term software audit. In the past, they were performed manually under names such as "design reviews", "code inspections", and "test audits" (cf. 3). The author Sneed was himself responsible for the inspection of structure diagrams and chill programs in the Siemens EWSD project (Electronic Dialing System Digital) in the late 1970s. It took him about half a day to inspect just one module. The code inspection alone dragged on for more than a year. Today - in the age of agile development - no one can afford that. Analytical quality assurance, like empirical quality assurance or testing, must be automated. The test and measurement objects are retrieved from the configuration management system and immediately fed to the appropriate tool (see Figure 1).

Review and measurement of requirements documents

Requirements documents are checked and measured with a tool for automatic natural language analysis. The texts must be marked beforehand either with XML tags or with keywords. In order to keep the document easier to write for the end user, keywords are recommended, because it is better to include those words or tags in the text right from the beginning, where they can also serve as way markers. An example of such keywords in German is the list in Table 1.

| Type | Designation |

|---|---|

ACT |

Actor |

CASE |

Use Case |

INPT |

Enter |

MASK |

GUI |

OBJT |

GO |

OUTP |

Output |

PATH |

Main path |

POST |

Postcondition |

PRE |

Precondition |

PROC |

Processed |

REFS |

Implements |

USES |

Used |

REQU |

FUNC-REQ |

REQU |

NF-REQ |

RULE |

GR |

TRIG |

Trigger |

These keywords - Keywords in Context - are used to identify the text elements. In addition to the individual requirements, business rules, business objects, actors, interfaces, interfaces and use cases are also identified. A semiformal requirements text should first document the project-wide elements such as business objects and business rules. Then come the project-specific requirements, functional and non-functional. This is followed by the system actors, system interfaces and interface patterns. Only at the end come the use cases as a bridge to the system design. Thus, the use cases can refer to the requirements they fulfill, the business rules they implement, the objects they process, the interfaces they serve, and the actors they are triggered by.

Case study "Calendar function

The following example serves to describe a simple requirement specification of a calendar function.

Functional requirements

FUNC-REQ-01(weekday determination): The user enters an eight-digit date with year, month and day and gets back the weekday.FUNC-REQ-02(Multilingual): The day of the week can be returned in German, French or Italian.FUNC-REQ-03(Alignment): The text of the day of the week can be aligned left or right.FUNC-REQ-04(Error handling): If it is not possible to convert the date to a weekday, question marks "??????" should appear in the response field.

Non-functional requirements

NF-REQ-01(Antwortzeit): Die Antwortzeit bei der Kundenabfrage sollte <= 1 Sekunde und die Antwortzeit bei Kundenaufträgen <= 3 Sekunden sein.NF-REQ-02(Load capacity): The service must be able to process at least 500 orders per hour without loss of performance.NF-REQ-03Availability: The service must be available 24 hours a day, seven days a week, at least 95 percent of the time.

Business properties

GO-01: CalendarGO-02: DatesGO-03: Weekdays

Business rain

GR-01: (implausible date handling): If a date is implausible, the day of the week is filled with "?".GR-02: Calendar starting date: The calendar assumes that January 1, 1901 was a Tuesday.GR-03: Language Codes: The following language codes apply in the company: German = "1" , French = "2" , Italian = "3", English = "4".

Use case

The text analysis tool checks if all mandatory properties and references are present and if they are consistent, e.g.:

- Use case does not meet any requirement.

- Use case refers to undefined requirement.

- Requirement is not met by any use case.

- Business rule is not implemented.

- Business object is not referenced.

In addition, the text analyzer checks whether all requirement sentences are formulated correctly. Chris Rupp and her colleagues at Sophist GmbH have defined rules or test specifications for formulating requirement sentences - the so-called "Sophist rules" (cf. 4). According to these rules, for example, each requirement should be clearly identifiable, assignable and interpretable. Each request sentence should have subject, object, and predicate, and should avoid nominalizations (i.e., the grouping of multiple actions into one substantival verb). Representative of these rule violations include:

- Actions are passively formulated and not active.

- A state refers to multiple objects, not just one.

- An abstract state is not uniquely defined.

- A statement implies an implicit assumption.

The SoftAudit tool can check compliance with such rules sentence by sentence. Finally, the analyzer can count the text elements and build a requirements metric. The requirements, business rules, object references, use cases, and processing steps in the use cases are counted, among others. In addition, function points, DataPoints, and use case points are also counted. The goal is to measure the size, complexity, and quality of the requirements documents.

| Attribute | Description |

|---|---|

| Designation | Weekday determination |

| Fulfills | Func-Req-01, Func-Req-02,Func-Req-03, Func-Req-04. |

| Implements | GR-01, GR-02, GR-03, GR-04, GR-05, GR-06, GR-07. |

| Functions | FUNK-01. |

| Received | REQUEST-01. |

| Send | RESPONSE-01. |

| Processed | GO-01, GO-02, GO-03. |

| Trigger | Message_from_client |

| Stakeholders | ClientProgram |

| Preconditions | Client must be authorized. Date must be valid. |

| Postcondition | If fulfilled, day of the week in German, French or Italian. If day of the week is not fulfilled = ???????. |

| Main path | 1) Client sends message with date. 2) Service checks date. 3) If date is valid, service searches weekday in weekday table. 4) If language code is 1, service fetches German weekday. 5) If language code is 2, Service retrieves French day of week. 6) If the language code is 3, the service will get the Italian day of the week. 7) Service returns the selected weekday. |

| Byway | 8) Service returns ?????? back. |

| Exceptions | Service rejects order if client is not authorized. |

| Inherits | Standard date function. |

| Used | Date check |

| Enhanced | Client calendar |

| Comments | This service is only valid for a date since 1900. |

Testing and measurement of the UML model

For auditing and measuring the design model, the SoftAudit tool uses an XML parser that parses the XML schema behind the UML diagrams. When parsing the XML schema, the model types such as classes, objects, methods, attributes, parameters, activities and use cases are detected and counted. At the same time, the relationship types such as association, inheritance, usage, and inclusion are detected and evaluated. Model complexity results from the ratio of model relationships to model elements. The more relationships there are - relative to the number of elements - the higher the complexity of the model:

The model size comes from the number of model elements. The model quality is the ratio of the actual to the target. For example, each class should have only a limited number of dependencies to other classes (coupling degree) and a limited number of attributes relative to the number of methods (cohesion degree). Each class should have at least one attribute and two methods. Sequence diagrams may only contain references to classes that are already defined in a class diagram. In the activity diagrams, only steps of a specified use case may occur, which in turn are defined as methods in a class diagram. In this way, the consistency of the model is checked (cf. 5).

At this point, the first cross-checking beyond semantic levels also follows. It can be checked whether all business objects in the requirements documentation also appear as objects in the object model and whether all use cases specified in the requirements document appear in the use case diagrams. This is where you can check the consistency between the requirements document and the design model. Typical deficiencies in the design model are:

- The class is referenced but not defined ("Class referenced is not defined").

- The entity name does not conform to naming rules.

- The maximum number of parameters allowed to a method is exceeded ("Maximum Number of Parameters to a Method is exceeded").

In turn, the analysis of the UML model produces two reports: a deficiency report of the discrepancies between target and actual and a measurement report with the design metrics (size, complexity and quality).

Checking and measuring the code

The source code is what is checked and measured the most. For this purpose, there are already quite a few tools such as "Software Sonar", "FxCop" (.NET) and "PMD" (Java), which check no less than 180 different coding rules. Also the tool "SoftAudit" checks source code in twelve different languages from IBM Assembler to Java and PHP. Some code rules depend on the programming language, such as:

Equal Operatoris to be used to compare objects.- Classes should be defined with final.

- Methods may only be called via a defined interface.

Other rules apply to a particular class of languages, object-oriented languages for example:

- Class attributes should never be used as

publicdeclared. - Data casting should be avoided.

- Methods should not be nested more than four times.

- Nested classes are to be avoided.

- Each call to a foreign method should be embedded in a try-catch block.

Then there are rules that are universal and apply to all languages, such as:

- Conditions should not have more than three clauses.

- Text literals must not occur in procedural code.

- Multidimensional arrays are to be avoided.

Such rules serve a threefold purpose (cf. 6):

- Make the code consistent.

- Make the code more change-friendly.

- Making the code more secure.

Experienced developers will always question individual rules - and that's a good thing. In doing so, they provide an impetus to reflect on the existing rules and to question their relevance and meaningfulness. In the end, however, a development team must agree on which rules should apply to it.

Besides such elementary statement controls, there are also rules for the code modules in their entirety. These are rules to restrict the size of the code modules, the number of encapsulated data attributes, and the number of external relationships. These rules promote modularity and code reusability. Other rules of this type include rules for limiting the number of parameters in an interface and the width of views of a database. Such rules promote testability. Rules for commenting and indenting nested lines of code and for limiting line lengths promote understandability.

Coding rules, then, are not there to bully developers, but to make it easier for developers to work together, just as traffic rules are there to order traffic on the road. By statically auditing code and detecting rule violations, many potential problems are avoided. Most importantly, it prevents technical debt from spiraling out of control. Robert C. Martin, in his book Clean Code, emphasizes the importance of static code analyzers in maintaining code quality (cf. 7).

Hand in hand with the inspection of the code goes the measurement of it. Statements, statement types, data, data references, objects, and code blocks are counted. Metrics are then calculated from these counts:

- Size metrics such as function points, data points, and test points.

- Complexity metrics such as cyclomatic, linguistic, and referential complexity.

- Quality metrics such as cohesion and coupling, reusability, security, testability, and maintainability.

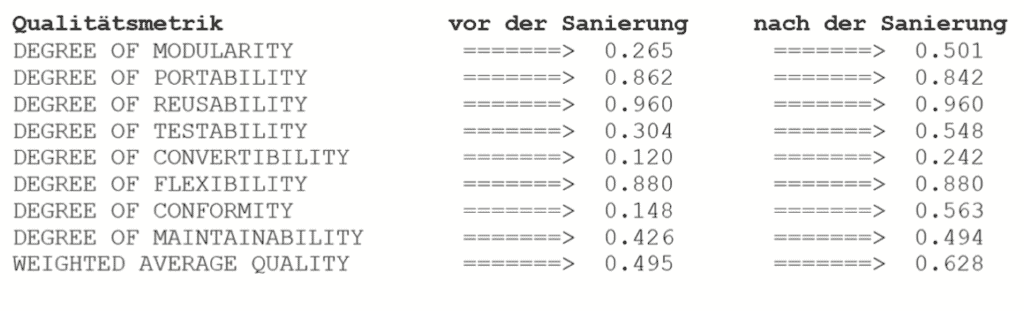

The degree of code quality should of course be as high as possible on the rational scale from 0 to 1. The degree of complexity, in turn, should be as low as possible. These measurement values are important clues for evaluating the software as a whole and provide guidance for the refactoring measures. When it comes to improving the quality of the code through refactoring measures, the quality of the code before and after the improvement measures must be compared. This requires a quantification of the quality.

Test and measurement of test cases

For the longest time, testware was neglected, even though test cases are essential to any software system. No one thought of testing them or even measuring them. Testware was never considered part of the actual software product. It is delivered to customers only in exceptional cases. But this argument also applies to the requirements specification and the design model. It has now been realized that their quality must also be assured - and this applies equally to the testware.

Testware refers to the test procedures, test cases, and test scripts that are used to test the software system and its components. Test scripts are like program code. They have a formal syntax and can be parsed like code. There are also rules for how they should be laid out. The problem here, as with requirements, is that there is no authoritative language standard. Each test tool vendor offers its own scripting language. Nonetheless, anyone who wants to can write a tool to test and measure test scripts, and for some types of scripts there are ready-made analysis tools such as for the WebService test script.

if (operation = "getWeekDay");if (response = "getWeekDay1Response");assert out.$ResponseTime < "1100";if (object = "return");assert out.P1_TT = "16":assert out.P1_MM = "10";assert out.P1_CE = "18";assert out.P1_JJ = {81:89};assert out.LANG_CODE = {1:3};assert out.DIRECTION = "L";assert out.DAY_NAME = "Mittwoch";assert out.RETURN_CODE = {1:3};endObject;endResponse;endOperation;

SoftAudit requires that test cases are stored in an Excel spreadsheet or a relational database table where each column contains a specific attribute of the test case. It is up to the user to define the names and types of test case attributes and record them in a parameter list. The testing tool uses this parameter list to edit the test case table. From this, it checks whether all mandatory attributes are specified, whether the test cases are classified by target, purpose and type, whether the test cases are automated and whether the test cases have already been executed. Because the test cases are referenced to requirements or change requests, it is also checked whether the referenced requirements actually exist. If so, a link is created between the test cases and the requirements. By inverting those links, it is determined which test cases belong to which requirements, or use cases and business rules. There should be no requirement or use case without a test case. All links between test cases and use cases and between test cases and requirements are documented by the tool.

Consistency check across all semantic levels

For the overall evaluation of a software product, it is necessary to summarize the test and measurement results of all partial products. A whole is only as good as the sum of its individual parts plus all relationships between the individual parts.

Software systems are more than just code. They consist of the requirements documents, the design models, the code sections, and the test cases. These layers must be complete and consistent. Therefore, in addition to testing individual artifacts, there must be a test of their consistency. It must be possible to trace the code modules back to the architecture and requirements description above them. Either this is done via common identifiers, via comments that refer to requirement elements, or via the test cases. Test cases link the code modules to the requirements. Each requirement has a test case that confirms the fulfillment of that requirement, and that fulfillment takes place in the code. The quality of the overall system depends on the visibility of all internal and external dependencies. Hence the effort to make those relationships visible.

The consistency check of the software already starts with the requirements documentation. Each functional requirement must be fulfilled by a use case and confirmed by at least one test case. Each use case must in turn be implemented by one or more code modules. Each code module or class must be associated with at least one use case. Static analysis alone makes it possible to detect and register these links. Subsequently, the missing links can be identified as quality defects. Typical consistency defects are:

- Requirements not met by any use case.

- Requirements that are not tested by any test case.

- Use cases that do not meet any requirements.

- Use cases that are not implemented by any code module.

- Code modules that cannot be assigned to a use case.

- Code modules that do not have test cases.

The responsible tester or quality inspector gets a report about the missing relationships in the system and can refer the developers to it without having to test.

The importance of software metrics

Completeness and consistency make up one side of static software quality assurance. It is realized by comparing the measurement results. The other side is conformity with the general and product-specific quality requirements. These requirements are not only expressed in the requirements documents, but also in the numerous publications on the subject of software development, in which 50 years of experience with the construction of software systems are documented.

The painful experience with numerous bad solutions shows what a good solution should look like. The rules for a good solution are formulated in metrics, which set a kind of target benchmark for the software. A metric is nothing more than a quantified empirical value (e.g., software modules should only be loosely coupled so that each can be further developed on its own without affecting the others, or software requirements should be formulated so precisely that no misunderstandings can arise). Tom McCabe's much-cited cyclomatic number is ultimately just an experience rule for constraining flow complexity. The same is true for the other measures such as inheritance depth and class size. The implementation of such empirical rules is best evaluated using quantified measures (cf. 8).

The goal would have to be to combine the measured values from the analysis of all software artifact types in a central metrics database and to compare them there. A number database of this kind would need, on the one hand, several import interfaces to include the measured values from the various analysis tools and, on the other hand, a graphical user interface to present users with diverse views of the numbers. The aim is to express the quality of the software in numbers (cf. 9). The metrics database system of the author Sneed has already proven itself for this purpose in several evaluation projects. In these projects, software systems with up to three million code statements and 40,000 FunctionPoints were tested and measured in order to draw conclusions for the further development of those systems.

Experience with analytical quality assurance

Many decision makers in IT are skeptical about the topic of "static software analysis". They do not recognize the connection between the quality of the system design and the quality of the system behavior. Consequently, they focus their attention and also their resources on what they understand, namely empirical quality assurance - testing according to the motto "the proof of the pudding is in the eating". What they overlook is that much of the software's behavioral problems are due to its design. Design flaws often lead to behavioral flaws. It is much cheaper to uncover such flaws through static analysis than in an elaborate test. This is emphasized by Paul Duvall in his book on "Continuous Integration" (cf. 10).

The other goal of analytical quality assurance - ensuring further development - is often left out entirely. Software executives are too busy with their day-to-day problems to take a look at the future.

In doing so, they overlook the fact that the causes of their day-to-day problems lie largely in the short-sightedness of the past. If they invested more in ensuring system quality, they would have fewer problems later with the maintenance and further development of their systems.

The means of analytical quality assurance are now so advanced that the costs are of little consequence. They are minimal compared to the benefits that such analyses bring. It is therefore to be expected that analytical quality assurance will become increasingly important in the future. Although it will never completely replace testing, it can reveal many quality defects that never come to light in testing.

The article was published in the 04/2014 issue of OBJEKTspektrum.

- H. Sneed, Software Quality Assurance, Rudolf Müller Verlag 1988

- S.H. Kan, In-Process Metrics for Software Testing, in: IBM Systems Journal, Vol. 37, No. 3, 1998.

- T. Gilb, D. Graham, Software Inspection Techniques, Addison-Wesley 1993

- C. Rupp, M. Recknagel, Measurable Quality in Requirements Documents, in: OBJEKTspektrum 4/2006

- S. Chidamer, C. Kemerer, A Metrics Suite for object-oriented Design, in: IEEE Trans on SE, Vol. 20, No. 6, 1994.

- F. Simon, O. Seng, T. Mohaupt, Code Quality Management, dpunkt.verlag 2006

- R. Martin, Clean Code - Refactoring, Patterns, Testing and Techniques for Clean Code, MITB Verlag 2009

- R. Dumke, C. Ebert, J. Heidrich, C. Wille, Measurement and Evaluation of Software, in: Informatikspektrum, Vol. 36, No. 6, 2013.

- H. Sneed, M. Baumgartner, R. Seidl, Software in Zahlen, Hanser Verlag 2011

- P. Duval, S. Matyas, G. Glover, Continuous Integration, in: M. Fowler Signature Books, Addison-Wesley 2012