The greatest barrier to the use of web services is the problem of testing them. This contribution deals with that problem. The solution lies in the ability to simulate the usage of the services. Requests must be generated and responses must be validated automatically in a fast and reliable manner. To accomplish that, the authors have developed a tool – WSDLTest – which uses a test data description language based on pre- and postcondition assertions for both generating WSDL requests and validating WSDL responses. WSDLTest is part of a larger, complex tool set – DataTest – for creating and processing system test data. The architecture and functionality of that tool as well as the experience gained from using it are presented here.

Emergence of Web Services

Web services are becoming increasingly important to the IT business, especially since the advent of service-oriented architecture. IT users are looking for a way to increase the flexibility of their IT systems so as to be able to react quickly to changes in their business environment. If a competitor comes up with a new marketing approach, they have to be able to follow that approach in a short time. Adaptability of the IT systems has become critical to the survival of a company. If new laws or regulations are introduced, such as the Sarbanes-Oxley Act or Basel II, companies have to be able to implement them within months. Changes to laws and regulations cannot be postponed. They have to be implemented by a given deadline, which is often only a short time away.

Under such time pressure, it is no longer possible to plan and organize long-running projects. It is necessary to design and assemble a working solution within a limited time. This requirement for immediate response presupposes the existence of reusable components, which can be glued together within a standard framework to support a customized business process. This standard framework is very often a service-oriented architecture such as that offered by IBM, Oracle and SAP 1. The components are the web services; the overlying business process can be defined with the business process execution language BPEL 2. The glue for binding the business process to the web services as well as for linking the web services to one another is the web service description language – WSDL 3. The web service components themselves are derived from various sources. Some are bought, some are taken from the open source community, some are newly developed and others are taken from existing software systems, i.e. they are recycled to be reused in the new environment. Normally, this entails wrapping them 4.

Necessity for testing Web Services

Regardless of where they come from, no one can ensure that the web service components will work as one might expect. Even those which are bought may not fit the task at hand exactly. The fact that they are not compatible can lead to serious interaction errors. The recycled components may be even worse. Legacy programs tend to contain many hidden errors, which in a given context counterbalance each other. However, when the programs are moved to another environment to perform a slightly different function, the errors suddenly come to the surface. The same can happen with open source components. Perry and Kaiser have demonstrated that the correctness of a component in one environment will not necessarily hold for another environment. Therefore, components have to be retested for every environment in which they are reused 5.

The specific problems associated with the testing of web applications have been addressed by Nguyen. The complexity of the architecture with the interaction between various distributed components – web browser, web server, data server, middleware, application server, etc. – brings with it many new sources of potential errors. The many possible interactions between the components combined with the many different parameters also increase the need for more test cases, which in turn drives up the cost of testing. The only way to cope with these increased costs is through test automation 6.

In the case of self-developed services, the reliability problem is the same as with all new software. They have to be subjected to extensive testing at all levels – at the unit level, at the component level, and, finally, at the system level. Experience with new systems shows that the error rate of newly developed software varies between 3 and 6 errors per 1000 statements 7. These errors have to be located and removed before the software goes into production. A significant portion of these errors are due to false assumptions the developers have about the nature of the task and the behavior of the environment. Such errors can only be uncovered by testing in the target environment – with data produced by others with a different perspective on the requirements. This is the primary rationale for independent testers.

Regardless of where web services come from, they should go through an independent testing process, not only individually, but also in conjunction with one another. This process should be well defined and supported by automated tools, so that it is quick, thorough and transparent. Transparency is of particular importance in testing web services so that test cases can be traced and intermediate results examined. Due to the volume of test data required, it is also necessary to automatically generate the inputs and to automatically validate the outputs. By generating varying combinations of representative test data, a high rate of functional coverage is attained. By comparing the test results with expected results, a high degree of correctness is ensured 8.

Existing tools for testing Web Services

There is no lack of tools for testing web services. In fact, the market is full of them. The problem is not so much with the quantity, but with the quality of the tools. Most of them are recent developments which have yet to mature. They are also difficult to adjust to the local conditions and they require users to submit data via the web client user interface. Testing through the user interface is not the most effective means of testing web services as has been pointed out by R. Martin in a recent contribution to the IEEE Software Magazine. He suggests using a testbus to bypass the user interface and to test the services directly 9. This is the approach which has been followed by the authors.

Typical of the tools on the market is the Mercury tool “Quicktest Professional”. It allows the users to fill out a web page and to submit it. It then follows the request from the client workstation through the network. This is done by instrumenting the SOAP message. The message is traced to the web service that processes it. If that web service invokes another web service, then the link to that service is followed. The contents of each WSDL interface are recorded and kept in a trace file. In this way, the tester is able to trace the path of the web service request through the architecture and to examine the message contents at different stages of processing 10.

Parasoft offers a similar solution. However, rather than starting the request from a web client, it generates requests from the business process procedures written in BPEL. This creates, on the one hand, a larger volume of data while, on the other hand, simulating real world conditions. It is expected that most requests for web services will come from the business process scripts which are driving the business processes. BPEL has been developed for that purpose, so it is natural to test with it. What is missing in the Parasoft solution is the capability of verifying the responses. They have to be inspected visually 11.

One of the pioneers in web testing is the company Empirix. The eTester tool from Empirix allows testers to simulate the business processes using the web clients. Their requests are recorded and translated into test scripts. The testers can then alter and vary the scripts to mutate one request into several variations for a comprehensive functional test. The scripts are in Visual Basic for Applications, so it is easy for any person familiar with VB to work with them. With the scripts it is further possible to verify the response results against the expected results. Unexpected results are sorted out and fed to the error reporting system 12.

Other testing companies such as Software Research Associates, Logica and Compuware are all working on similar approaches, so it is only a question of time until the market is flooded with web service testing tools. After that it will take some time before the desired level of tool quality is reached. Until this is the case, there is still some potential for customized solutions such as the one described in this paper. The various approaches to test automation are covered by Graham and Fewster 13.

The WSDLTest Approach

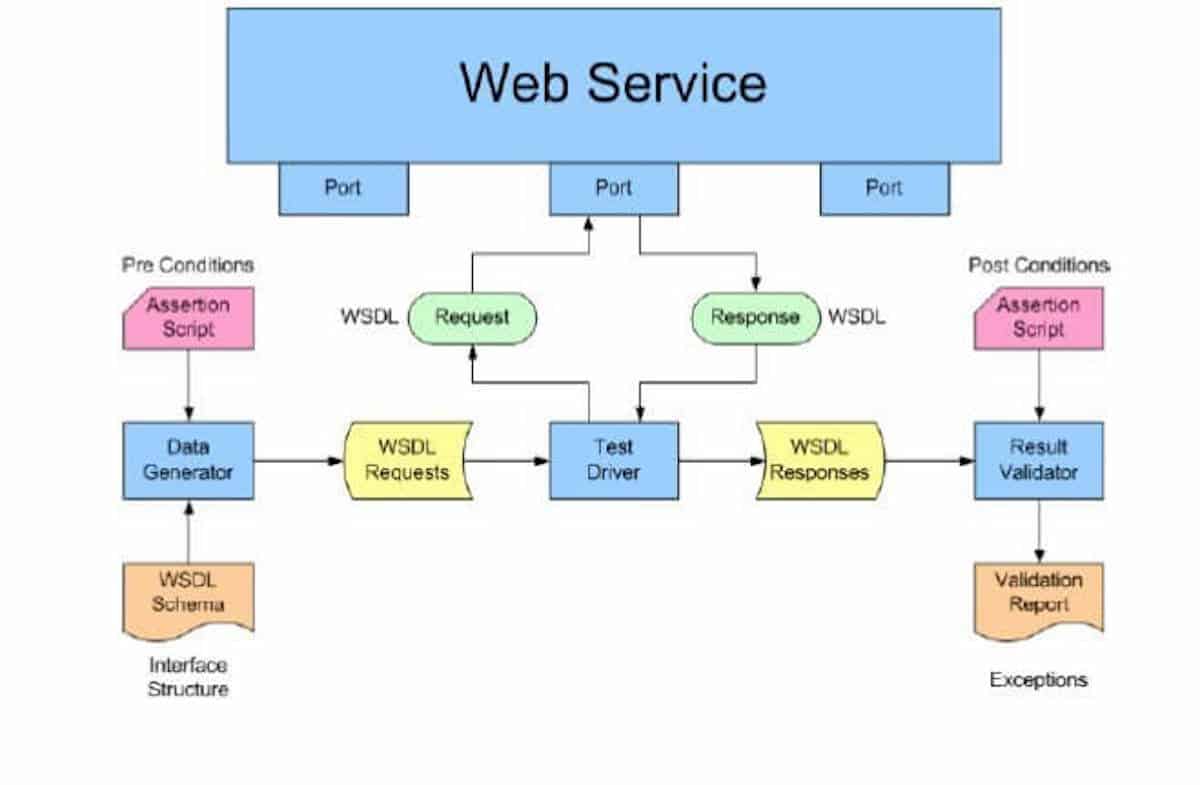

The WSDLTest tool takes a slightly different approach than the other commercial web service testing tools. It is based on the schema of the WSDL description, i.e. it starts with a static analysis of the schema. From that schema two objects are generated. One is a service request template. The other is a test script. The test script allows the user to manipulate the arguments in the web service request template. It also allows the user to verify the results in the web server response. The test driver is a separate tool which reads and dispatches the web service requests and which receives and stores the web service responses.

The Motivation for developing this Tool

It is often the circumstances of a project which motivate the development of a tool. In this case the project was to test an eGovernment website. The general user, the citizen, was to access the website through the standard web user interface. However, the local governments had IT systems which also needed to access the site in order to obtain information from the central state database. For this purpose it was decided to offer them a web service interface. Altogether nine different services were defined, each with its own request and response formats.

The user interface to the eGovernment website was tested manually by human testers simulating the behavior of potential users. For the web services a tool was needed to simulate the behavior of the user programs by automatically generating typical requests and submitting them to the web service. Since the responses from the web service are not readily visible, it was also necessary to automatically validate the responses. Thus the motivation for developing the tool could be summarized as follows:

- Web Services cannot be trusted, therefore they must be subjected to intensive testing

- All requests with all representative combinations of arguments should be tested

- All responses with all representative result states should be validated

- To test Web Services, it is necessary to generate WSDL requests with specific arguments for verifying target functions

- To verify the correctness of Web Services, it is necessary to validate the WSDL responses against expected results

Generating a Template Request from the WSDL Schema

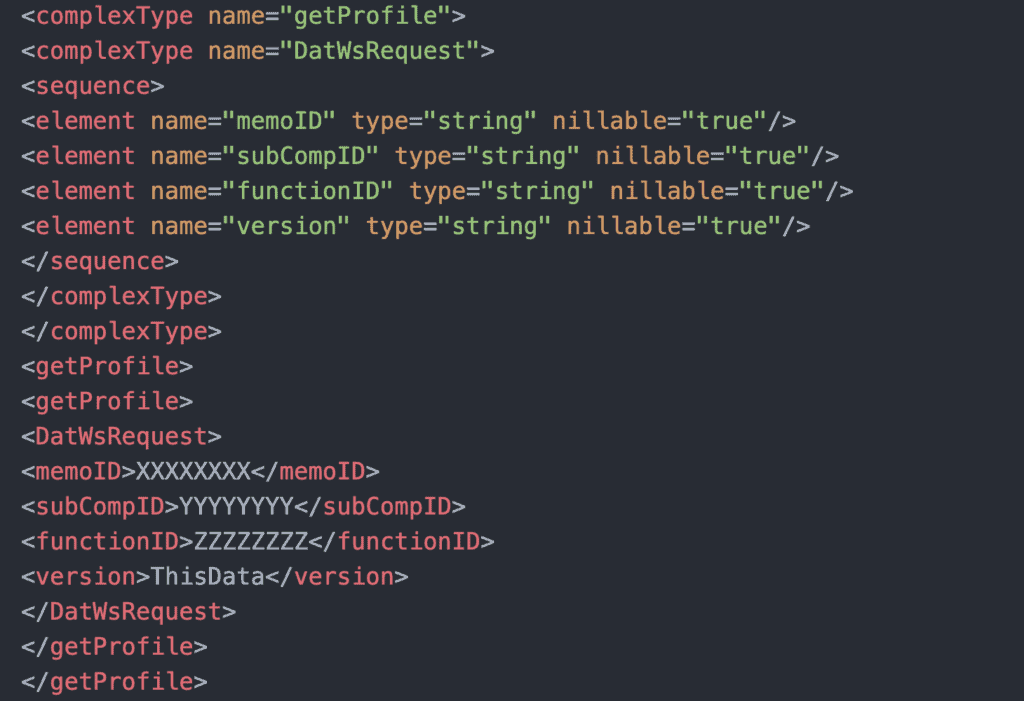

Every test is a test against something. There has to be a source of the test data and there has to be an oracle to compare the test results against 14. In the case of WSDLTest the oracle is the WSDL schema. That schema is either generated automatically from the interface design or it is written manually by the developer. As a third and more advanced alternative, it can be created from the BPEL process description. Regardless of how it is created, the schema defines the basic complex data types in accordance with the rules of the XML schema standard. Complex data types can include other complex data types so that the data tree is represented with single and multiple occurrences of the tree nodes. The schema then defines the base nodes, i.e. the tops of the tree and their sequence. These are the actual parameters. The following example is an excerpt taken from the type definitions of an eGovernment web service schema.

Following the parameter description come the message descriptions, which identify the names and the component parts of each message, whether it be a request or a response. After that follow the port type definitions. Each service operation to be invoked is listed with the names of its input and output messages. These message names are references to the messages defined before, which again are references to the parameters defined before that. After the port types come the bindings describing the SOAP prototypes composed of service operations. At the end, the web service interface is given a name.

A WSDL schema is a tree structure where the SOAP prototypes refer to the service operations, which in turn refer to the logical messages, which in turn refer to the parameters, which refer to the various data types. The data types may in turn refer to one another. Parsing this tree is called tree walking 15. The parser selects a top node and follows it down through all of its branches, collecting all of the subordinate nodes on the way down. At the bottom of each branch it will find the basic data types such as integers, booleans and strings. WSDLTest goes a step further by assigning each basic data type a set of representative data values. For instance, integer values are assigned a range from 0 to 10000 and string values are assigned varying character combinations. These representative data sets are stored in tables and can be edited by the user prior to generating the test data. Thus the complete structure of the WSDL interface is as follows:

Web service interface → SOAP prototypes → service operations → logical messages → parameters → data types → elementary data types → representative values

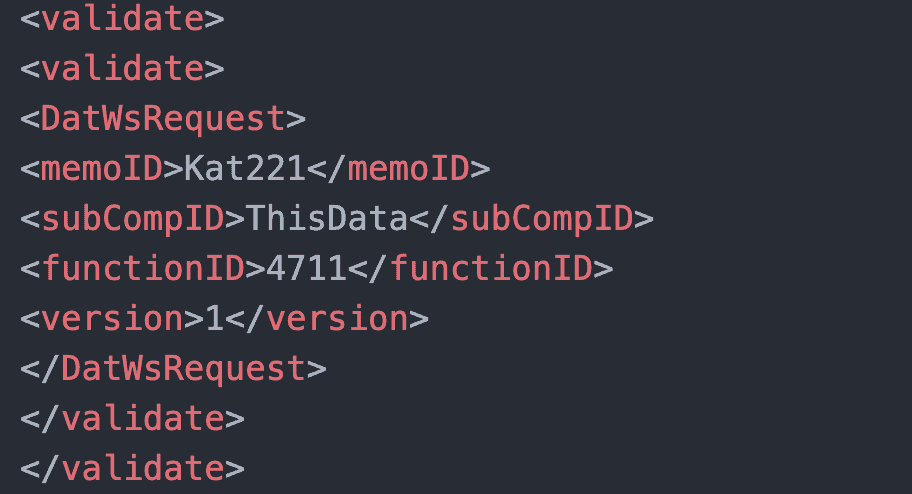

The task of the data generator is to walk the WSDL schema tree down to the level of the basic data types and to select representative values for that type. The values are selected randomly from the set of possible values. From these values an XML data group is created such as follows:

In this way a WSDL service request file with sample data is generated and stored for future use. Simultaneously, a test script is created which allows the tester to override the values originally generated. This script is a template with the data element names and their values.

By means of altering the script, the tester can now alter the values of the service request. Since a script is also generated for the output data, the tester is given a template for verifying the service responses.

We see here the results of the schema analysis, namely a WSDL template which serves as an input to the final WSDL test data generation. A WSDL request is a cascading data structure which begins with type definitions which are referred to by the message definitions, which are referred to by the input/output operations assigned to a port. Since most of the code such as that defining the ports and the SOAP containers is of a purely technical nature and also highly repetitive, it is practical to have a WSDL template to copy and adapt to the particular web service request.
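The tree walk and the selection of representative values described in this section can be illustrated with a minimal sketch. It is written in Python purely for illustration and is not the WSDLTest implementation; the type names, element names and value tables below are assumptions made up for the example.

```python
import random
import xml.etree.ElementTree as ET

# Hypothetical representative value tables, keyed by elementary data type.
REPRESENTATIVE_VALUES = {
    "integer": [0, 1, 100, 9999, 10000],
    "string": ["A", "abc", "Sample Text"],
    "boolean": ["true", "false"],
}

# Hypothetical schema tree: a complex type maps child names to type names.
# Any type name found in REPRESENTATIVE_VALUES counts as elementary.
COMPLEX_TYPES = {
    "AccountRequest": {"accountNumber": "integer", "owner": "Person"},
    "Person": {"name": "string", "active": "boolean"},
}


def build_element(name, type_name, rng):
    """Walk the type tree depth-first and fill the leaves with sample values."""
    element = ET.Element(name)
    if type_name in REPRESENTATIVE_VALUES:
        # Bottom of a branch: pick one representative value at random.
        element.text = str(rng.choice(REPRESENTATIVE_VALUES[type_name]))
    else:
        # Complex type: descend into every child node.
        for child_name, child_type in COMPLEX_TYPES[type_name].items():
            element.append(build_element(child_name, child_type, rng))
    return element


request = build_element("AccountRequest", "AccountRequest", random.Random(42))
print(ET.tostring(request, encoding="unicode"))
```

Passing a seeded random generator keeps the generated template reproducible, which is convenient when the same request has to be regenerated for a later test run.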

Writing precondition assertions

The test scripts for WSDLTest are sequences of precondition assertions defining possible states of the web service request. It should be noted that the same assertion script language is also used for testing other types of data such as relational databases, XML files and text files. A state is a combination of given values for the data types specified in the WSDL interface definition. The values are assigned to the individual data elements, but their assignment may be mutually dependent, so that a particular combination of values can be determined by the tester, e.g.:

assert new.Account_Status = "y" if(old.Account_Balance < "0");

For assigning test data values there are six different assertion types:

- the assignment of another existing data value from the same interface

- the assignment of a constant value

- the assignment of a set of alternate values

- the assignment of a value range

- the assignment of a concatenated value

- the assignment of a computed value

The assignment of another existing data value is done by referring to that value. The value referred to must be within the same WSDL.

assert new.Account_Owner = old.Customer_Name;

The assignment of a constant value is done by giving the value as a literal in that statement. All literals are enclosed in quotes.

assert new.Account_Balance = "0";

The assignment of a set of alternate values is made by means of an enumeration. The enumerated values are separated by an or sign “!”.

assert new.Account_Status = "0" ! "1" ! "2" ! "3";

According to this assertion the values will be assigned alternately starting with 0. The first account occurrence will have the status 0, the second the status 1, the third the status 2 and so on.

The assignment of a value range is for the purpose of boundary analysis. It is made by giving the lower and upper bound of a numeric range.

assert new.Account_Status = ["1" : "5"];

This will cause the assignment of the values 0, 1, 2, 4, 5 and 6 in alternating sequence, i.e. each bound together with the values one below and one above it. The first account occurrence will have the status 0, the second 1, the third 2, the fourth 4, the fifth 5, and the sixth 6.
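The value sequences produced by the alternate and range assertions can be sketched as follows. This is an illustrative Python sketch, not the tool's code; the function names are hypothetical, and the removal of duplicates for very narrow ranges is an assumption that the text does not address.

```python
from itertools import cycle, islice


def alternate_values(values, occurrences):
    """Assign the enumerated values in turn, restarting after the last one."""
    return list(islice(cycle(values), occurrences))


def boundary_values(lower, upper):
    """Return each bound together with its neighbours one below and one above."""
    candidates = [lower - 1, lower, lower + 1, upper - 1, upper, upper + 1]
    # Assumption: duplicates arising from narrow ranges are dropped, order kept.
    return list(dict.fromkeys(candidates))


print(alternate_values(["0", "1", "2", "3"], 6))
print(boundary_values(1, 5))
```

For the range `["1" : "5"]` this sketch yields the same six values as listed above.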

The assignment of a concatenated value is done by joining two or more existing values with two or more constant values in a single string.

assert new.Account_Owner = "Mr. " | Customer_Name | " from " | Customer_City;

The assignment of a computed value is given as an arithmetic expression in which the arguments may be existing values or constants.

assert new.Account_Balance = old.Account_Balance / "2" + "1";

The assert assignments can be conditional or unconditional. If they are conditional they are followed by a logical expression comparing a data variable of the WSDL interface with another data variable of the same interface or with a constant value.

assert new.Account_Owner = "Smith"

if(old.Account_Number = "100922" &

old.Account_Balance > "1000");

The tester adapts the assertion statements in the script generated from the WSDL schema to provide a representative test data profile, including equivalence classes and boundary analysis as well as progressive and degressive value sequences. The goal is to manipulate the input data so that a wide range of representative service requests can be tested. To achieve this, the tester should be familiar with what the web service is supposed to do and assign the data values accordingly.

file: DAT-WS;

if (object = "DatWsRequest");

assert new.memoID = old.memoID;

assert new.subCompID = old.subCompID;

assert new.functionID = "4711";

assert new.version = "1";

endObject;

if (object = "DatWSProfile");

assert new.attributeName = old.attributeName;

assert new.typ = 21 ! 22 ! 23;

assert new.value = "Sneed";

endObject;

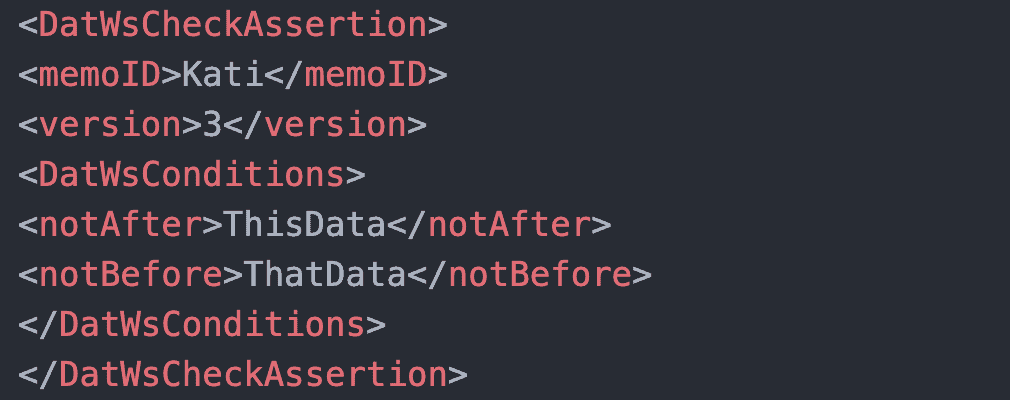

if (object = "DatWsCheckAssertion");

assert new.memoID = "Kati";

assert new.version = "3";

assert new.notAfter = "ThisData";

assert new.notBefore = "ThatData";

endObject;

end;

Overriding the Template Data

Once the assertion scripts are available, it is possible to overwrite the template of a web service request with the asserted data. This is the task of the XMLGen module. It matches the WSDL file generated by the WSDLGen module with the assertion script written by the tester. The data names in the assertion script are checked against the WSDL schema and the assertions are compiled into symbol tables. There are different tables for the constants, the variables, the assignments and the conditions.

After the assertion script has been compiled, the corresponding WSDL file is read and the data values are replaced by the values derived from the assertions. If the assertion refers to a constant, the constant replaces the existing value of the XML data element with the name corresponding to that in the assertion. If the assertion refers to a variable, the value of the XML data element with that variable name is moved to the target data element. Alternate values are assigned one after the other in the order given until the last value has been reached; then the assignment starts again with the first value. Range values are assigned as the boundary values plus and minus one.

In the end, a sequence of web service requests exists with varying representative states combining the original generated data with the data assigned by the assertion scripts. By altering the assertions, the tester can alter the states of the requests, thus ensuring a maximum data coverage.
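The overriding step can be pictured with a small sketch, given here in Python for illustration only. The compiled symbol tables are reduced to a single dictionary, and the `requestor` element is a hypothetical addition to show a variable reference; neither reflects the actual XMLGen data structures.

```python
import xml.etree.ElementTree as ET

# Hypothetical generated template with placeholder values.
TEMPLATE = """<DatWsRequest>
  <memoID>M-17</memoID>
  <functionID>0</functionID>
  <version>0</version>
  <requestor></requestor>
</DatWsRequest>"""

# Hypothetical compiled assertions: target element -> (kind, argument).
ASSERTIONS = {
    "functionID": ("constant", "4711"),
    "version": ("constant", "1"),
    "requestor": ("variable", "memoID"),  # copy the value of another element
}


def apply_assertions(xml_text, assertions):
    """Overwrite template values with asserted ones; leave the rest untouched."""
    root = ET.fromstring(xml_text)
    # Snapshot the "old" values so variable references read the template state.
    old = {child.tag: child.text for child in root}
    for child in root:
        if child.tag not in assertions:
            continue  # no assertion: the generated value remains
        kind, argument = assertions[child.tag]
        child.text = argument if kind == "constant" else old[argument]
    return root


request = apply_assertions(TEMPLATE, ASSERTIONS)
print(ET.tostring(request, encoding="unicode"))
```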

Activating the Web Services

Having generated the web service requests, it is now possible to dispatch them to the server. This is the task of the WS test driver. It is a simulated BPEL process with a loop construct. The loop is driven by a list of web services ordered by the sequence in which they should be invoked. The tester can edit the list to alter the sequence in accordance with the test requirements.

From the list the test driver takes the name of the next web service to be invoked. It then reads the generated WSDL file with the name of that web service and dispatches a series of requests to the specified service. When testing in synchronous mode, it will wait until a response is received before dispatching the next request. When testing in asynchronous mode, it will dispatch several requests until it comes to a wait command in the web service list. At this point it will wait until it has received responses for all of the services dispatched before continuing with the next request.

The responses are accepted and stored in separate response files to be verified later by a post processor. It is not the job of the driver to create requests or to check the responses. The requests are created by the preprocessor. The driver only dispatches them. The responses are checked by the post processor. The driver only stores them. In this way the role of the test driver is reduced to that of a simple dispatcher. BPEL procedures are very appropriate for that, since they have all of the necessary features for invoking web services within a predefined work flow.
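The driver loop with its two modes can be sketched as below. The real driver is described as a simulated BPEL process; this Python version only mirrors the control flow, and the service names and the `fake_send` stand-in for the actual web service call are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def run_driver(service_list, send, asynchronous=False):
    """Dispatch requests in list order; 'wait' entries act as barriers."""
    responses = {}
    with ThreadPoolExecutor(max_workers=4) as pool:
        pending = {}
        for entry in service_list:
            if entry == "wait":
                # Block until every outstanding request has been answered.
                for name, future in pending.items():
                    responses[name] = future.result()
                pending.clear()
            elif asynchronous:
                # Asynchronous mode: dispatch and continue without waiting.
                pending[entry] = pool.submit(send, entry)
            else:
                # Synchronous mode: wait for the response before continuing.
                responses[entry] = send(entry)
        # Collect anything still outstanding at the end of the list.
        for name, future in pending.items():
            responses[name] = future.result()
    return responses


def fake_send(service_name):
    """Stand-in for the real web service call, which is not shown in the text."""
    return f"<response service='{service_name}'/>"


services = ["GetAccount", "GetOwner", "wait", "CloseAccount"]
print(run_driver(services, fake_send, asynchronous=True))
```

The driver only stores what it receives; checking the responses is left to a separate step, in line with the division of labour described above.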

Writing Post Condition Assertions

The same assertion language is used for verifying the web service responses as is used for constructing the web service requests. Only here, the assertions have an inverse meaning. Data is not assigned from existing variables and constants, but compared with the data values of previous responses or with constant values.

The comparison with another existing data value is done by referring to that value.

assert new.Account_Owner = old.Account_Owner;

The comparison with a constant value is done by giving the value as a literal in that statement. As with the assignment, literals are enclosed in quotes.

assert new.Account_Balance = "33.50";

To check whether the response data matches at least one of a list of values, the alternate-assertion is used.

assert new.Account_Status = "0" ! "1" ! "2" ! "3" ;

An assertion can also check whether the response data is within a range of values (e.g. between 100 and 500).

assert new.Account_Balance = ["100.00" : "500.00"];

The assertion for comparing can also comprise computed values. First the expression is evaluated, then it is checked against the response data.

assert new.Account_Balance = old.Account_Balance - "50";

As in the case of the pre conditions, the post conditions can be unconditional or conditional. If they are conditional then they are qualified by a logical if-expression. The expression can contain a comparison of two variables in the web service response or of a variable in the response with a constant value.

assert new.Account_Status = "3"

if(old.Account_Balance < "1000");

In all cases, if the assertion is not true, an error message is recorded displaying both the expected and the actual value. Provided there is an assertion check for each attribute of the WSDL response, the verification of the response will be 100%. It is not, however, required to check every attribute. The tester may decide to restrict the check to only critical variables. If so, the data coverage will be less. Data coverage is measured in terms of the number of asserted results relative to the sum of all results.
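The checking of postconditions and the coverage measure can be sketched as follows. This is an illustrative Python sketch under simplifying assumptions: each assertion is represented by a textual description of the expected value plus a predicate, and the response is a flat dictionary of attribute values. The attribute values shown are invented for the example.

```python
def check_response(response, assertions):
    """Compare asserted attributes; return error messages and data coverage."""
    errors = []
    for name, (expected, predicate) in assertions.items():
        actual = response.get(name)
        if actual is None or not predicate(actual):
            # Record both the expected and the actual value.
            errors.append(f"{name}: expected {expected}, actual {actual!r}")
    # Coverage: asserted results relative to all results in the response.
    asserted = sum(1 for name in assertions if name in response)
    coverage = asserted / len(response) if response else 0.0
    return errors, coverage


# Hypothetical response with four attributes, two of which are asserted.
response = {
    "Account_Number": "100922",
    "Account_Owner": "Smith",
    "Account_Status": "3",
    "Account_Balance": "620.00",
}
assertions = {
    "Account_Status": ('"0" ! "1" ! "2" ! "3"', lambda v: v in {"0", "1", "2", "3"}),
    "Account_Balance": ('["100.00" : "500.00"]', lambda v: 100.0 <= float(v) <= 500.0),
}

errors, coverage = check_response(response, assertions)
print(errors)
print(f"data coverage: {coverage:.0%}")
```

With two of four attributes asserted, the coverage in this example is one half; asserting every attribute would bring it to 100%.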

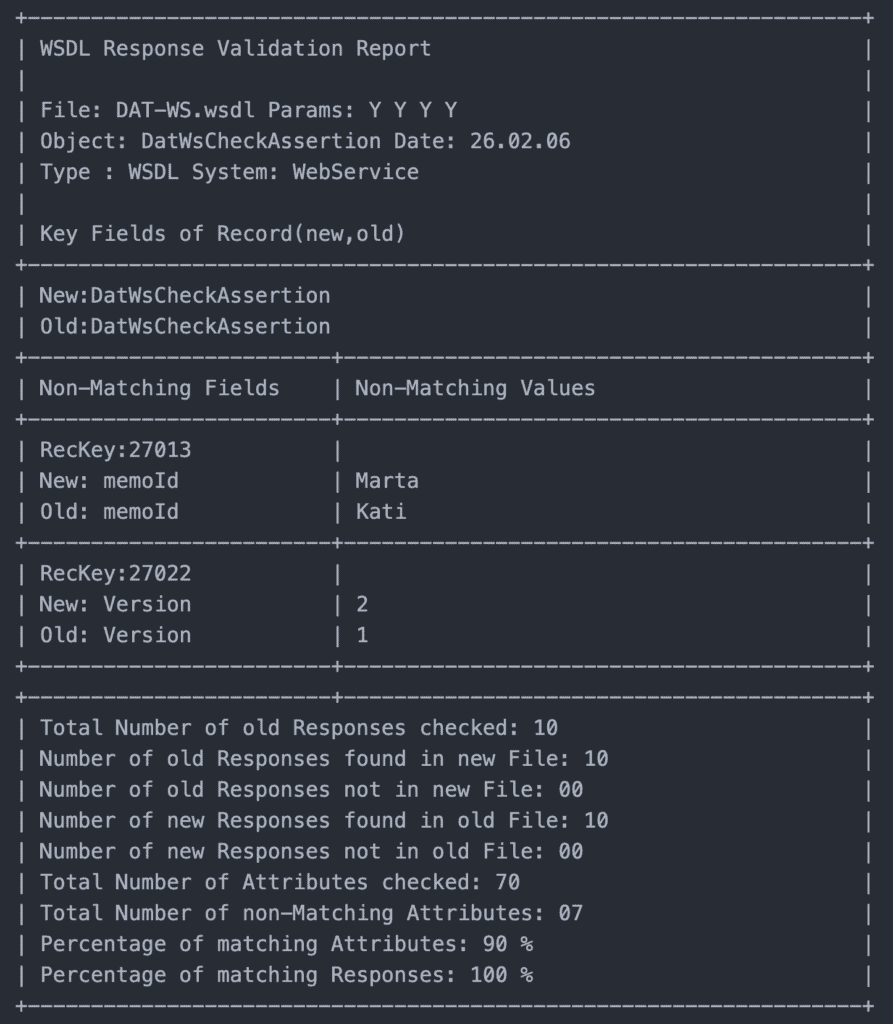

Validation of the answers

The module XMLVal fulfills the task of verifying the web service results. For this it must first compile the postcondition assertions into internal tables of variable references, constants, enumerations and ranges. In doing so, it checks the data names and types against the names and types declared in the WSDL schema to ensure consistency.

Having succeeded in compiling the assertion scripts, the tool then uses the compiled table to check the web service response. First, the expected values are stored in a table with a key for each object occurrence. Secondly, it parses the WSDL result file matching the objects there with the objects in the assertion tables. If a match is found, the attributes of that object are extracted and their values compared with the expected values. If they do not match the verification condition, the data names and values are written out in a list of non matching results. It is then the task of the tester to explore why the results do not match. In addition to listing out the assertion violations, the XMLVal tool also produces some statistics on the degree of data coverage and the degree of correctness.

Components of the WSDLTest Tool

The WSDLTest tool was put together from several components. These are:

- GUI shell

- XML File Writer

- XML File Reader

- assertion compiler

- XSD Tree Walker

- WSDL Analyzer

- table processor

- error handler

- Random Request Data Generator

- Selective Request Generator

- Request Validator

- Validation Report Generator

- WS Test Driver

Some of these components were taken from an existing tool, others were newly developed. The concept of XML processing is published 16 and can be reused. The assertion language and the assertion compiler were taken from an earlier tool and extended. This allowed a prototype version to be ready for use in less than a month. Later, the tool was refined and extended. In its original form, the tool was structured as follows:

- The shell is implemented with Borland Delphi

- The core is implemented with Borland C++

- The tool uses no database, it only uses temporary work files

- The tool was designed to be run in a MS Windows environment or equivalent thereof

- The shell and core are connected via an XML parameter file

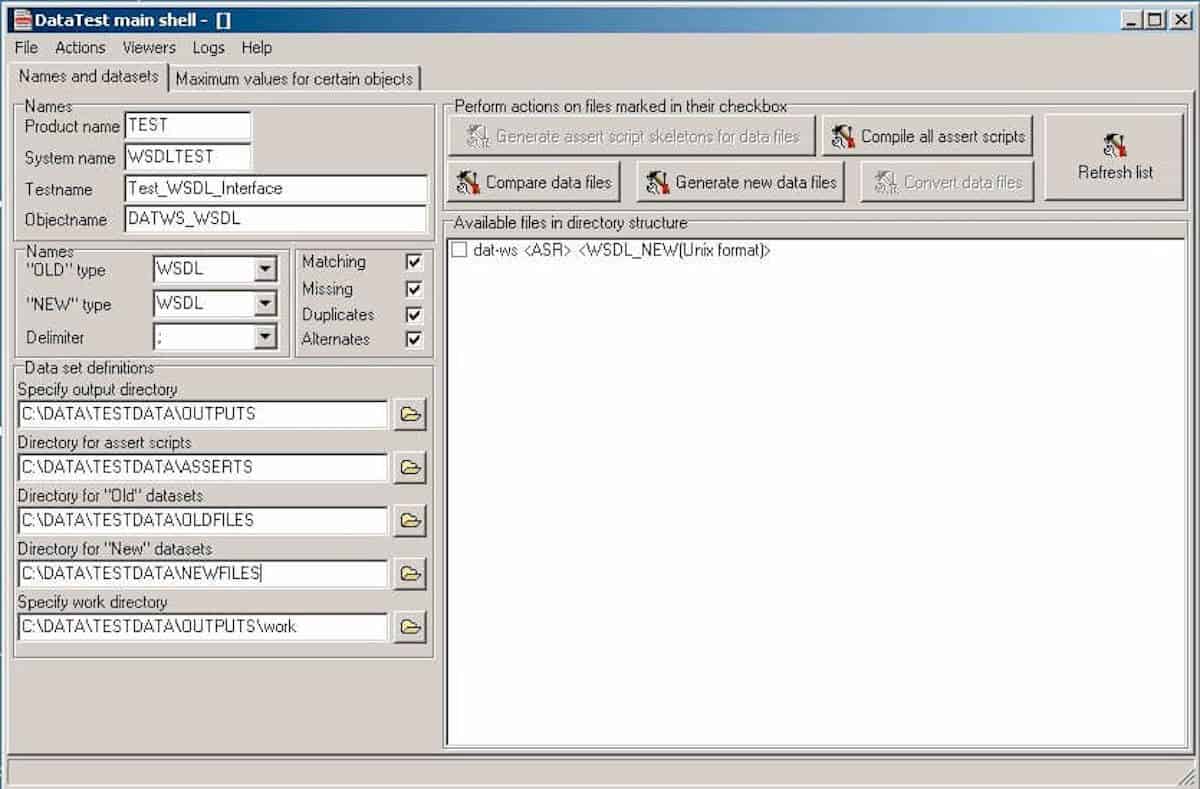

The User Interface

The Windows interface of WSDLTest is designed to accept parameters from the user, to select files from a directory and to invoke the backend processes.

The three directories from which files can be selected are:

- the assertion directory with the assertion text files

- the old file directory with the test inputs – csv, sql, xml and wsdl files

- the new file directory with the test outputs – csv, xml and wsdl files

In addition there is an output directory where the protocols and reports are collected. It is essential that the file names are the same in all four directories, since this is how they are associated. Only the extension may vary. Thus, the actual response “Message.wsdl” is compared with the expected response “Message.wsdl” using the assertion script “Message.asr” to produce the report “Message.rep”. The assertion scripts have the extension .asr. The names of all files belonging to a particular project are displayed together with their types for the user to select from.
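The association of files by their shared base name can be sketched as follows. This Python sketch is only an illustration of the naming convention; the function names and the directory arguments are assumptions, and a real project directory may hold several files with the same base name, in which case this sketch simply keeps the last one found.

```python
from pathlib import Path


def associate_files(assertion_dir, old_dir, new_dir):
    """Group files from the three directories by their shared base name."""
    groups = {}
    roles = (("assertion", assertion_dir), ("old", old_dir), ("new", new_dir))
    for role, directory in roles:
        for path in sorted(Path(directory).iterdir()):
            if path.is_file():
                # The stem is the file name without its extension.
                groups.setdefault(path.stem, {})[role] = path
    # Only complete triples can be processed into a report.
    return {stem: files for stem, files in groups.items() if len(files) == 3}


def report_path(output_dir, stem):
    """Derive the report file name for a given base name."""
    return Path(output_dir) / f"{stem}.rep"
```

Called with the assertion, old and new directories of a project, `associate_files` would pair “Message.asr” with the two “Message.wsdl” files, and `report_path` would yield “Message.rep” in the output directory.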

The Assertion Compiler

The assertion compiler was designed to read the assertion scripts and to translate them into internal tables which can be interpreted at test time. A total of nine tables are generated for each file or object. These are:

- A header table with information about the test object

- A key table with one entry for up to 10 keys used to relate the old and new files

- An assertion table with an entry for up to 80 assertion statements

- A condition table with an entry for each pre condition to be fulfilled

- A constant table with an entry for each constant value to be compared or generated

- An alternates table with an entry for each alternate value an attribute may have

- A concatenation table with an entry for each concatenated value

- A computational table with the operands and operators of the arithmetic expressions

- A replacement table with up to 20 fields, whose values can be substituted by other values

The assertion compilation has to take place before a file can be generated or validated. It is of course possible to compile many assertion scripts at one time before starting with the generation and validation of files. The results of the assertion compilation are written out in a log file which is displayed to the user at the end of each compile run.
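How assertion statements might be translated into tables can be sketched as below. This Python sketch is a strong simplification and not the actual compiler: it handles only unconditional assertions with a variable reference, a constant, an enumeration or a range, and the table layout is an assumption.

```python
import re

STATEMENT = re.compile(r'assert\s+new\.(\w+)\s*=\s*(.+?)\s*;\s*$')
LITERAL = re.compile(r'"([^"]*)"')

SCRIPT = '''
assert new.Account_Owner = old.Customer_Name;
assert new.Account_Balance = "0";
assert new.Account_Status = "0" ! "1" ! "2" ! "3";
assert new.Account_Status = ["1" : "5"];
'''


def compile_assertions(script):
    """Translate assertion statements into simple lookup tables."""
    tables = {"assertions": [], "variables": [], "constants": [],
              "alternates": [], "ranges": []}
    for line in script.splitlines():
        match = STATEMENT.match(line.strip())
        if not match:
            continue  # blank lines and unsupported statements are skipped
        target, expression = match.groups()
        if re.fullmatch(r'"[^"]*"', expression):
            kind, entry = "constants", expression.strip('"')
        elif expression.startswith("["):
            lower, upper = LITERAL.findall(expression)
            kind, entry = "ranges", (lower, upper)
        elif "!" in expression:
            kind, entry = "alternates", LITERAL.findall(expression)
        else:
            # Variable reference such as old.Customer_Name: keep the name only.
            kind, entry = "variables", expression.split(".", 1)[-1]
        tables[kind].append(entry)
        # The assertion table points at the entry in the detail table.
        tables["assertions"].append((target, kind, len(tables[kind]) - 1))
    return tables


tables = compile_assertions(SCRIPT)
for name, rows in tables.items():
    print(name, rows)
```

Keeping one detail table per assertion kind, with the assertion table holding only an index, loosely mirrors the separation into constant, alternates and other tables listed above.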

The WSDL Request Generator

The request generator consists of three components:

- schema preprocessor

- random request generator

- data assigner

XML schemas are normally generated by some tool. This causes tags with many attributes to be written together into one line. To make the XML text more readable and easier to parse, the schema pre-processor splits up such long lines into many indented short lines. This simplifies the processing of the schema by the subsequent components.

The random request generator then creates a series of WSDL requests using constant values as data. The assigning of constants depends on the element type. String data is assigned from representative strings, numeric data by means of adding or subtracting constant intervals. Date and time values are taken from the clock. Within the request the data may be given as a variable between the tags of an element or as an attribute of an element. This poses a problem since attributes do not have types. They are by definition strings. So here only string values are assigned. The end result is a request in XML format with randomly assigned data elements and attributes.

The data assigner reads in the WSDL requests which were randomly generated and writes the same requests out again with adjusted data variables. In the assertion scripts the user assigns specific arguments to the request elements by name. By compiling the assertion scripts the names and arguments are stored in symbol tables. For each data element of the request the data assigner accesses the symbol tables and checks if that data element has been assigned an asserted value or set of values. If so the random value of that element is overwritten by the asserted value. If not, the random value remains. In this way the WSDL requests become a mixture of random values, representative values and boundary values. Through the assertions the tester can control what arguments are sent to the web service. The WSDL requests are stored in a temporary file where they are available to the request dispatcher. To distinguish between requests, each request is assigned a test case identifier as a unique key.

The WSDL Response Validator

The response validator consists of only two components:

- response preprocessor

- data checker

The response pre-processor works in a similar way as the schema pre-processor. It unravels the responses that come back from the web service to make them easier to process by the data checker. The responses are taken from the queuing file of the test dispatcher.

The data checker reads the responses and identifies the results either by their tag or by their attribute name. Each tag or attribute name is checked against the data names in the output assertion table. If a match is found the value of that element or attribute is compared against the asserted value. If the actual value varies from the asserted value a discrepancy is reported in the response validation report. This is repeated for every response.

In order to distinguish between responses the test case identifier assigned to the request is also inherited by the response so that each response is linked to a particular request. This enables the tester to include the test case identifier in the assertions and to compare responses based on the test case.
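The checking logic can be sketched as follows in Python. The sketch assumes that the output assertion table is a dictionary keyed by test case identifier, that the identifier is available as a `testCaseId` attribute on the response root, and that an assertion is a simple equality check. These are simplifying assumptions made for illustration; the assertion language of WSDLTest is not reproduced here, and `check_response` is a hypothetical name.

```python
import xml.etree.ElementTree as ET


def check_response(response_xml, output_assertions):
    """Return (test case id, name, expected, actual) for every mismatch."""
    root = ET.fromstring(response_xml)
    test_case_id = root.get("testCaseId")
    expected = output_assertions.get(test_case_id, {})
    discrepancies = []
    for elem in root.iter():
        # A result is identified either by its tag or by an attribute name.
        found = [(elem.tag, (elem.text or "").strip())]
        found.extend(elem.attrib.items())
        for name, actual in found:
            if name in expected and actual != str(expected[name]):
                discrepancies.append(
                    (test_case_id, name, str(expected[name]), actual)
                )
    return discrepancies
```

Names that do not occur in the assertion table are ignored, so only the results the tester has made a statement about can produce an entry in the report.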

The WSDL Request Dispatcher

The WSDL request dispatcher is the only Java component. It takes the generated requests from the input queue file, puts them in a SOAP envelope and sends them to the desired web service. If there is a problem reaching the web service, it handles the exception itself. Any other exception is simply recorded, and the dispatcher moves on to the next request. Responses are taken from the SOAP envelope and stored in the output queue file, where they are uniquely identified by the test case identifier.
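The dispatcher itself is written in Java; the following sketch uses Python only to outline the control flow of wrapping, sending and unwrapping. The queue files are replaced by dictionaries keyed by test case identifier, and the actual transport is passed in as a `send` function, so that no particular HTTP library or endpoint is implied. All function names are hypothetical, and recording a failure before continuing is one assumed way of handling it.

```python
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
ET.register_namespace("soap", SOAP_NS)


def wrap_in_envelope(request_xml):
    """Place one request inside a SOAP envelope body."""
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    body.append(ET.fromstring(request_xml))
    return ET.tostring(envelope, encoding="unicode")


def unwrap_envelope(envelope_xml):
    """Return the first child of the SOAP body as text."""
    body = ET.fromstring(envelope_xml).find(f"{{{SOAP_NS}}}Body")
    return ET.tostring(body[0], encoding="unicode")


def dispatch(requests, send):
    """Send every request; requests maps test case id to request XML."""
    responses, errors = {}, {}
    for test_case_id, request_xml in requests.items():
        try:
            reply = send(wrap_in_envelope(request_xml))
            responses[test_case_id] = unwrap_envelope(reply)
        except OSError as exc:
            # Record the failure and carry on with the next request.
            errors[test_case_id] = str(exc)
    return responses, errors
```

Keying both result dictionaries by the test case identifier mirrors the way responses in the output queue file remain attributable to the request that caused them.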

The request dispatcher also records the time each test case is sent and the time the response is returned. These are then the start and end times of each test case. By instrumenting the methods of the web services with probes that record when they were executed, it is possible to associate specific methods with specific test cases. This makes it easier to identify errors in the web services. It also allows identification of the specific methods that are traversed as a request passes through one or more web services. This is a topic for future research 17.
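One way to associate probe records with test cases is to match probe timestamps against the recorded start and end times. The Python sketch below assumes that test cases are executed one after another, so that their time windows do not overlap, and that the clocks of dispatcher and server are comparable. The data layout and the name `methods_per_test_case` are hypothetical.

```python
def methods_per_test_case(test_windows, probe_log):
    """Map each test case to the methods executed inside its time window.

    test_windows: {test case id: (start time, end time)}
    probe_log:    list of (timestamp, method name) written by the probes
    """
    hits = {test_case_id: [] for test_case_id in test_windows}
    for timestamp, method in probe_log:
        for test_case_id, (start, end) in test_windows.items():
            if start <= timestamp <= end:
                hits[test_case_id].append(method)
    return hits
```

Probe records that fall outside every window are dropped, which keeps background activity of the server out of the per-test-case method lists.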

Experience with the WSDLTest Tool

The experience with WSDLTest in an eGovernment project is encouraging. There, nine different web services were tested with an average of 22 requests per service. A total of 47 different responses were verified. Of these, 19 contained at least one erroneous result. Of the more than 450 errors found in the overall project, about 23 were discovered in testing the web services 18. It is difficult to say how many errors could have been found in testing by other means. To judge the efficiency of one approach, one would have to compare it to another. What can be said is that the test was relatively cheap and did not cost more than two weeks of effort for the testers.

It seems that WSDLTest could become a suitable instrument for testing web services as long as the WSDL interfaces are not too complex. If they are too complex, the task of writing the assertions becomes too difficult and errors occur. At this point, the tester cannot be sure whether an observed error is caused by the web service or by an incorrectly formulated assertion. A similar experience was reported from the U.S. ballistic missile defense project about 30 years ago. There, about 40% of the reported errors were actually errors in the test procedures 19. If you look at the test technology used in that project by the RXVP test lab, you find that the basic test methods (setting preconditions, checking postconditions, instrumenting software, monitoring test paths, and measuring test coverage) have changed little since the 1970s. Only the environment has changed.

Requirements for future work

Testing software systems is a complex job which has yet to be fully understood. Many issues are involved, such as:

- specifying test cases

- generating test data

- monitoring test execution

- measuring test coverage

- validating test results

- tracking system errors, etc.

The tool WSDLTest addresses only two of these many issues: generating test data and validating test results. Another tool, TextAnalyzer, analyses the requirement documents to extract the functional and non-functional test cases. These abstract test cases are then stored in a test case database. It would be necessary to somehow use these test cases to generate the pre and post condition assertions. That would entail bridging the gap between the requirement specification and the test specification. The greatest barrier to achieving this is the informality of the requirement specs. The question is one of deriving formal, detailed expressions from an abstract, informal description. It is the same problem faced by the model-driven development community.

Another direction for future work is test monitoring. It would be useful to trace the path of a web service request through the system. The tool for doing that is TestDocu, also from the authors. This tool instruments the server components to record what request they are currently working on. By evaluating the trace file it is possible to monitor the execution sequence of the web services, as there are often many services involved in the processing of one request. The biggest remaining task for the future is to integrate these various test tools so that they can be used as a whole rather than individually. This means setting up a generic testing framework with a common ontology and standardized interfaces between the individual tools.

There still remains the fundamental question of the extent to which testing should be automated. Perhaps it is wiser not to automate the entire web testing process and to rely instead on the skills and creativity of the human tester. Automation often tends to hide important problems. The issue of test automation versus creative testing remains an important topic in the testing literature 20.

Conclusion

This paper has reported on a tool for supporting web service testing. The tool WSDLTest generates web service requests from the WSDL schemas and adjusts them in accordance with the pre condition assertions written by the tester. It dispatches the requests and captures the responses. After testing, it verifies the response contents against the post condition assertions composed by the tester.

The tool is still under development, but it has already been employed in an eGovernment project to expedite the testing of web services. Future work will be in the direction of linking this tool to other test tools supporting other test activities.

References

1. Krafzig, D. / Banke, K. / Slama, D.: Enterprise SOA, Coad Series, Prentice-Hall, Upper Saddle River, NJ, 2004, p. 6.

2. Juric, M.: Business Process Execution Language for Web Services, Packt Publishing, Birmingham, U.K., 2004, p. 7.

3. Sneed, H.: "Integrating legacy Software into a Service oriented Architecture", Proc. of 10th European CSMR, IEEE Computer Society Press, Bari, March 2006, p. 5.

4. Tilley, S. / Gerdes, J. / Hamilton, T. / Huang, S. / Müller, H. / Smith, D. / Wong, K.: "On the business value and technical challenges of adapting Web services", Journal of Software Maintenance and Evolution, Vol. 16, No. 1, 2004, p. 31.

5. Perry, D. / Kaiser, G.: "Adequate Testing and object-oriented Programming", Vol. 5, No. 2, Jan. 1990, pp. 13-19.

6. Nguyen, H.Q.: Testing Applications on the Web, John Wiley & Sons, New York, 2001, p. 11.

7. Musa, J. / Ackerman, A.: "Quantifying Software Validation", IEEE Software, May 1989, p. 19.

8. Berg, H. / Boebert, W. / Franta, W. / Moher, T.: Formal Methods of Program Verification and Specification, Prentice-Hall, Englewood Cliffs, 1982, p. 3.

9. Martin, R.: "The Test Bus Imperative", IEEE Software, July 2005, p. 65.

10. Editors: "Mercury Interactive simplifies functional testing", Computer Weekly, No. 15, April 2006, p. 22.

11. Editors: "Parasoft supports Web Service Testing", Computer Weekly, No. 15, April 2006, p. 24.

12. Empirix Inc.: "e-Test Suite for integrated Web Testing", www.empirix.com

13. Fewster, M. / Graham, D.: Software Test Automation, Addison-Wesley, New York, 1999, p. 248.

14. Howden, W.: Functional Program Testing, McGraw-Hill, New York, 1987, p. 123.

15. Bradley, N.: The XML Companion, Addison-Wesley, Harlow, G.B., 2000, p. 116.

16. Walsch, B.: "Understanding XSLT, DOM and when to use each", SIGS DataCom, XML-ONE Conference, Munich, July 2001, p. 101.

17. Sneed, H.: "Reverse Engineering of Test cases for selective Regression testing", Proc. of CSMR-2004, IEEE Computer Society Press, Tampere, Finland, March 2004, p. 146.

18. Sneed, H.: "Testing an eGovernment Website", Proceedings of the 7th IEEE International Symposium on Web Site Evolution (WSE2005), Budapest, Hungary, September 26, 2005, IEEE Computer Society Press, 2005, p. 3.

19. Ramamoorthy, C. / Ho, S.: "Testing Large Software with Automated Software Evaluation Systems", IEEE Transactions on Software Engineering, Vol. 1, March 1975, pp. 46-58.

20. Sneed, H. / Baumgartner, M. / Seidl, R.: Der Systemtest, Hanser Verlag, Munich/Vienna, 2006.