Application Value Assessment

Measuring, testing, and assessing the quantity, quality, and complexity of application systems provides a sound basis for IT strategy decisions. This applies particularly to legacy systems with a large code base that has grown over time and a wide variety of languages: measurement yields data and a basis for comparison that can be used to evaluate different further development scenarios (maintenance, migration, redevelopment, etc.). To do this, however, the appropriate key figures and metrics must be selected from the very large number available.

Goals of a measurement project

Typical objectives pursued by an application systems measurement project are:

- The determination of the size, complexity and quality of the code of a system

- The development of a metrics database for ongoing quality assurance

- The identification and estimation of alternative development strategies

To fulfill the first goal, the measurement of the system, the source code of the application must be structured and partitioned so that the measurement results can be compared in a meaningful way. The code can be classified, for example, by language (COBOL, PL/I, C++, etc.) or by functional area. The individual areas can then be compared with each other in terms of size, quality, and complexity, and any anomalies can be examined in more detail. Measurement is not limited to program code: databases and user interfaces can also be measured.
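The classification by language can be sketched as follows. This is a minimal illustration, not the tooling used in the projects described here: the extension-to-language mapping, the function names, and the choice of non-blank lines as the size measure are all assumptions made for the example.

```python
from collections import defaultdict
from pathlib import Path

# Hypothetical mapping from file extension to language; a real project
# would adapt this to the naming conventions of the system being measured.
EXTENSION_TO_LANGUAGE = {
    ".cbl": "COBOL",
    ".cob": "COBOL",
    ".pli": "PL/I",
    ".cpp": "C++",
    ".hpp": "C++",
}


def count_nonblank_lines(text: str) -> int:
    """Count lines that contain at least one non-whitespace character."""
    return sum(1 for line in text.splitlines() if line.strip())


def size_by_language(root: str) -> dict[str, dict[str, int]]:
    """Walk a source tree and total files and non-blank lines per language."""
    totals = defaultdict(lambda: {"files": 0, "lines": 0})
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        language = EXTENSION_TO_LANGUAGE.get(path.suffix.lower())
        if language is None:
            continue  # file type is not part of the measurement
        text = path.read_text(encoding="utf-8", errors="replace")
        totals[language]["files"] += 1
        totals[language]["lines"] += count_nonblank_lines(text)
    return dict(totals)
```

Calling `size_by_language("path/to/sources")` returns one entry per language found, which gives a first, coarse basis for comparing the areas of a system by size.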

The construction of a metrics database enables the ongoing measurement of the system over time. Thus, when changes or extensions are made to the system, it can be observed whether, for example, the overall quality has improved or deteriorated. Ideally, ongoing measurement is embedded in the quality assurance process.
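A metrics database of this kind can be very small. The sketch below uses SQLite from the Python standard library; the table layout, the column names, and the helper functions are assumptions chosen for illustration and do not describe a specific product.

```python
import sqlite3


def open_metrics_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a metrics database with one row per measured value."""
    conn = sqlite3.connect(path)
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS measurement (
            measured_on TEXT NOT NULL,  -- ISO date of the measurement run
            component   TEXT NOT NULL,  -- subsystem or component name
            metric      TEXT NOT NULL,  -- e.g. 'maintainability'
            value       REAL NOT NULL,
            PRIMARY KEY (measured_on, component, metric)
        )
        """
    )
    return conn


def record(conn, measured_on, component, metric, value):
    """Store one measured value; re-running a measurement overwrites it."""
    conn.execute(
        "INSERT OR REPLACE INTO measurement VALUES (?, ?, ?, ?)",
        (measured_on, component, metric, value),
    )
    conn.commit()


def trend(conn, component, metric):
    """Return (date, value) pairs for one metric, oldest first."""
    return conn.execute(
        "SELECT measured_on, value FROM measurement "
        "WHERE component = ? AND metric = ? ORDER BY measured_on",
        (component, metric),
    ).fetchall()


def change_since_previous(conn, component, metric):
    """Difference between the two latest measurements, or None if fewer than two."""
    history = trend(conn, component, metric)
    if len(history) < 2:
        return None
    return history[-1][1] - history[-2][1]
```

After each change or extension to the system, a new measurement run is recorded, and `change_since_previous` shows whether a given metric has risen or fallen since the last run.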

The results of the measurement provide a uniform basis for evaluating various further development scenarios and estimating their effort. This can be used as a basis for future IT strategy. Possible scenarios are:

- Annual maintenance of the system

- New development of the system

- Migration

- Redevelopment

- Encapsulation (wrapping)

Metrics selection

A prerequisite for any measurement project is the selection of the appropriate metrics. This selection is also the first step in the ISO/IEC 9126 measurement process. However, the literature provides little guidance on which metrics to select. Software systems are complex constructs with many different properties that can be measured. In his book Software Complexity Metrics, Horst Zuse alone identified more than 300 metrics. Each metric measures a different property: for example, the McCabe metric measures the complexity of the internal control flow, the Halstead metric measures language complexity, and the Function Point metric measures the interactions between a system and its environment. Ultimately, which metrics are most appropriate depends on the objective of the measurement project and the nature of the system.
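To make one of these metrics concrete: for a single routine with one entry and one exit, the McCabe metric can be computed as the number of decision points plus one. The sketch below applies this simplified counting rule to Python source using the standard `ast` module. It is only an illustration; measuring COBOL or PL/I would require a parser for that language, and the set of node types counted as decisions is an assumption of this example.

```python
import ast

# Node types counted as one decision point each (a simplifying assumption).
DECISION_NODES = (ast.If, ast.For, ast.While, ast.IfExp, ast.ExceptHandler)


def cyclomatic_complexity(source: str) -> int:
    """Approximate McCabe complexity as decision points + 1."""
    tree = ast.parse(source)
    decisions = 0
    for node in ast.walk(tree):
        if isinstance(node, DECISION_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # 'a and b and c' adds two extra branches, one per extra operand
            decisions += len(node.values) - 1
    return decisions + 1


SAMPLE = '''
def classify(n):
    if n < 0 and n != -1:
        return "negative"
    for i in range(n):
        if i % 2:
            continue
    return "done"
'''

print(cyclomatic_complexity(SAMPLE))  # two ifs, one 'and', one for: 4 + 1 = 5
```

Straight-line code without any branching yields a value of 1, and every additional branch, loop, or compound condition raises the value by one.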

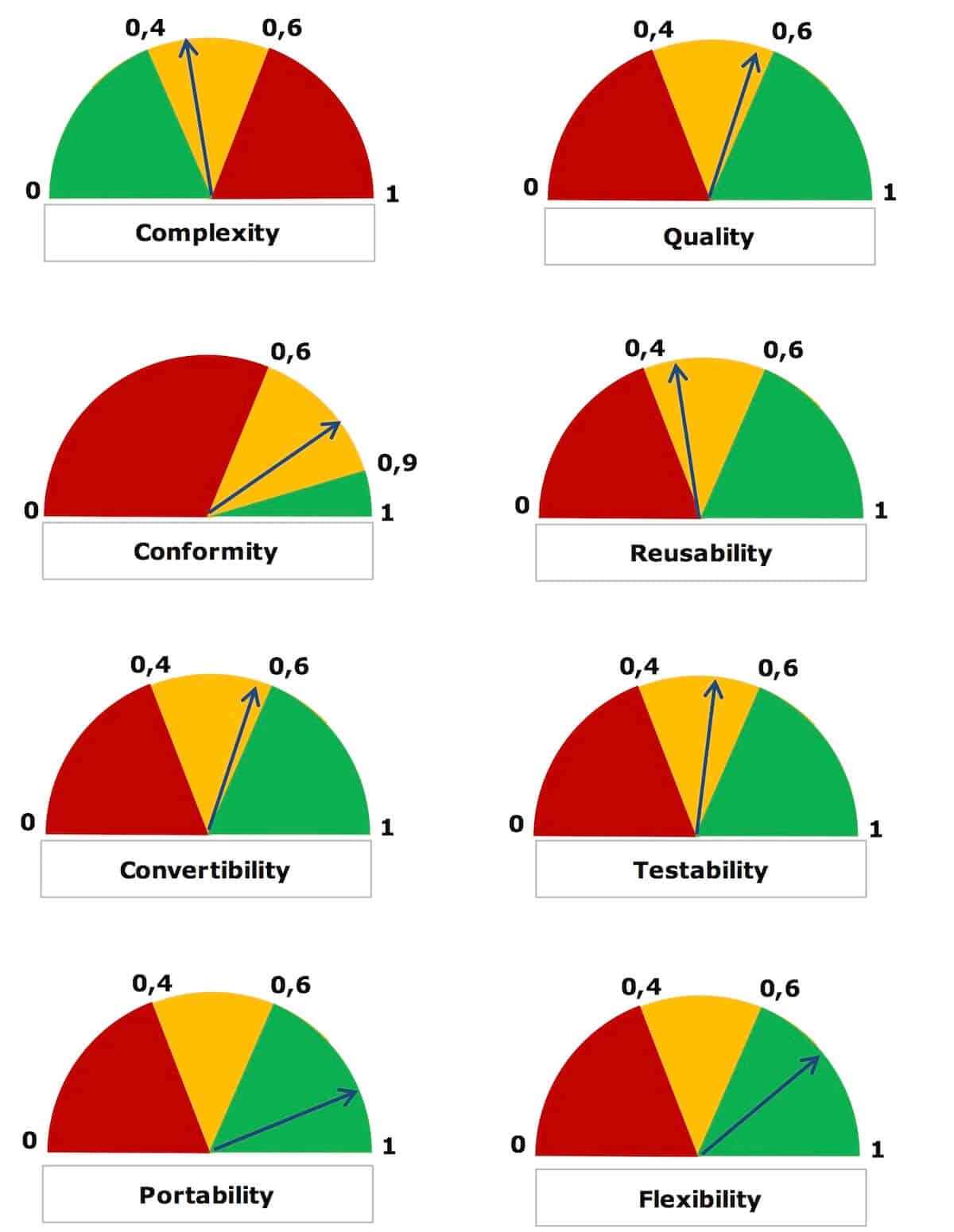

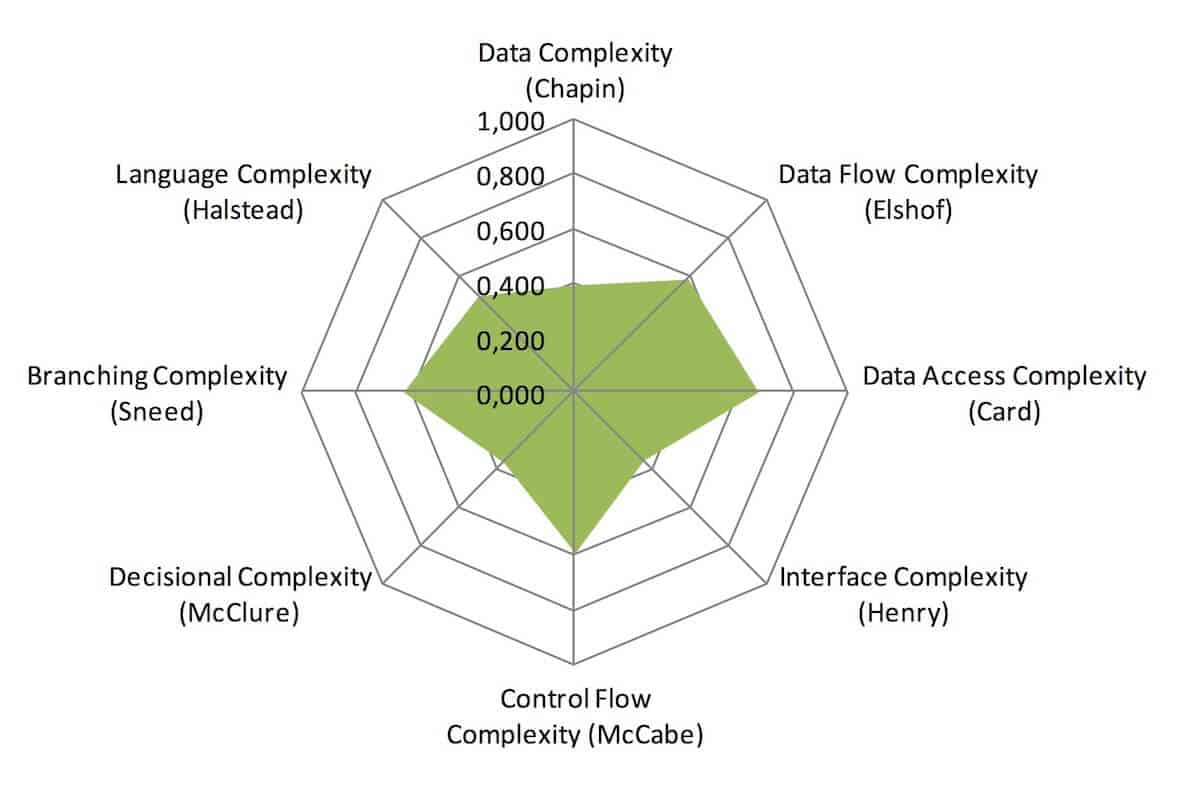

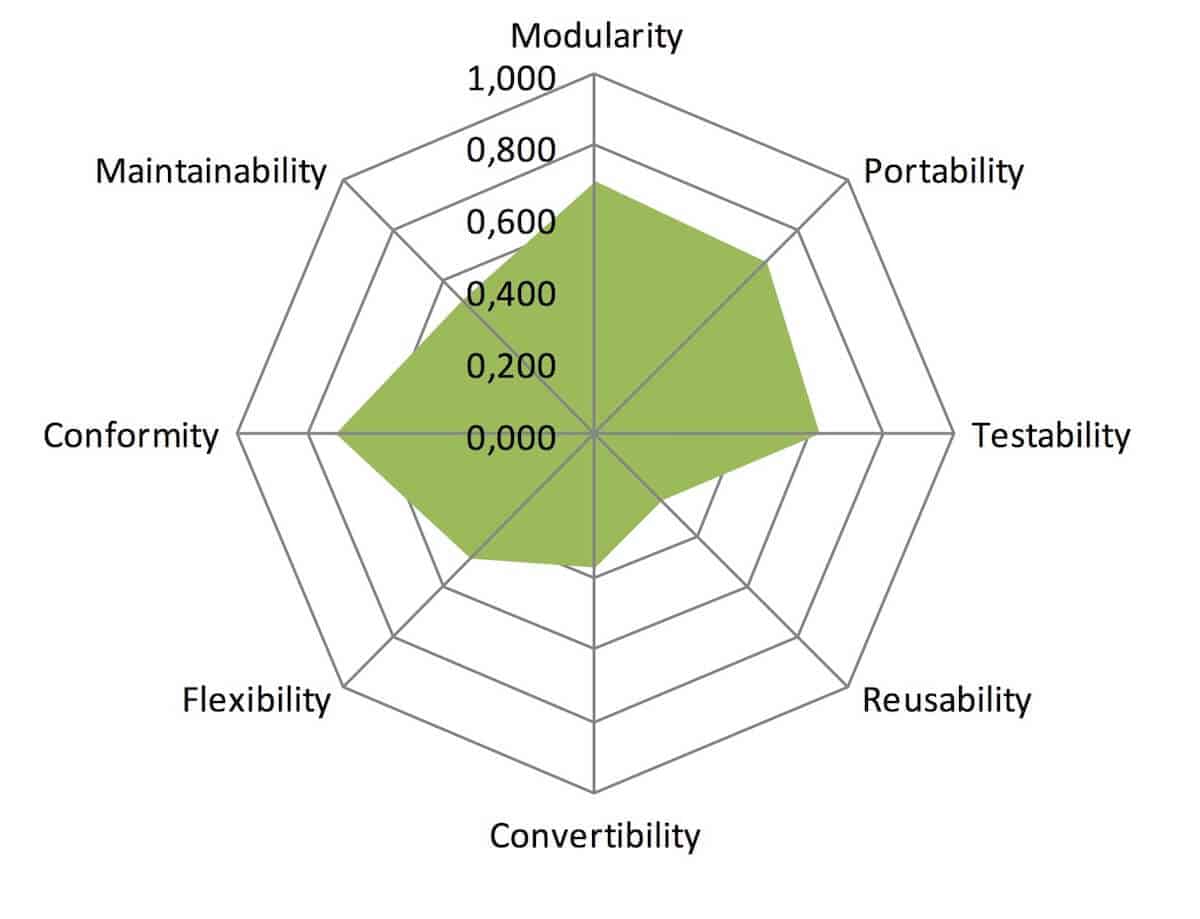

In the measurement projects carried out by one of the authors over the last 20 years, experience with a wide variety of systems has resulted in a compilation of size, complexity, and quality metrics that have proven themselves many times over in the analysis and evaluation of application systems. These include complexity metrics such as data complexity, control flow complexity, and language complexity, but also quality metrics such as those for measuring portability, maintainability, conformance, and testability.

The measurement process

The process for measuring, testing, and evaluating the system typically consists of the following steps:

- Metrics selection

- Structuring of the system to be measured

- Configuration of the tools and adaptation to local conditions (e.g., in-house language constructs)

- Carrying out the measurement

- Transfer of the measured values into a metrics database

- Evaluation of the measurement results

- Preparation of the effort estimates

Results

In addition to the metrics reports on the size, quality, and complexity of the system and its subsystems and components, and the effort estimates for the various further development strategies, evaluations can be produced that present the information for different target groups. Examples are scorecards that relate quality and complexity to each other, and management dashboards that give an up-to-date overview of the system.
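A scorecard that relates quality to complexity can be sketched as follows. The example assumes that both values have already been normalized to a scale from 0 to 1 per component; this scale, the threshold values, and all names in the code are hypothetical and would have to be set for the system in question.

```python
from dataclasses import dataclass


@dataclass
class ComponentScore:
    name: str
    complexity: float  # assumed normalized to 0..1, higher means more complex
    quality: float     # assumed normalized to 0..1, higher means better


def scorecard(scores, complexity_limit=0.6, quality_limit=0.4):
    """List components, most critical first, and flag those needing review.

    A component is flagged when it is both highly complex and of low
    quality; the two limits are illustrative defaults, not recommendations.
    """
    rows = []
    for s in sorted(scores, key=lambda s: s.quality - s.complexity):
        at_risk = s.complexity > complexity_limit and s.quality < quality_limit
        rows.append((s.name, s.complexity, s.quality, "review" if at_risk else "ok"))
    return rows


for row in scorecard([
    ComponentScore("reporting", complexity=0.3, quality=0.7),
    ComponentScore("billing", complexity=0.8, quality=0.3),
]):
    print(row)
```

The same rows could feed a management dashboard; the point of the sketch is only that quality and complexity are shown side by side per component rather than in separate reports.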