A software migration requires a different testing approach than a development project. As a rule, neither a requirements specification nor a design model exists as a basis for testing, so requirements-based and model-based test approaches are not suitable for migration projects. Instead, the legacy system can be used as a test oracle for the migration test. The decisive test criterion is then complete functional equivalence between the legacy system and the migrated system, which can only be demonstrated with special methods and tools.

There are, however, project types where test effort and testing time can be calculated and the test performed on a fixed-price basis. One such type is a well-specified, stable development project, where the product can be tested against the requirements specification. The requirements documents can be analyzed either manually or automatically by a text analysis tool to extract data (e.g. function points or test points) for the test cost estimation.

Another type is a maintenance or reengineering project, where the software is only slightly changed. Here the test effort can be predicted on the basis of the delta between the original version and the altered version.
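As a rough illustration of measuring such a delta, the following Python sketch counts the lines that were added, removed or replaced between two versions of a source member, using the standard `difflib` module. Taking changed lines as the delta measure, and normalising by the size of the original version, are assumptions made for this sketch only; a real estimate would more likely be based on changed code units or test points.

```python
import difflib

def changed_line_count(old_lines: list[str], new_lines: list[str]) -> int:
    """Count lines added, removed or replaced between two versions."""
    matcher = difflib.SequenceMatcher(a=old_lines, b=new_lines, autojunk=False)
    changed = 0
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            # A replaced block counts with the larger of its two sides.
            changed += max(i2 - i1, j2 - j1)
    return changed

def delta_ratio(old_lines: list[str], new_lines: list[str]) -> float:
    """Share of the original version that was touched (assumed measure)."""
    return changed_line_count(old_lines, new_lines) / max(len(old_lines), 1)
```

A ratio of 0.25, for example, would mean that a quarter of the original lines were touched; how such a ratio translates into test effort is left open here.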

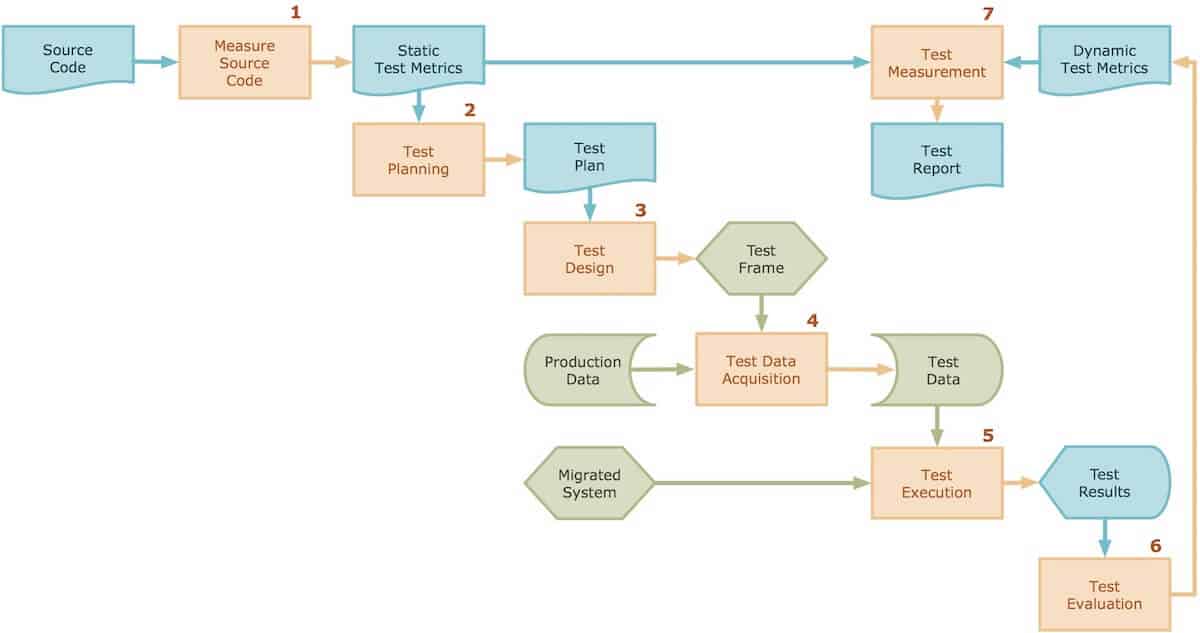

A third type is a migration project, in which the whole system is retested against the previous system. Here the basis for the cost calculation is the source code of the previous system [1].

A migration test must begin by measuring the source code to be migrated. This is necessary in order to know exactly what has to be tested and what effort will be involved. Only after the software has been measured is it possible to perform the next step, which is to plan the test.

The second step, test planning, includes setting up a budget and a time schedule as well as detailing the tasks to be performed and the results to be delivered.

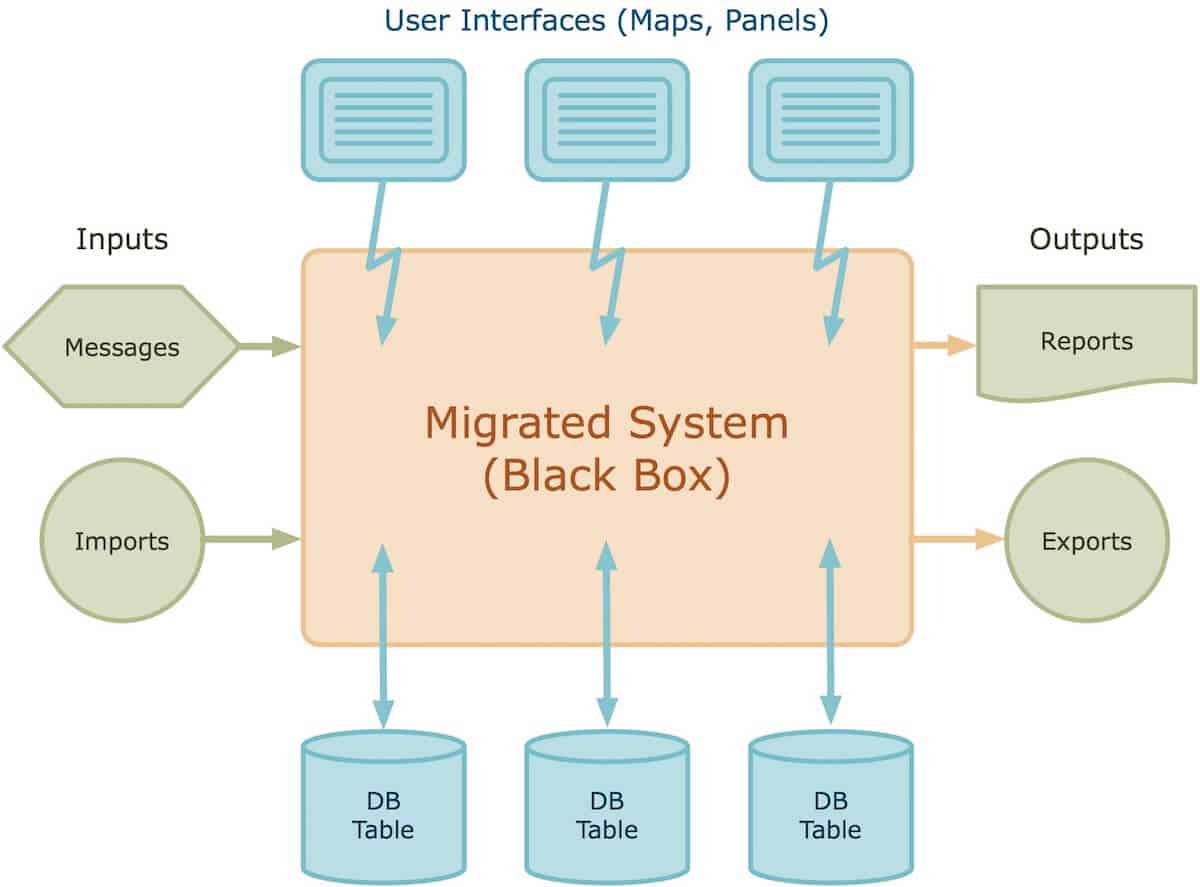

The third step is to design the migration test. Here it is important to identify each and every test object, i.e. every user interface, database table, system interface and report used by the system. The test procedure, i.e. the test steps and their sequence, also has to be defined.

The fourth step is acquiring the test data. Normally, the test data for a migration is taken from production and possibly scrambled to avoid privacy problems. Test data is acquired by executing the old system in a controlled environment and recording the inputs, the outputs and the database states. The difficulties lie in handling such large volumes of data and the many imports, exports and reports, and in recording the user interactions.
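One common way to scramble production data while keeping it usable for comparison is deterministic pseudonymisation: the same sensitive value always maps to the same replacement, so keys and cross-references stay consistent across files and tables. The sketch below uses an HMAC from the Python standard library; the key, the 12-character pseudonym length and the choice of sensitive fields are assumptions, not part of the method described here. The same scrambled data would have to be fed to both the old and the migrated system so that their outputs remain comparable.

```python
import hashlib
import hmac

# Hypothetical project secret; it must not be stored with the test archive.
SECRET_KEY = b"replace-with-project-secret"

def scramble(value: str, key: bytes = SECRET_KEY) -> str:
    """Deterministically replace a sensitive value with a pseudonym.

    Equal inputs give equal outputs, so joins between tables survive,
    but the original value cannot be read back from the pseudonym.
    """
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:12]

def scramble_record(record: dict[str, str], sensitive: set[str]) -> dict[str, str]:
    """Scramble only the fields named in `sensitive`; keep the rest as-is."""
    return {k: scramble(v) if k in sensitive else v for k, v in record.items()}
```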

In the fifth step, the migration test is executed with the data acquired in the fourth step. Since there are no detailed test cases, as there are when requirements are available, a test case is usually an online transaction or a batch process. The amount of input data that goes into such a test case can be large, which makes it difficult to specify by hand. The same applies to the outputs of the regression test cases. As a consequence, the migration test has to be automated, and test execution in a migration project is mainly a matter of handling tools.

In the sixth step, the results of the migration test are evaluated to determine whether the migrated system performs as expected, that is, exactly as the previous system did. This is determined by comparing the results of the new system with those of the old system. Any deviation is a sign of non-compliance.

In the seventh and final step, test coverage is measured to determine whether the code has been adequately covered. What counts as adequate depends on the goals set in the test plan. If the goals have been met, the test can be terminated; if not, the test has to be repeated until they are. The test goals, e.g. transaction, code and data coverage, are the basis of the test service agreement (TSA).

Code Measurement

To paraphrase Tom DeMarco, you cannot control what you cannot measure [2], and the same holds for planning. Therefore, to plan the test of a migration, you must begin by measuring what is being migrated, namely the code, since it is the code that is being transformed into another form. If the data has to be migrated as well, then the data structures also have to be measured.

The code offers several aspects that can be measured: complexity of control flow, data usage, module interactions, reusability, maintainability, conformance, and other quantities. Which of these aspects are measured depends on the information one wants to obtain from the measurement. For test planning, the relevant quantities are, for example, the number of code units, database tables and data interfaces, because these must all be considered in the test to demonstrate that the functional requirements are met. The desired metrics can be obtained from the source code, database schemas, user interface definitions and system interfaces by static analysis. The measurement results then indicate how many data interfaces and how many database tables have to be tested for each defined component. A static analysis tool can support this step by calculating the testability of the system on a rational scale from 0 to 1 [3].
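To illustrate the kind of static count involved, the following sketch extracts the database tables referenced in embedded SQL from COBOL source text with regular expressions. It is deliberately naive and rests on stated assumptions: it only looks inside `EXEC SQL ... END-EXEC` blocks, recognises table names after `FROM`, `INTO`, `UPDATE` and `JOIN`, and ignores comments, views and dynamic SQL. A real measurement tool would need a proper parser.

```python
import re

# Embedded SQL blocks in COBOL source: EXEC SQL ... END-EXEC
SQL_BLOCK = re.compile(r"EXEC\s+SQL(.*?)END-EXEC", re.IGNORECASE | re.DOTALL)
# A table name after one of these keywords; host variables (":WS-X") do not match
TABLE_REF = re.compile(r"\b(?:FROM|INTO|UPDATE|JOIN)\s+([A-Z][A-Z0-9_]*)", re.IGNORECASE)

def referenced_tables(source: str) -> set[str]:
    """Return the names of all tables referenced in embedded SQL."""
    tables: set[str] = set()
    for block in SQL_BLOCK.findall(source):
        tables.update(name.upper() for name in TABLE_REF.findall(block))
    return tables

def count_sql_statements(source: str) -> int:
    """Number of embedded SQL blocks, a rough count of data accesses."""
    return len(SQL_BLOCK.findall(source))
```

Note that the COBOL statement `SUBTRACT A FROM B` outside an SQL block is not mistaken for a table reference, because only the embedded SQL is scanned.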

Experience from past projects shows that code measurement normally takes no longer than a week, provided the appropriate measurement tools are available. Fortunately, the authors have tools that cover both old and new languages. In most migration projects, the previous system is coded in an old language such as PL/I, COBOL or C.

Planning Migration Testing

Planning migration testing entails not only setting the test goals and allocating the test resources, but also estimating the time and effort required for the migration test. Test effort here is determined by the number of test cases that have to be executed plus the number of test objects that have to be validated. A test case can be an online transaction, an event or a batch process; test cases are derived from the production usage profile. A test object can be a user interface, a file, a database table, a system interface or a report; test objects are taken from the production data. Together they add up to the test volume. Dividing this volume by the test productivity measured in previous projects gives the raw effort required. This can then be adjusted by the product type, the product testability ratio, the project influence factors and the test repetition factor [4].
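The calculation just described can be written down as a small function. The sketch below assumes that all adjustment factors are multiplicative and that the testability ratio (on the 0 to 1 scale) divides the effort, so that a less testable system costs more; the exact form of each adjustment is an assumption here, not a formula taken from the cited method. Productivity is expressed as test volume units (test cases plus test objects) per person-day.

```python
from dataclasses import dataclass

@dataclass
class EstimateInput:
    test_cases: int                # online transactions, events, batch processes
    test_objects: int              # panels, files, tables, interfaces, reports
    productivity: float            # test volume units per person-day (past projects)
    type_factor: float = 1.0       # product type adjustment (assumed multiplicative)
    testability: float = 1.0       # testability ratio on the 0..1 scale
    influence_factor: float = 1.0  # project influence factors (assumed multiplicative)
    repetitions: float = 1.0       # expected number of test repetitions

def estimate_effort(e: EstimateInput) -> float:
    """Estimated migration test effort in person-days (illustrative model)."""
    if e.productivity <= 0 or not 0 < e.testability <= 1:
        raise ValueError("productivity must be > 0 and testability in (0, 1]")
    volume = e.test_cases + e.test_objects   # test volume
    raw_effort = volume / e.productivity     # raw effort in person-days
    # Assumption: lower testability increases effort in inverse proportion.
    return raw_effort * e.type_factor * e.influence_factor * e.repetitions / e.testability
```

For example, 400 test cases and 600 test objects at a productivity of 20 units per person-day give a raw effort of 50 person-days; with a testability ratio of 0.5 and two expected test repetitions, the sketch yields 200 person-days.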

The result of test planning is a test plan according to ANSI/IEEE Standard 829, plus a test effort and test time estimate. These two results are the basis for a price offer to the user for testing the migrated system. In the offer, particular emphasis is placed on the test goals and the test end criteria, which must be met for the customer to accept the test. For that reason they must be stated in an easily measurable form, such as the ratio of test cases executed and test objects validated. The final version of the test plan can be a standard Word document with the IEEE 829 table of contents [5].

Migration Testing - Design

To design migration testing, one must consider what will be tested and how. What has to be tested is described by the test cases and the test objects. To obtain them, the testers have to analyze the current production processes. Every relevant variant of every existing transaction is a potential test case.

Unfortunately, it is not possible to rely on a legacy system’s documentation, since it is hardly ever up to date, and in many cases there is none at all. The best way to construct the test is for the tester to sit down with the end users and record how they actually use the current system. This amounts to a post-documentation of the operational profile and accounts for the biggest part of the regression test effort.

The test objects are easier to identify, since they can be observed. They are also recorded by the measurement activity. What is important is the number of data items – fields, columns, tags, widgets, etc. – that each data object has and their representative value ranges, as taken from the production data.

The how of migration test design is concerned with how the test objects are to be acquired and validated, and how the test cases are to be executed. The test design must specify where the test objects are taken from and by what means the test cases can be recorded. The test design itself should be in a structured, semi-formal format; for example, Excel tables, graphic tools and XML documents can be used.

Migration Testing - Acquiring Test Data

In contrast to testing a development project, one does not have to generate data to test a migration project. The test data can and should be taken from production. The question here is which data to take, since copying the whole production environment would be too much. The test design identifies those transactions, events and batch runs that are representative of the others. These must be executed with the existing system in a controlled environment. Before each test, the images of the user panels, the input files and the database contents are archived. After the test, the new images of the same objects, plus any additional objects such as output messages sent and reports printed, are recorded in the same way. This leads to a huge mass of data that has to be managed. In previous projects, a special server was often required just to archive all this test data.

Test tools can be very helpful here, a) in recording the user interfaces and b) in administering the many old and new images that have to be kept. A capture/replay tool is particularly useful. Where one was not available, the authors have in the past made do with recording the production user interface images with a simple screenshot and using these as a specification for testing the migrated system. With or without tool support, the main problem of data acquisition for migration testing is administering huge volumes of recorded data. Anyone planning to test a migrated system of any size must be prepared to handle this [6].

Migration Testing - Execution

What is unique about migration projects is the amount of testing relative to the other activities. When systems are developed, they are tested module by module, component by component and system by system over a long period of time. Each module has to be designed, coded and tested, and these activities are so intertwined that it is not obvious how much testing is going on. Only the system test at the end stands out as a purely testing activity.

Not so with a migration project. Here whole systems have to be tested at one time, and since most, if not all, of the code transformation is done automatically or by persons totally unfamiliar with the functionality of the system, there is no way to know where errors will occur. If something is transformed incorrectly, those doing the transformation will most likely not even notice it. The whole burden of demonstrating correctness and locating transformation errors is placed on the testers. Thus, 60 to 80% of the effort in a migration project is devoted to testing. If the test is not automated, it will be impossible to complete it in any reasonable time.

Before migration testing starts, the migrated code should be instrumented by placing probes in every branch, or at least in every method or code block, so that it can be determined afterwards what portion of the code has been tested. There are automated tools for doing this. Then the test can begin. The migrated system is bombarded with the data of the old transactions, one after the other; even if a transaction fails, the next one is started. For this, a test driver must be able to simulate the human user. After every transaction or batch process, the contents of the output panels, the output messages and the new images of the affected database tables are recorded for later evaluation, since validating them during the test would take too much time. If there is no automated means of saving the output screen contents, they should at least be saved manually as screenshots and stored for later comparison. The emphasis here is on testing as much as possible without interruption. If anomalies occur, they are recorded to be checked later. There is not much room for creative testing in a migration test [7].
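The "keep going" behaviour of such a test driver can be sketched in a few lines. In this sketch `execute` is a hypothetical adapter that submits one recorded transaction to the migrated system and returns the resulting output image as a dictionary; how it simulates the human user (screen scraping, message queues, batch submission) is not shown. Outputs are only stored, not checked, and every failure is logged as an anomaly before the next transaction starts.

```python
from typing import Callable, Iterable

def run_migration_test(
    transactions: Iterable[tuple[str, dict]],
    execute: Callable[[dict], dict],
) -> tuple[dict[str, dict], dict[str, str]]:
    """Replay recorded transactions against the migrated system.

    Returns the recorded output images per transaction id and the
    anomalies (exceptions) per transaction id, for later evaluation.
    """
    outputs: dict[str, dict] = {}
    anomalies: dict[str, str] = {}
    for tx_id, tx_input in transactions:
        try:
            outputs[tx_id] = execute(tx_input)   # record, do not validate yet
        except Exception as exc:                 # log the anomaly and carry on
            anomalies[tx_id] = repr(exc)
    return outputs, anomalies
```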

Migration Testing - Evaluation

The results of the test should be validated only after test execution is finished. For validation, the new images from the migration test are automatically compared with the images retained from the test of the old system. The new screen contents, interface data and database tables are matched one at a time with the corresponding old ones. If they are in different formats, they have to be converted to a common format for comparison.

For this purpose, one of the authors has developed several conversion and comparison tools. The old user interfaces, which may have been mainframe maps or UNIX screens, are transformed into XML documents. The new user interfaces, which may be web pages or mashups, are also converted into similar XML documents. In this way individual data elements can be compared with one another selectively, regardless of their position on the screen or how they are displayed.

The same is done with the databases. The contents of the old databases, whether IMS, IDMS, IDS, ADABAS, DB2, VSAM or sequential files, are converted to comma-separated value (CSV) files. The contents of the new relational databases are downloaded to the same CSV format. The two CSV files are then compared with each other column by column. It is not necessary to compare all the columns, and different formats or calculated values can be checked via rules and assertions.
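A minimal sketch of such a column-by-column comparison, using Python's `csv` module. It assumes both extracts share a key column (here called `ID`) and the same column names; only the columns listed in `rules` are compared, and each rule is a small function deciding whether an old and a new value are equivalent. The two rules shown (`exact` and `numeric`) are illustrative examples of the "rules and assertions" mentioned above, not the authors' tools.

```python
import csv
import io
from decimal import Decimal
from typing import Callable

Rule = Callable[[str, str], bool]

def compare_csv(old_csv: str, new_csv: str, key: str, rules: dict[str, Rule]) -> list[str]:
    """Compare two CSV extracts row by row and column by column."""
    old_rows = {r[key]: r for r in csv.DictReader(io.StringIO(old_csv))}
    new_rows = {r[key]: r for r in csv.DictReader(io.StringIO(new_csv))}
    diffs = []
    for k in sorted(old_rows.keys() | new_rows.keys()):
        if k not in old_rows or k not in new_rows:
            diffs.append(f"{key}={k}: row missing on one side")
            continue
        for col, same in rules.items():            # only the selected columns
            o, n = old_rows[k][col], new_rows[k][col]
            if not same(o, n):
                diffs.append(f"{key}={k} {col}: {o!r} != {n!r}")
    return diffs

def exact(o: str, n: str) -> bool:
    return o.strip() == n.strip()

def numeric(o: str, n: str) -> bool:
    # "0019.99" equals "19.99", but a shifted decimal point is reported.
    return Decimal(o.strip()) == Decimal(n.strip())
```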

A problem comes up in validating reports and system interfaces. The new reports or system interfaces may be in a totally different format than the old ones. Of course, the values of the individual data items should be identical: if the old billing amount was 19.99, then the new billing amount should be exactly the same. However, it could be at a quite different location on the printout or screen.

To solve this problem, tools can be used that extract individual data items from an arbitrary printout, screen or text file and place them in an XML document. The names and types of the data items are extracted from the original data description in the screen language. The basis of comparison is an XSD schema. In this way, data from an old mainframe listing can be compared with data now in XML or WSDL format.
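A minimal sketch of such an extraction, assuming a fixed-column print line and a hypothetical field layout (name, start column, length) of the kind that could be derived from the original data description. Each item is placed in an XML element named after the field, and old and new documents are then compared by element name, so the position on the page no longer matters. Validation against an XSD schema is left out, because the Python standard library has no XSD validator.

```python
import xml.etree.ElementTree as ET

# Hypothetical layout of one report line: (field name, start column, length), 0-based
LAYOUT = [("CUSTNO", 0, 6), ("NAME", 7, 10), ("AMOUNT", 18, 8)]

def report_line_to_xml(line: str, layout=LAYOUT) -> ET.Element:
    """Cut the fields out of a fixed-column print line into an <item> element."""
    item = ET.Element("item")
    for name, start, length in layout:
        ET.SubElement(item, name).text = line[start:start + length].strip()
    return item

def compare_items(old: ET.Element, new: ET.Element) -> list[str]:
    """Compare data items by element name, ignoring order and position."""
    old_vals = {child.tag: (child.text or "").strip() for child in old}
    new_vals = {child.tag: (child.text or "").strip() for child in new}
    return [
        f"{tag}: {old_vals.get(tag)!r} != {new_vals.get(tag)!r}"
        for tag in sorted(old_vals.keys() | new_vals.keys())
        if old_vals.get(tag) != new_vals.get(tag)
    ]
```

For example, the print line `004711 Smith         19.99` and the XML output `<item><AMOUNT>19.99</AMOUNT><NAME>Smith</NAME><CUSTNO>004711</CUSTNO></item>` compare as equal, even though the items appear in a different order.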

Comparing data at the elementary item level reveals even minor differences, such as a decimal point in the wrong position. When several million data outputs have to be validated, such an automated comparison is the best way to recognize errors in the migrated code. The other way is to compare the execution paths through the code, but that requires tracing the tested transactions. There are also tools for this purpose, e.g. TestDoc, which records not only the paths through the code but also the times at which the methods or procedures are executed [8].
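Once the paths of a transaction have been traced in both systems, the comparison itself is simple: walk both sequences in step and report where they first differ. The sketch assumes the code units of the migrated system have already been mapped back to the names of their counterparts in the original system (for example, Java methods to the COBOL paragraphs they were generated from); that mapping is not shown.

```python
from itertools import zip_longest

def first_divergence(old_path: list[str], new_path: list[str]):
    """Return (position, old unit, new unit) where two execution paths first
    differ, or None if they are identical. A missing step shows up as None."""
    for position, (old_unit, new_unit) in enumerate(zip_longest(old_path, new_path)):
        if old_unit != new_unit:
            return position, old_unit, new_unit
    return None
```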

Migration Testing - Measurement

Monitoring test execution is an important prerequisite for measuring the degree of code coverage. Since it is the code and not the functionality that is being converted, it is the code that has to be covered. Before test execution, probes or trace points are inserted automatically into the code. During the test, these probes record whether and when they are traversed, and this execution information is kept in a log file. After the test, the log files are processed to determine how effective the test really was.

In one report, test coverage is documented as the percentage of probes traversed, which is compared with the test coverage goal set in the test service agreement. In another report, the path of each test transaction is documented, showing which code units are executed in what order. These execution paths can be compared with those of the original system to determine where the migrated system went wrong. In a third report, the code units impacted by each transaction are recorded. If a fix is made to a particular code unit (method or procedure), this report tells you which test cases have to be repeated.
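The three reports can all be derived from a single pass over the probe log. The sketch assumes a hypothetical log format with one line per probe traversal, `<test case>;<probe id>`, written in execution order, and a list of all probes that were inserted; real instrumentation tools will use their own formats.

```python
from collections import defaultdict

def analyze_probe_log(log_lines, all_probes):
    """Build three reports from probe log lines '<test case>;<probe id>'.

    Returns the coverage percentage, the execution path of each test case
    and, for each probe, the set of test cases that traversed it.
    """
    paths = defaultdict(list)    # test case -> probe ids in execution order
    impact = defaultdict(set)    # probe id -> test cases that reached it
    for line in log_lines:
        if not line.strip():
            continue             # tolerate blank lines in the log
        test_case, probe_id = line.strip().split(";", 1)
        paths[test_case].append(probe_id)
        impact[probe_id].add(test_case)
    probes = set(all_probes)
    traversed = probes & set(impact)
    coverage = 100.0 * len(traversed) / len(probes) if probes else 0.0
    return coverage, dict(paths), dict(impact)
```

If the unit behind probe `calc` is fixed, the test cases in `impact["calc"]` are the ones to repeat.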

Testing a migrated system reveals different types of errors than testing a newly developed system. In a new system, functions are omitted, data types wrongly defined, business rules misinterpreted, results calculated incorrectly, and so on. These errors are usually obvious and easy to identify. In a migrated system, the errors are much more subtle: decimal places are lost, dates are distorted, data displacements are off by one and conditions are inverted. The results appear to be correct, but they are not. The only way to identify such errors is to compare all of the data while covering all of the code. Since it can never be known which statement was converted wrongly, every statement has to be tested. This requires the test to be repeated many times [9].

Besides measuring test coverage, it is also necessary to measure the correctness of the output data and the percentage of faults found relative to the faults predicted. This information is used to determine when to stop testing. The planned goals are anchored in the test service agreement.
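The stop decision then amounts to checking all three measures against their planned goals. In the sketch below the goal values, the way correctness is computed (matched items as a percentage of compared items) and the handling of zero predicted faults are assumptions for illustration; the actual criteria are whatever the test service agreement specifies.

```python
from dataclasses import dataclass

@dataclass
class ExitGoals:
    """Hypothetical exit criteria, as they might be written into the TSA."""
    min_coverage: float      # percent of probes traversed
    min_correctness: float   # percent of compared output items that matched
    min_fault_ratio: float   # faults found / faults predicted

def may_stop_testing(coverage: float, items_matched: int, items_compared: int,
                     faults_found: int, faults_predicted: int,
                     goals: ExitGoals) -> bool:
    """True only if every planned goal has been reached."""
    correctness = 100.0 * items_matched / items_compared if items_compared else 0.0
    # Assumption: with no faults predicted, the fault criterion counts as met.
    fault_ratio = faults_found / faults_predicted if faults_predicted else 1.0
    return (coverage >= goals.min_coverage
            and correctness >= goals.min_correctness
            and fault_ratio >= goals.min_fault_ratio)
```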

Summary

Migration testing requires a different approach than testing a newly developed system. It is much more like mass production: an enormous amount of converted code and data has to be tested blindly in a limited amount of time. To demonstrate functional equivalence, nearly all of the converted code units have to be tested with a large data sample taken from ongoing production. The huge amount of data involved and the many transactions that have to be repeated place high demands on test automation.

The authors' experience is that migration testing demands highly skilled testers with highly sophisticated testing tools. It is not so much a question of the number of testers as of the degree of test automation. Equally important is the test organization. Such a large-scale migration test has to be meticulously planned down to the lowest detail. That requires experienced test managers, whom few user organizations have. Therefore, an independent, specialized test team is necessary for a successful migration project.

1. Onoma, A., Tsai, W.-T., Suganuma, H.: "Regression Testing in an Industrial Environment", Communications of the ACM, Vol. 41, No. 5, May 1998, p. 81.

2. DeMarco, T.: Controlling Software Projects: Management, Measurement & Estimation, Yourdon Press, New York, 1982.

3. Craig, R., Jaskiel, S.: Systematic Software Testing, Artech House, Norwood, MA, 2002.

4. Koomen, T., van der Aalst, L., Broekman, B., Vroon, M.: TMap Next for Result-driven Testing, UTN Publishers, 's-Hertogenbosch, NL, 2007.

5. IEEE: ANSI/IEEE Standard 829-1998, Standard for Software Test Documentation, IEEE Computer Society Press, New York, 1998.

6. Fewster, M., Graham, D.: Software Test Automation, Addison-Wesley, Harlow, UK, 1999.

7. Black, R.: Pragmatic Software Testing, Wiley Publishing, Indianapolis, 2007.

8. Sneed, H., Baumgartner, M., Seidl, R.: Der Systemtest, Hanser Verlag, Munich/Vienna, 2008.

9. Kan, S.H.: Metrics and Models in Software Quality Engineering, Addison-Wesley, Boston, 2001.