Test automation aims to increase the efficiency of test activities. The choice of approach already matters during test design, where the following types of test automation can be distinguished:

- Capture & Replay

- Scripted

- Data-driven

- Keyword Driven

- Model-based

The demands on the test design increase from each stage to the next. Techniques such as capture & replay deliver results faster, whereas keyword-driven and model-based techniques yield more durable and scalable solutions.

Test design deserves special attention in the context of automated test execution because software under development changes more or less frequently. Such changes are triggered by changed requirements or discovered defects. When they are frequent, the effort of manually maintaining automatically executable test scripts can easily cancel out the advantage of automated test execution.

Capture & Replay

"Capture & Replay" refers to the approach of recording the manual execution of a test case (capture) and then repeating this recording as often as desired (replay). This requires test tools that can both record the actions of the tester and reproduce them at the interface of the test object. The demands on the test tool grow with the complexity of the test object and can, in extreme cases, lead to the use of test robots that, for example, add, change or remove hardware components. In the simplest and most common case, the technique is used to test a web application by operating and observing it in a web browser. For recording, the test tool only needs to store the mouse and keyboard actions on the interface, as well as the position and content of the expected reactions.

Two possibilities for this are to save the absolute coordinates of all mouse clicks or the object IDs (if available) of the clicked elements. The latter has the advantage that rearranging the UI elements does not directly require the test cases to be recorded again. One of the best known representatives for "capture & replay" tests of web applications is Selenium, which allows recording e.g. with the corresponding plugin for the Firefox browser and allows replaying these recordings on many of the most widely used browsers.
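The difference between the two recording strategies can be sketched in a few lines of Python. This is only an illustration, not how Selenium stores its recordings: `Button`, `FakeUi` and `replay` are hypothetical names, and the "user interface" is just a list of buttons with an ID and a position.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Button:
    element_id: str
    x: int
    y: int


class FakeUi:
    """Hypothetical stand-in for a user interface: a set of clickable buttons."""

    def __init__(self, buttons):
        self.buttons = list(buttons)
        self.clicked = []  # IDs of the buttons that were actually hit

    def click_at(self, x, y):
        for button in self.buttons:
            if (button.x, button.y) == (x, y):
                self.clicked.append(button.element_id)
                return
        raise LookupError(f"no element at ({x}, {y})")

    def click_id(self, element_id):
        for button in self.buttons:
            if button.element_id == element_id:
                self.clicked.append(button.element_id)
                return
        raise LookupError(f"no element with id {element_id!r}")


def replay(ui, recording):
    """Replay a recording of ("xy", (x, y)) or ("id", element_id) steps."""
    for kind, target in recording:
        if kind == "xy":
            ui.click_at(*target)
        else:
            ui.click_id(target)
    return ui.clicked


old_layout = [Button("search", 10, 10), Button("submit", 10, 40)]
new_layout = [Button("search", 10, 40), Button("submit", 10, 10)]  # buttons swapped

by_coordinates = [("xy", (10, 10)), ("xy", (10, 40))]
by_ids = [("id", "search"), ("id", "submit")]
```

Replaying `by_coordinates` against `new_layout` clicks the buttons in the wrong order, while `by_ids` still hits the intended elements. This is the reason the ID-based variant survives a rearranged layout.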

Selenium IDE as Firefox Add-on

The middle right section shows the sequence of test steps. In the first step, the base URL is opened; the upper section stores this URL, the website that serves as the starting point for the search for the testmeisterei. In the second step, the search field is activated and the value "testmeisterei" is entered. In the next step, the search is started and the tool waits until the browser has loaded the following page ("clickAndWait"). In the following step, the first entry is opened. In the last step, the content of the web page is checked by comparing the headline with the string "Quality is an attitude". As the interface indicates, the test ran without revealing an error: the correct page was found.

Selenium is a very powerful automation tool. It can also be used to implement the higher design levels, and in practice this is the usual way to use it.

The great advantage of "Capture & Replay" is that subject matter experts can create and execute test cases directly, without support from test or software experts and without much preparation or training time. The main disadvantage is the comparatively high adaptation effort. Every time the system under test changes, the affected test cases have to be re-recorded, whether the change concerns content, the order of operations or, for the variant based on absolute coordinates, even a minimal shift in the page layout. Even comparatively small changes can therefore force the majority of the test cases to be created again.

Since test case creation consists of recording manually executed test cases, each affected test case must be executed manually again. A single adaptation involves low to medium effort, depending on the complexity of the system. However, because so many changes to the test object require an adaptation, the overall test design costs are very high. This method is therefore mainly suitable when results have to be demonstrated quickly, when changes to the system are the exception rather than the rule, or as a supplement to the approaches described below.

Script-based test automation

In script-based test automation, test cases are designed in the form of executable scripts (executable test code). The test designer must therefore have programming skills and a development environment available. Such test scripts can be written in any programming language. At the unit test level, xUnit frameworks are commonly used, i.e. unit tests written in the same language as the program under test: JUnit for Java programs, CppUnit for C++ programs, and so on. At the system test level, the choice of programming language is most likely to depend on the test interface of the system under test.

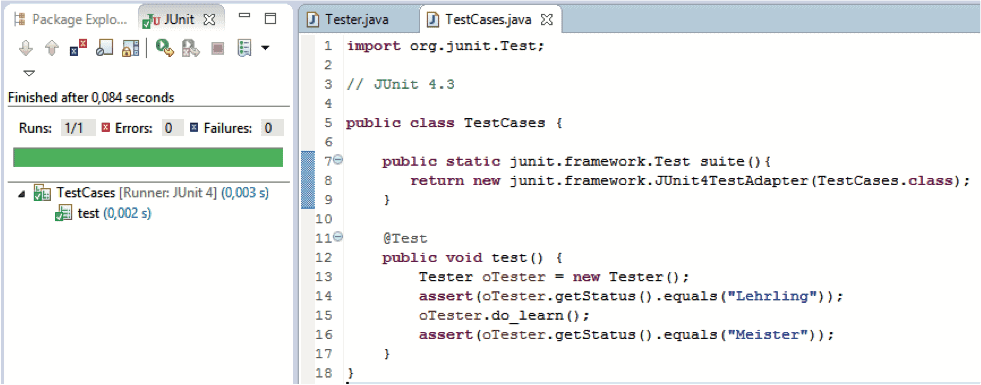

JUnit in the Eclipse development environment.

Example of the Eclipse development environment with JUnit for a test case. In this test case, the behavior of the class Tester is tested. This has a method getStatus(), which returns a string depending on the internal state. The class describes a tester that has the state "apprentice" after initialization and the state "master" after intensive learning by calling the method do_learn(). In the left area of the figure, the interface indicates that the test was executed without detecting an error.
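Since the screenshot shows a Java test, the following is only a rough Python counterpart using the standard `unittest` module, another member of the xUnit family. The `Tester` class here is a hypothetical reconstruction from the description above, and `get_status` is a Python-style spelling of `getStatus()`.

```python
import unittest


class Tester:
    """Hypothetical reconstruction of the class under test."""

    def __init__(self):
        self._status = "apprentice"  # state after initialization

    def do_learn(self):
        self._status = "master"  # state after intensive learning

    def get_status(self):
        return self._status


class TesterTest(unittest.TestCase):
    def test_initial_status_is_apprentice(self):
        self.assertEqual(Tester().get_status(), "apprentice")

    def test_status_is_master_after_learning(self):
        tester = Tester()
        tester.do_learn()
        self.assertEqual(tester.get_status(), "master")


def run_tests():
    """Run the test case programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TesterTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_tests()` plays the role of the green bar in the IDE: the returned result object reports how many tests ran and whether all of them passed.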

The advantage of script-based test design over capture & replay is primarily that the test case definition is independent of the test execution. Changes to the test design are therefore possible without re-executing the test, which is especially advantageous for more extensive tests. The main disadvantage of script-based test design is similar to that of capture & replay: for comparatively many changes, test cases have to be adapted manually. The frequency is not quite as high, though, because changes such as moving UI elements can be absorbed in the test design comparatively easily with ordinary programming techniques, for example by abstracting the concrete positions of UI elements into separate classes.
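One way to picture this abstraction is the following sketch. All names (`SearchPageLocators`, `FakeBrowser`, `SearchPage`, `run_search_scenario`) and the element IDs are assumptions made for illustration; a real script would talk to an actual browser driver instead of the fake one used here.

```python
class SearchPageLocators:
    """The only place that knows how UI elements are addressed (hypothetical IDs)."""

    SEARCH_FIELD = "q"
    SEARCH_BUTTON = "go"


class FakeBrowser:
    """Hypothetical stand-in for a browser driver; it only records the calls."""

    def __init__(self):
        self.log = []

    def type_into(self, element_id, text):
        self.log.append(("type", element_id, text))

    def click(self, element_id):
        self.log.append(("click", element_id))


class SearchPage:
    """Offers test scripts an operation in domain terms instead of raw locators."""

    def __init__(self, browser):
        self.browser = browser

    def search_for(self, term):
        self.browser.type_into(SearchPageLocators.SEARCH_FIELD, term)
        self.browser.click(SearchPageLocators.SEARCH_BUTTON)


def run_search_scenario(browser):
    """A test script that never mentions a concrete locator."""
    SearchPage(browser).search_for("testmeisterei")
    return browser.log
```

If the search button is renamed or moved, only `SearchPageLocators` changes; every script that calls `search_for` stays untouched.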

The main advantage is that the need for change does not necessarily entail re-execution of the entire test case. Now you can weigh up whether the manual adaptation of the test code without test execution is more time-consuming than the manual execution of a test case and the automatic logging as in the capture & replay approach. This depends in detail on the specific challenge. However, our experience shows that with increasing test duration or for time-critical tests, the effort for manual execution is significantly higher than the effort for adapting the test script. Furthermore, there may be additional aspects, such as the creation and use of screenshots in test cases or data to be elaborately created during test execution, which make the adaptation of the test script significantly easier than the execution of the test.

The error-proneness of manual test execution should not be neglected either. Since every operating error is recorded as well and may also put the system into an unwanted state, the recording has to be restarted from the beginning, which further increases the effort for capture & replay. Overall, in our view, the advantages of the script-based approach outweigh those of capture & replay.

Data-driven test automation

In contrast to the two previous approaches, data-driven testing focuses on the data. The basis is a test design that abstracts from concrete data, e.g. by specifying only the expected parameters, but not their values. The tester then has the task of defining suitable data. This data can be defined in any format that matches the framework used; in practice, Excel spreadsheets and SQL databases are common. One way to design such a test is to provide a table with one column per required input parameter and one column per expected output parameter. The tester then enters concrete values in the rows of the table. An adapter establishes the link to the test object, passes the input parameters and compares the actual results with the expected results.

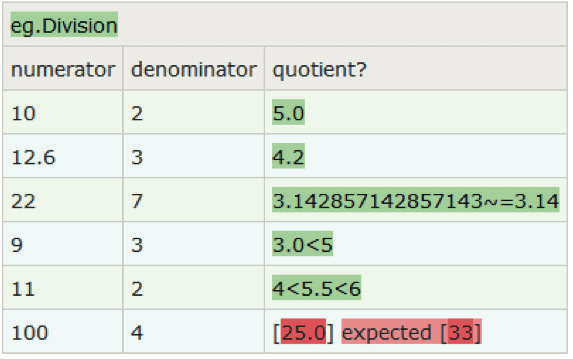

Test of the division with FitNesse

A typical example is the FitNesse test tool. FitNesse is a wiki web server that can be installed locally on the PC. This means that the data can be created directly as a table on a separate test wiki page. Alternatively, the values can also be imported from an Excel spreadsheet in edit mode using the "Spreadsheet to FitNesse" button. The top line indicates the name of the class to be tested, in our case Division. The defined table is passed by FitNesse to a test execution framework (e.g. Slim), which passes the values listed in the second line to setter methods of the same name in the Division class or retrieves the result for names with a final question mark from the Division class. Concrete values or value ranges can be specified for expected values. The test execution can be started by pressing the "Test" button. Depending on whether the actual result corresponds to the expected result or not, the corresponding line is colored green or red by FitNesse.
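The table-plus-adapter idea can be mimicked in plain Python. This sketch does not use FitNesse or Slim; the column names `numerator`, `denominator` and `quotient`, the table rows and the `run_table` adapter are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Division:
    """Hypothetical class under test with two inputs and one output."""

    numerator: float = 0.0
    denominator: float = 1.0

    def quotient(self):
        return self.numerator / self.denominator


# One row per test: two input columns and one expected-output column.
TABLE = [
    # numerator, denominator, expected quotient
    (10, 2, 5.0),
    (9, 3, 3.0),
    (1, 4, 0.25),
]


def run_table(rows):
    """Adapter: feed each row to the test object and compare actual with expected."""
    results = []
    for numerator, denominator, expected in rows:
        division = Division()
        division.numerator = numerator      # corresponds to an input column
        division.denominator = denominator  # corresponds to an input column
        actual = division.quotient()        # corresponds to the output column
        results.append((numerator, denominator, expected, actual, actual == expected))
    return results
```

The last element of each result tuple corresponds to the green or red coloring of a row. Adding a test means adding a row to `TABLE`; the adapter stays unchanged.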

Keyword-driven test automation

The two previously described approaches are based on programming languages and focus on either behavior or data. In the following, the focus is on increasing maintainability by using additional levels of abstraction.

In keyword-driven test design, abstract keywords are used instead of concrete commands. At runtime, an adaptation layer replaces them with concrete commands according to its configuration. Changes in system behavior that do not require a change in the scope of the language can thus be transferred to the corresponding test cases with comparatively little effort by swapping the keywords. The improved readability in particular is often perceived as a significant advantage. If new scenarios arise, new test cases must be written for them. New possible system actions or reactions entail an extension of the set of keywords. There are numerous tools that support keyword-driven testing, and any programmer can create an appropriate framework on their own. More powerful tools with editor support, e.g. for auto-completion, are xText and Cucumber. They allow the entire permitted language to be defined.
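A self-built framework of this kind can be very small. The following sketch is one possible shape, not the mechanism of xText or Cucumber: the keywords, the `FakeApp` test object and the `run_keywords` interpreter are all hypothetical.

```python
class FakeApp:
    """Hypothetical system under test with a tiny search feature."""

    def __init__(self):
        self.page = None
        self.query = ""
        self.headline = ""

    def open(self, url):
        self.page = url

    def enter(self, text):
        self.query = text

    def submit(self):
        self.headline = f"Results for {self.query}"


def check_headline(app, expected):
    if app.headline != expected:
        raise AssertionError(f"expected {expected!r}, got {app.headline!r}")


# Adaptation layer: maps each abstract keyword to a concrete command.
KEYWORDS = {
    "Open page": lambda app, arg: app.open(arg),
    "Enter search term": lambda app, arg: app.enter(arg),
    "Start search": lambda app, arg: app.submit(),
    "Expect headline": check_headline,
}

# The test case itself contains only keywords and arguments, no code.
TEST_CASE = [
    ("Open page", "https://example.org"),
    ("Enter search term", "testmeisterei"),
    ("Start search", None),
    ("Expect headline", "Results for testmeisterei"),
]


def run_keywords(app, steps, keywords=KEYWORDS):
    """Interpret a keyword test case step by step against the test object."""
    for keyword, argument in steps:
        keywords[keyword](app, argument)
    return app
```

If the way a search is started changes, only the entry for "Start search" in `KEYWORDS` has to be adapted; all test cases that use the keyword remain as they are.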

xText

Here is an example in xText that allows you to define testers and test cases and relate them by assigning a task to a tester regarding a test case: to design it or to execute it.

Instance of the xText language via tester

Here is an instance of this language. Here, the tester Klaus is defined as a test master with the tasks of designing and executing test case T1. This language is of course only exemplary. In the same way, operations and expected results of a test object could be related to each other and executable test cases could be derived from them.

The main advantage of this approach is the higher abstraction level, which makes the test cases comparatively easy to create and simpler to maintain. Furthermore, in many cases the test cases need no extensive adaptation at all, since they are converted into concrete commands in the adaptation layer anyway. Many changes, e.g. to the concrete interface format, can thus be made with comparatively little effort, once for all test cases, in the adaptation layer. The disadvantages include a medium level of complexity in the preparation, since both the language in which the keywords are defined and the tasks of the adaptation layer must be coordinated with all stakeholders.

From a purely technical standpoint, these results can also be achieved with the script-based approach. However, this requires extensive preparation in the form of abstraction levels that have to be created, such as wrapper classes or libraries for translating keywords into sequences of actions. It also requires discipline on the part of the testers, so that they do not leave the higher abstraction level for quick fixes and end up with test scripts that are difficult to maintain. The modeling frameworks used, on the other hand, force the testers to remain at the modeling level and thus ensure lasting, improved maintainability. In addition, frameworks such as xText can generate convenient editors from the language description alone.

Model-based test automation

Model-based test design uses models as the basis for test design. Models are abstract descriptions of arbitrary artifacts; in our case, they are used for test design. They can define the system behavior or the test behavior. They can be a separate test model or part of a joint test and development model. They can be deterministic or non-deterministic, timeless or timed, discrete, continuous, or a mixture of these. They may describe data flow, control flow, or a mixture thereof. Behavior can be continuous or event-driven. As a result, a wide variety of model types can be used for test design in many different ways.

Models can be used, for example, not only to describe individual test cases at an abstract level, but also to describe entire sets of test cases. This is achieved by defining branches, conditional statements, reactions to external events, and much more already at the model level. A model can be used to get a holistic overview of the entire system and the interdependencies between components. This helps significantly in earlier phases of system development, e.g. during the review of requirements or architecture drafts. But of course, all test-relevant information is also bundled here and the test cases are derived from their totality.

If the test cases are derived from the model automatically, there are further advantages: minor changes in the model that affect a large number of test cases can be transferred to those test cases automatically. The test generator often proceeds in such a way that certain quality targets, such as achieving a certain coverage at model level, serve as the end criterion for the test design. This means that changes involving further test steps, a changed number of parameters or a change in the expected behavior can also be adopted automatically, because the corresponding test cases are simply generated again.

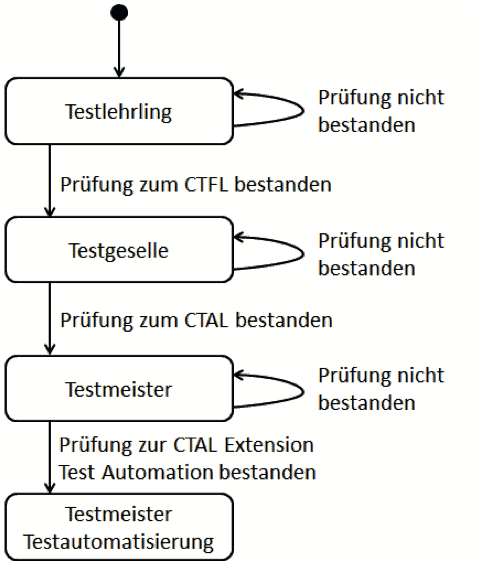

State machine for the career of testers

Here is an example of a state model. It shows possible transitions in a tester's career from test apprentice to test master. Of course, it usually takes more than passing an exam to become a test master. The notation is similar to that of UML state machines. In this state machine, each tester starts in the "test apprentice" state. Depending on whether the apprentice passes the Certified Tester Foundation Level exam, he is then managed as a journeyman tester or can retake the exam. In this way, he can work his way up step by step to test master.

If one wanted to test an application that implements the career paths described here, there would be one direct test case: the good case, in which every exam is passed on the first try. If one includes the bad cases, even this small example shows that an infinite number of paths is theoretically possible, because the model contains cycles (a failed exam can be retaken any number of times). During test generation, a test generator usually orients itself only to the structure of the model. If states are added, changed or removed in this model, the generator automatically takes them into account in a new automatic test design.
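How a generator can walk such a model is sketched below. The transition table is a simplified assumption based on the description above, not a transcription of the figure, and `generate_paths` is a hypothetical, deliberately naive generator that bounds the path length so that the cycles do not lead to endless enumeration.

```python
# Assumed, simplified model: state -> list of (event, target state).
MODEL = {
    "test apprentice": [
        ("fail exam", "test apprentice"),
        ("pass exam", "journeyman tester"),
    ],
    "journeyman tester": [
        ("fail exam", "journeyman tester"),
        ("pass exam", "test master"),
    ],
    "test master": [],
}


def generate_paths(model, start, goal, max_steps):
    """Enumerate all event sequences from start to goal with at most max_steps events."""
    paths = []

    def walk(state, events):
        if state == goal:
            paths.append(events)
            return
        if len(events) == max_steps:
            return  # bound reached: cut off this branch of the cycle
        for event, target in model[state]:
            walk(target, events + [event])

    walk(start, [])
    return paths


good_case = generate_paths(MODEL, "test apprentice", "test master", max_steps=2)
with_retakes = generate_paths(MODEL, "test apprentice", "test master", max_steps=4)
```

With a bound of two steps only the good case remains, and every additional permitted step adds further paths with retaken exams. Real generators typically replace the fixed bound with a coverage criterion, such as visiting every transition at least once.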

The main advantage over the keyword-driven approach is that the entire sequence, including possible branches, nesting, loops or parallel behavior, can now be described and used for the test design. A model-based test generator interprets the model and derives individual control-flow-based sequences from it, which are subsequently converted into a previously defined target format. Common target formats include executable test code, a human-readable variant of it, e.g. for validation, and test documentation. Accordingly, one of the advantages is that any kind of change can be handled at the model level or in the exporters of the associated test generator. The resulting test cases are then generated automatically. The high risk of having to adapt a large number of test cases manually, with the associated effort, as in the previously described variants, no longer exists here. This results in a strong increase in test efficiency. One of the disadvantages is the high preparation effort.

Comparison of test automation types

A qualitative comparison based on our experience shows the following cost structure:

| | Capture & Replay | Scripted | Data-driven | Keyword-driven | Model-based |
|---|---|---|---|---|---|
| Initial costs | very low | low | low | medium | high |
| Recurring costs | particularly high | medium | medium | low | very low |

This already shows that higher levels of test automation save costs in the long term, while lower levels are better suited to a quick assessment. The overall project conditions still need to be considered when making a choice: Which project procedure is used? Who creates the test cases? How much capacity is available?

You can find more hints for choosing the right strategy in my book Basiswissen Testautomatisierung.