As part of an agile development team, testers have a special responsibility. Among other things, they must prevent technical debt from getting out of hand.

Technical debt

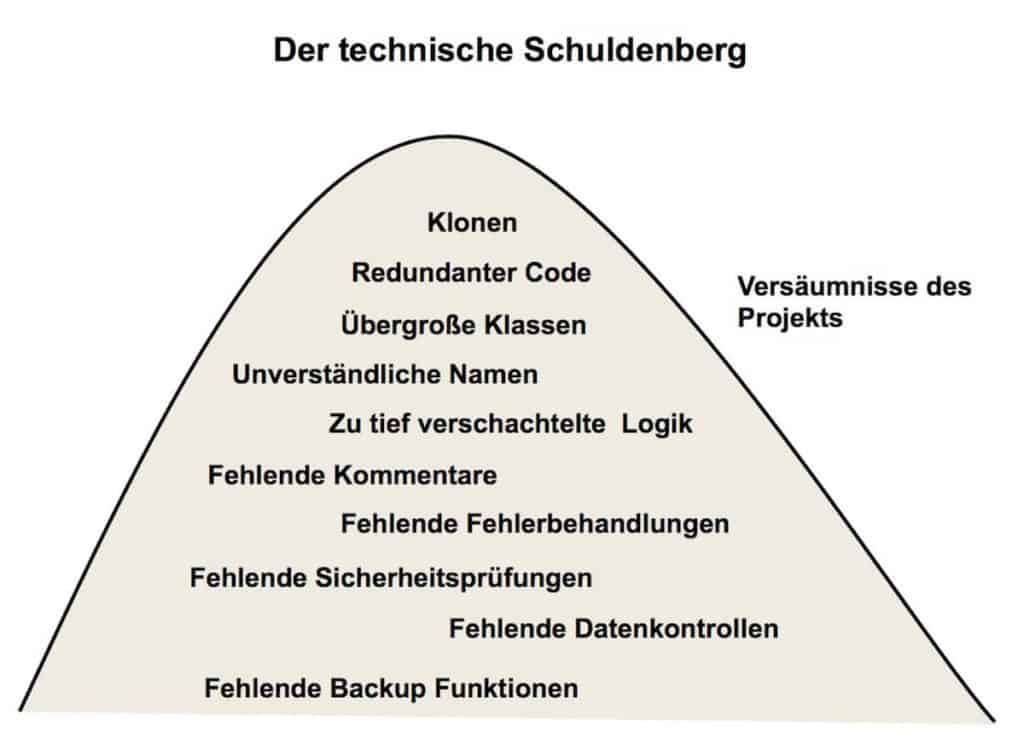

"Technical debt" is a term used to describe inadequate software. It is intended to express the problem of inadequate software quality in business categories 1. It is intended to show managers that failures in software development have negative consequences that cost them later. The term debt is a reminder that you will have to pay it off at some point. The amount of software debt of a project is measurable. It can be expressed as an absolute cost number or relative to the development cost of the project in question. The term was coined by Ward Cunningham at the OOPSLA meeting in 1992. "Technical Dept" in Cunningham's original sense is "all the not quite right code which we postpone making it right." 2.

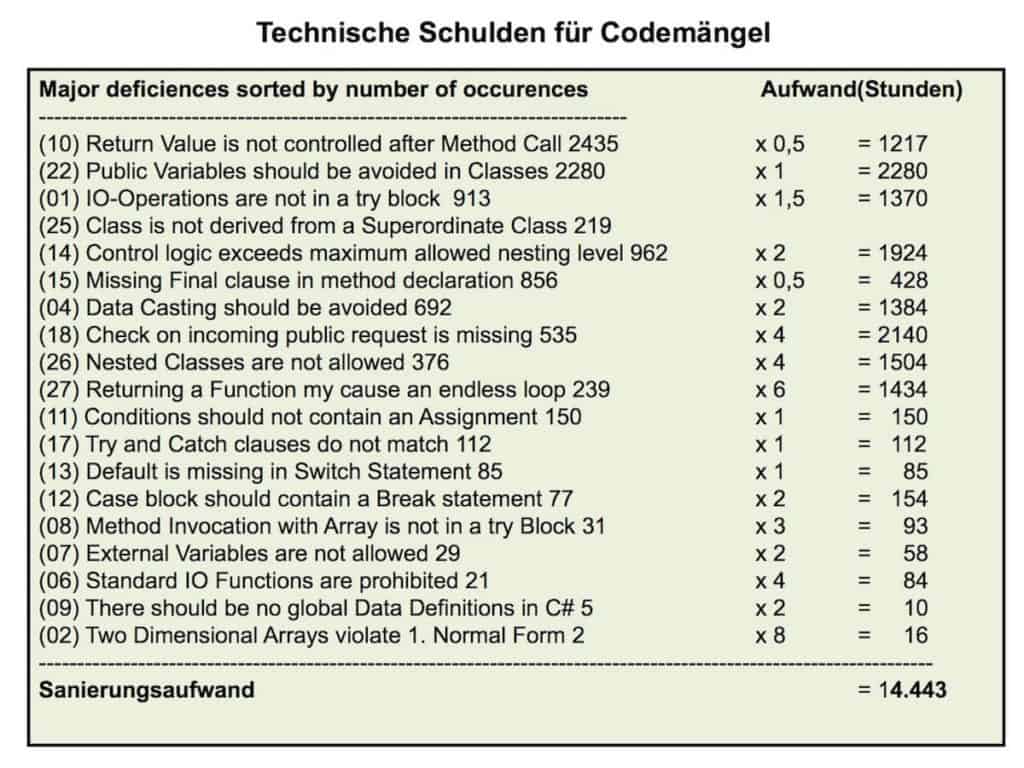

A formula for calculating debt has been proposed by employees of CAST Software Limited in Texas. The formula links problem types to effort and effort to money. It is based on a database of experience from numerous projects that shows the average effort required to eliminate software defects [3]. For example, refactoring a method may cost half a day; at an hourly rate of US$70, that comes to $280 for four hours of work. Adding a missing exception handler might cost only an hour, whereas implementing required security checks in a component could take several days. To the Texans' credit, they have identified, classified, and assigned effort figures to a number of deficiency types.

Examples of the types of defects are:

- embedded SQL queries

- empty catch blocks

- overly complex conditions

- missing comments

- redundant code blocks

- inconsistent naming

- loops without an emergency brake (no guaranteed exit condition)

- code that is nested too deeply

The debt for such code deficiencies is the number of incidents of each deficiency type multiplied by the hours needed to correct one incident and by the hourly rate, summed over all types.
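The calculation can be sketched in a few lines of Python. This is only an illustration of the multiplication described above, not the CAST formula itself. The names `DeficiencyType`, `CATALOG` and `technical_debt` are hypothetical, and the incident counts are made up. The effort values of four hours for a method refactoring and one hour for an exception handler follow the examples in the text.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DeficiencyType:
    """One class of code deficiency with its average correction effort."""
    name: str
    fix_hours: float  # average hours needed to correct a single incident


def technical_debt(incidents: dict[str, int],
                   catalog: dict[str, DeficiencyType],
                   hourly_rate: float) -> float:
    """Sum of incidents * hours per correction * hourly rate over all types."""
    return sum(count * catalog[name].fix_hours * hourly_rate
               for name, count in incidents.items())


# Effort values taken from the examples in the text (half a day = 4 hours).
CATALOG = {
    "method_refactoring": DeficiencyType("method_refactoring", 4.0),
    "missing_exception_handler": DeficiencyType("missing_exception_handler", 1.0),
}

# One method refactoring at US$70 per hour reproduces the $280 from the text.
single = technical_debt({"method_refactoring": 1}, CATALOG, 70.0)

# Hypothetical incident counts for a whole component.
total = technical_debt(
    {"method_refactoring": 3, "missing_exception_handler": 10}, CATALOG, 70.0
)
print(single, total)  # 280.0 1540.0
```

Keeping the effort catalog separate from the incident counts mirrors the idea of an experience database: the counts change with every analysis run, while the effort figures change only when new project data comes in.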

In addition to these statically identifiable defects, there are the errors that come to light during testing. Due to time constraints, not all of them are eliminated before a release, as long as they do not prevent the software from running. These include errors in the outputs, e.g. incorrectly calculated amounts and misaligned texts, as well as errors in input validation. There are also performance problems such as excessively long response times and time-outs. Users can live with such deficiencies temporarily, but at some point they become a nuisance, and by the final release at the latest they need to be fixed. The cost of fixing them counts as project debt and can be calculated from the effort involved. Bill Curtis estimates the median debt for agile projects to be $3.61 per statement [4]. This is the absolute measure of debt. It is also possible to measure technical debt relative to the cost of development.
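The two measures can be illustrated as follows. This is a rough sketch: the per-statement figure is the median quoted above, while the system size, the development cost and the function names are assumptions chosen purely for illustration.

```python
DEBT_PER_STATEMENT_USD = 3.61  # median figure quoted in the text


def absolute_debt(statements: int,
                  debt_per_statement: float = DEBT_PER_STATEMENT_USD) -> float:
    """Absolute debt: number of statements times debt per statement."""
    return statements * debt_per_statement


def relative_debt(debt: float, development_cost: float) -> float:
    """Relative debt: debt as a fraction of the development cost."""
    if development_cost <= 0:
        raise ValueError("development_cost must be positive")
    return debt / development_cost


# Hypothetical system: 100,000 statements, US$3.61 million development cost.
debt = absolute_debt(100_000)
ratio = relative_debt(debt, 3_610_000)
print(f"absolute: {debt:,.0f} USD, relative: {ratio:.1%}")
```

The relative figure makes projects of different sizes comparable, which the absolute figure alone does not.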

Timely problem detection to avoid technical debt

One advantage of agile teams is constant feedback, which is why testers are on the team. When it comes to preventing quality degradation, testers on an agile team have more to do than just test. They ensure the quality of the product on the spot, while it is being created, through a series of timely checks and balances. These include reviews of user stories, reviews of the code, acceptance of unit test results, and ongoing integration testing [5].

Reviewing stories means analyzing the story texts, adding to them and, if necessary, reworking them. Product owners often overlook something or explain it inadequately. The testers should point this out and work with the product owner to fill in the missing points and clarify the unclear passages.

When reviewing the code, testers can evaluate conformance to coding rules, compliance with architectural guidelines, and the design of the code. Many deficiencies, such as missing security checks and missing error handling, can only be detected in the code. Automated code analysis is a good way to provide this feedback rapidly. When accepting unit test results, testers have to make sure that there are enough test cases of good quality and that they achieve sufficient module test coverage. It is not necessarily the testers' job to do the unit testing themselves, although there are agile projects where this is done.
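As an illustration of what automated code analysis can look like, the following sketch uses Python's standard `ast` module to flag two of the deficiency types listed earlier: empty exception handlers and deep nesting. It is a minimal example written for this purpose, not a substitute for a real static analysis tool. The function names and the `SAMPLE` snippet are hypothetical.

```python
from __future__ import annotations

import ast

# Statement types that count as one additional nesting level.
# Note: an `elif` is parsed as a nested `if` and therefore counts as deeper.
_NESTING = (ast.If, ast.For, ast.While, ast.Try, ast.With)


def _is_noop(stmt: ast.stmt) -> bool:
    """True for `pass` or a bare `...` statement."""
    if isinstance(stmt, ast.Pass):
        return True
    return (isinstance(stmt, ast.Expr)
            and isinstance(stmt.value, ast.Constant)
            and stmt.value.value is Ellipsis)


def find_empty_handlers(source: str) -> list[int]:
    """Line numbers of `except` blocks that contain nothing but no-ops."""
    tree = ast.parse(source)
    return sorted(node.lineno for node in ast.walk(tree)
                  if isinstance(node, ast.ExceptHandler)
                  and all(_is_noop(stmt) for stmt in node.body))


def max_nesting(node: ast.AST, depth: int = 0) -> int:
    """Deepest level of nested control structures below `node`."""
    deepest = depth
    for child in ast.iter_child_nodes(node):
        child_depth = depth + 1 if isinstance(child, _NESTING) else depth
        deepest = max(deepest, max_nesting(child, child_depth))
    return deepest


SAMPLE = '''
def load(path, retries):
    for _ in range(retries):
        try:
            return open(path).read()
        except OSError:
            pass
'''

print(find_empty_handlers(SAMPLE))     # [6]
print(max_nesting(ast.parse(SAMPLE)))  # 2
```

A check like this can run on every commit, so that the developer hears about a swallowed exception within minutes rather than at the next review.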

Agile testers should always strive to give developers feedback quickly [6]. Continuous integration makes this possible [7]. The tester has an integration test framework into which the new components are placed. The previous components are already there and are supplemented daily with the new ones. Test automation naturally plays a crucial role here. With test tools, it is possible to repeat the regression test daily while also running the functional test of the newest components. Problems that occur can then be reported back to the developers immediately. This is the decisive advantage over conventional, bureaucratic quality assurance, where it often took weeks before error messages and defect reports reached the developers, which meant that valuable developer hours were lost.

In agile development, this is no longer the case; there is no such idle time. In return, testers have to keep pace with the developers at all times. As a result, testers must be well versed in the development environment, have powerful tools, and have a good rapport with the developers. If these three conditions are not met, testers will not be able to deliver the value expected of them, no matter how well they know testing techniques. Agile testing simply demands more from testers than was the case in the past.

Timely detection of problems and quick feedback to developers are the main benefits of agile testing [8], and they need to be safeguarded. They are also the reason why testers should work alongside developers. Whether they really need to be physically present is another question, and here opinions differ [9].

What's "done"?

At the Belgium Testing Days in 2012, Johanna Rothman and Lisa Crispin addressed the question of what "done" means. According to Johanna Rothman, this is a question that the whole team needs to answer. However, the testers are supposed to initiate and drive the discussion, and to feed it with arguments for better quality. Rothman claims "you have to get the team thinking about what is done. Does it mean partially done, as in it is ready for testing or fully done, as in it is ready for release?" A certain level of quality is required to ship an interim release. A completely different level is required to declare the product finally ready. There is a long way between these two states. Testers must ensure that development continues until the target state is reached, and they must convince the product owner that this is necessary. Otherwise, problems are merely deferred to maintenance, as used to be the case in conventional development projects. Rothman therefore suggests using Kanban progress boards to show the relative quality status of individual components, so that everyone can see how far each component is from the desired quality level. In effect, the team needs two progress boards, one for the feature state and one for the quality state.

The functional status of a software product is easier to assess than its quality status. Whether a function is available or not is visible. The quality status is not so easily seen. You only know how many bugs are still in the software once you have tested all of its functions. You can't judge how good the code is until you've analyzed it in detail, and you can't judge how good the overall system is until you've used it for a while. The best indicators of software quality are the number of defects found so far relative to functional test coverage, and the number of code defects relative to the number of code statements tested. There should be target values for both measures, which the testers propose and agree with the other team members. In this way, the position of each component on the Kanban board can be determined, and everyone on the team can see how far the actual state is from the target state.
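How the two indicators and their target values might be turned into a position on a quality board can be sketched as follows. The class, the field names, the scaling per 1,000 statements and all numbers are assumptions made for illustration. The text only prescribes the two ratios and the existence of agreed target values.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ComponentQuality:
    name: str
    defects_found: int          # defects found so far in functional testing
    functional_coverage: float  # share of functions tested, 0 < value <= 1
    code_defects: int           # deficiencies found by code analysis
    statements_tested: int      # statements covered by the analysis

    def defects_per_coverage(self) -> float:
        """Defects found so far relative to functional test coverage."""
        if not 0 < self.functional_coverage <= 1:
            raise ValueError("functional_coverage must be in (0, 1]")
        return self.defects_found / self.functional_coverage

    def code_defects_per_kstmt(self) -> float:
        """Code defects per 1,000 statements tested."""
        if self.statements_tested <= 0:
            raise ValueError("statements_tested must be positive")
        return self.code_defects * 1000 / self.statements_tested


def quality_gap(component: ComponentQuality,
                target_defects_per_coverage: float,
                target_code_defects_per_kstmt: float) -> dict[str, float]:
    """Distance of the actual state from the target; <= 0 means target met."""
    return {
        "defects_per_coverage":
            component.defects_per_coverage() - target_defects_per_coverage,
        "code_defects_per_kstmt":
            component.code_defects_per_kstmt() - target_code_defects_per_kstmt,
    }


# Hypothetical component and hypothetical team targets.
billing = ComponentQuality("billing", defects_found=12, functional_coverage=0.75,
                           code_defects=30, statements_tested=6000)
gap = quality_gap(billing, target_defects_per_coverage=10.0,
                  target_code_defects_per_kstmt=4.0)
print(gap)  # {'defects_per_coverage': 6.0, 'code_defects_per_kstmt': 1.0}
```

A component would count as "done" in the quality sense only when both gaps are zero or negative. Until then, the size of the gap determines how far it sits from the target column on the board.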

Lisa Crispin points out that software quality is the ultimate measure of agile development [10]. Functional progress should not be gained at the expense of software quality. After each release, i.e. every 2 to 4 weeks, quality should be measured again. If it is not adequate, it can be improved during the next release alongside functional advancement. If it is too poor, the next release must be a revision release in which the bugs are removed and the software is refactored. Crispin even allows for a separate quality assurance team that works alongside the development team, tracking the quality of the software being created and reporting back on it. This would bring back the old separation between development and testing.

Johanna Rothman says testers need to have a say in what "done" means, right from the start of the project. "To be done also means that the quality criteria set by the team are met." This requires that these criteria are accepted and practiced by everyone involved. Everyone on the team must be aware of their responsibility for quality and do their part. "Everybody in the team needs to take responsibility for quality and for keeping technical debt at a manageable level. The whole team has to make a meaningful commitment to quality." While software quality is a matter for the team as a whole, the testers on the team have a special responsibility. They must ensure that technical debt is contained and reduced.

1. Kruchten, P./Nord, R.: "Technical Debt - from Metaphor to Theory and Practice", IEEE Software, Dec. 2012, p. 18.

2. Cunningham, W.: "The WyCash Portfolio Management System", Proc. of ACM Object-Oriented Programming Systems, Languages and Applications - OOPSLA, Vancouver, 1992, p. 29.

3. Curtis, B./Sappidi, J./Szynkarski, A.: "Estimating the Principal of an Application's Technical Debt", IEEE Software, Dec. 2012, p. 34.

4. Wendehost, T.: "Source code holds a cost trap", Computerwoche, No. 10, 2013, p. 34.

5. Janzen, D./Saiedian, H.: "Test-Driven Development - Concepts, Taxonomy and Future Direction", IEEE Computer, Sept. 2005, p. 43.

6. Bloch, U.: "When integration fails to keep pace with agility", Computerwoche, No. 24, June 2011, p. 22.

7. Duvall, P./Matyas, S./Glover, A.: Continuous Integration - Improving Software Quality and Reducing Risk, Addison-Wesley, Reading, Ma., 2007.

8. Cockburn, A.: Agile Software Development, Addison-Wesley, Reading, Ma., 2002.

9. Bavani, R.: "Distributed Agile Testing and Technical Debt", IEEE Software, Dec. 2012, p. 28.

10. Crispin, L./Gregory, J.: Agile Testing - A Practical Guide for Testers and Agile Teams, Addison-Wesley-Longman, Amsterdam, 2009.