Modern IT systems are never finished and always ready

Digitalization requires continuous development, testing and delivery of the deployed software application systems. These three activities should no longer be carried out separately, but together. The users are involved in the development.

DevOps is an answer to the demand for continuous development. Modern IT systems are in principle never finished. They only ever reach a state in which part of the user requirements is satisfied, while both the requirements and the surrounding framework keep evolving. The challenge for DevOps is to configure the systems in such a way that, on the one hand, they can be constantly developed further, but on the other hand, they are always ready for use in a defined quality. Users must come to terms with the fact that the system is constantly changing, but must also be able to rely on what has been achieved while development continues [1].

Digitalization and DevOps

Digitalization is a very abstract term for the automation of all areas of human life. A formal definition reads: "The term Digitalization generally refers to the changes in processes, objects, and events that occur when digital devices are increasingly used. In its original and narrower sense, this is the creation of digital representations of physical objects, events, or analog media." [2] The prerequisite for this is the collection of all data about the domains of human life in digital format, that is, as bits and bytes. In analog data processing, data is interpreted as changing flows or changes of state. In digital processing, states are recorded as bit patterns. They can be stored and reprocessed. Digitalization gives us the ability to automate all operational applications and thus reshape them as we see fit. The problem is that automating an application is not a one-time project. Systems change regardless of their level of automation, and when they are automated, they only change faster. Once you start automating an application, you have to keep at it. There's no going back. The expertise needed to run a system manually declines in the process. As promising as it may sound, Digitalization is a pact with the devil. Automation also means dependency. Goethe's Faust sends his regards.

Goals of Digitalization

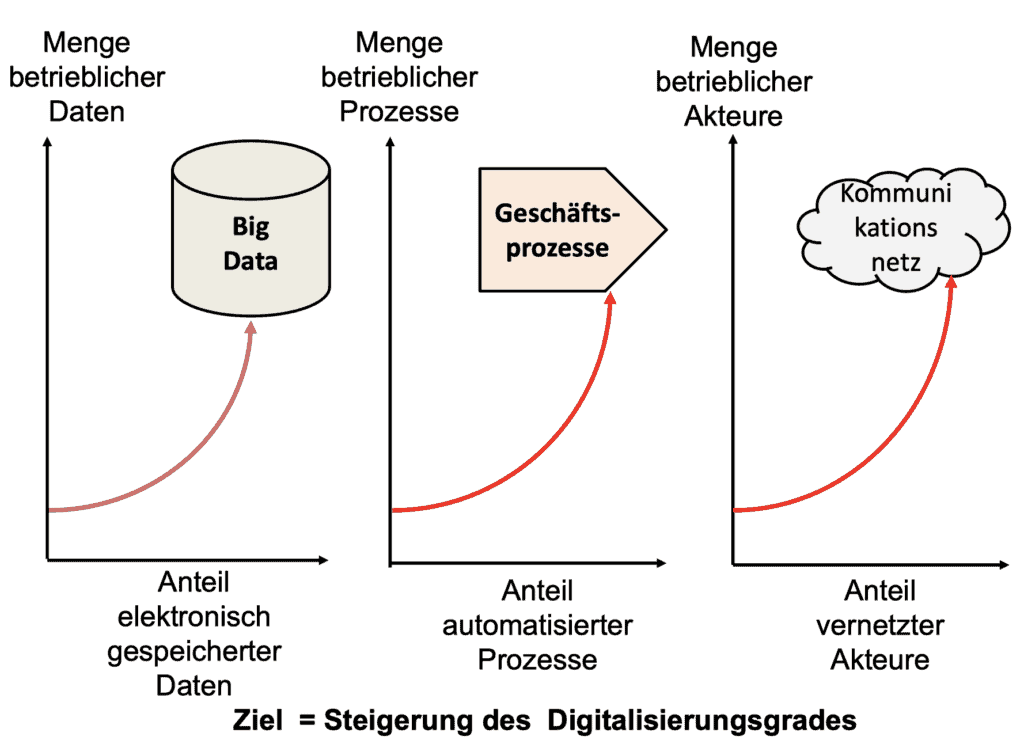

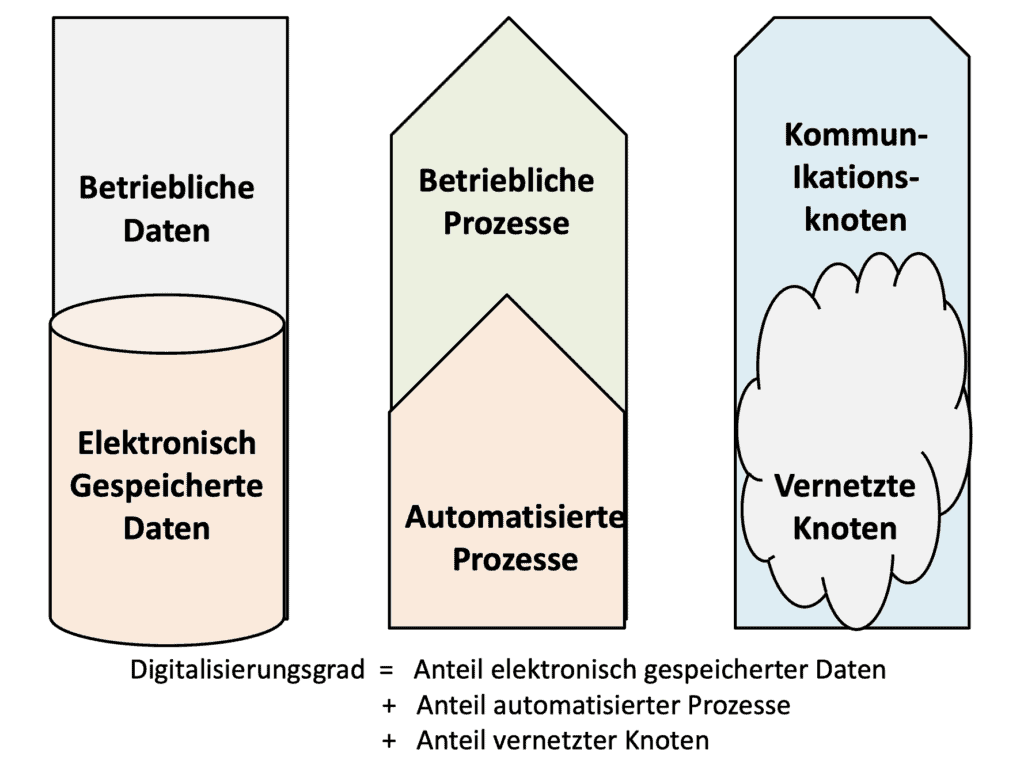

There are three main objectives in the Digitalization of an organization:

- the electronic storage of as much data as possible,

- the automation of as many processes as possible,

- the networking of as many system actors as possible.

Data is all the information that the system needs to perform its function, for example, customer data, employee data, order data, production data and storage data. Data can be on electronic storage media, on paper, or in the heads of employees. The goal of Digitalization is to have all data on electronic storage media because that is the only way to have it accessible to everyone and everything.

Processes are the operational procedures such as processing orders, planning and controlling production, warehousing, delivery and billing. Processes are executed by actors. The actors can be people or machines. In a semi-automated process, the actors are partly humans and partly machines. In a fully automated process, only machines are at work. Many processes can be fully automated. Others are only partially automated. One goal of Digitalization is to automate as much as possible.

In an organization, actors communicate with each other as well as with actors outside their own system. They are networked with each other. They can exchange data on paper, in personal conversations or via electronic messages. Devices as actors can also communicate with each other directly rather than through people. This is what the term "Internet of Things (IoT)" refers to. A business is a network of communicating nodes. One goal of Digitalization is to have as many nodes connected as possible, so that anyone can exchange data with anyone, and anything with anything [3]. In principle, Digitalization allows us to react faster to changes in the environment. Both the data and the operations on the data are recorded as bit patterns, and bit patterns can be easily overwritten. New bit patterns emerge in seconds, reshaping the meaning of the data and how it is processed. We thus have the ability to constantly change our work processes.

It all sounds so simple. We just need to capture the data electronically, program the processes and digitize the communication. But everything that is "digitized" must also be tested. Errors can occur, and the data can contain misinformation or, even worse, dangerous combinations of data. In this respect, nothing changes. Testing blocks the rapid implementation of requirements, especially when testing and repair are separate from the implementation of requirements. When a new law was passed, it used to take months for it to be implemented. This is no longer justifiable in our fast-paced age. Testing and handover must be accelerated [4].

The way to Digitalization

First, we need to understand exactly what is new about Digitalization. We have had electronic data processing for a long time, automated processes too, and broadband data transmission anyway. The large role that radio communication plays in our everyday lives is also nothing new. What is new is the combination of all this. Ultimately, a company is digitized when all its data is stored electronically, when all work processes are fully automated, and when all employees are connected to each other and to the devices.

The question is whether it is really sensible to go that far, or whether we should stop halfway. How far do we want to push Digitalization? Here again, testing sets the current limits. We can electronically store, automate, and remotely transmit only as much as we can vouch for. The data we store electronically must not be corrupted. The questions we ask of the data management system must be answered correctly. The processes we initiate must be carried out in accordance with the prevailing regulations or the applicable business rules. And the messages we send must not be altered or intercepted along the way. This means that everything we digitize must be tested and secured.

With Digitalization comes an enormous amount of testing. It starts with testing the data and continues with testing the processes and the data transmission. This effort can only be managed if we proceed systematically and if testing itself is automated as far as possible. The degree of digitization can be understood as the sum of three proportions: electronically stored data relative to all operationally relevant data, automated processes relative to all operational processes, and networked system nodes relative to all system nodes.
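The measure described above can be sketched in a few lines of Python. This is a minimal illustration only: the `Inventory` class, its field names and the sample counts are hypothetical and do not come from the article. The function returns the plain sum of the three proportions, as the text states it, so the result lies between 0.0 and 3.0.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Inventory:
    """Hypothetical counts taken from an organization's IT inventory."""
    data_elements_total: int
    data_elements_electronic: int
    processes_total: int
    processes_automated: int
    nodes_total: int
    nodes_networked: int


def proportion(part: int, whole: int) -> float:
    """Share of `part` in `whole`; rejects impossible counts."""
    if whole <= 0 or not 0 <= part <= whole:
        raise ValueError(f"invalid counts: {part} of {whole}")
    return part / whole


def degree_of_digitization(inv: Inventory) -> float:
    """Sum of the three proportions named in the text (0.0 to 3.0)."""
    return (
        proportion(inv.data_elements_electronic, inv.data_elements_total)
        + proportion(inv.processes_automated, inv.processes_total)
        + proportion(inv.nodes_networked, inv.nodes_total)
    )
```

Dividing the result by three would give a value between 0 and 1 that is easier to compare across organizations; that normalization is an assumption of this sketch, not part of the definition above.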

It may be that this measure is not relevant from a business point of view, but as a goal for IT it is usable for the time being [5]. True Digitalization, if there really is such a thing, means drastic changes to existing products and business models, that is, new systems. Whether users are ready for this or have a choice is an open question.

Electronic storage of all operational data

That we are physically capable of storing all data has not been in dispute since the advent of cloud computing. Cloud providers offer us practically unlimited storage capacity. The question is whether we are also storing useful data and not just data garbage. The data must also be accurate. No invalid values should creep in. This yields two criteria for the data:

- they are useful,

- they are correct.

To determine if the data is useful, it must be tested with all operational applications, including query and reporting systems. This is done to check which data is used and which is not. The goal of this test is to have an inventory of all data used. Whether the applications themselves are of use is for the users to decide. To determine if the data is correct, it must be checked against the rules of correctness. There are two ways to do this. One is to compare the data against each other. New data can be checked against old data, or data in one application can be checked against data in another application. The data should have identical value ranges wherever it is used.

The other way is to compare the actual values with the target values. The simplest type of this control is the visual inspection of the data by a specialist. However, this type of control is time-consuming and error-prone. The most elegant type of data control is the automatic value check against rules (assertions). The assertions specify which values the data concerned should have. They can be defined as value ranges, alternative values, calculated values, or relations to other values. The purpose is to identify and sort out invalid values.

However, this automated approach assumes that rules are specified for all data elements. Neither approach provides a 100% guarantee that the data is correct, but both help to identify a large proportion of invalid data.
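As an illustration of value checks against assertions, the following Python sketch defines one rule for each of the four rule types mentioned above (value range, alternative values, calculated value, relation to another value). The order record, its field names and the rules themselves are hypothetical; a real system would derive them from its own data specification.

```python
from typing import Any, Callable

Record = dict[str, Any]
Rule = Callable[[Record], bool]

# Hypothetical assertions for an order record, one per rule type in the text.
# Amounts are integers (cents) so that the calculated value compares exactly.
RULES: dict[str, Rule] = {
    "quantity in value range": lambda r: 1 <= r["quantity"] <= 1000,
    "status is an allowed alternative": lambda r: r["status"] in {"open", "shipped", "billed"},
    "total is the calculated value": lambda r: r["total"] == r["quantity"] * r["unit_price"],
    "ship day not before order day": lambda r: r["ship_day"] >= r["order_day"],
}


def violated_rules(record: Record) -> list[str]:
    """Names of all assertions the record fails, in rule order."""
    return [name for name, rule in RULES.items() if not rule(record)]


def sort_out(records: list[Record]) -> tuple[list[Record], list[tuple[Record, list[str]]]]:
    """Split records into valid ones and invalid ones with their failed rules."""
    valid: list[Record] = []
    invalid: list[tuple[Record, list[str]]] = []
    for record in records:
        failures = violated_rules(record)
        if failures:
            invalid.append((record, failures))
        else:
            valid.append(record)
    return valid, invalid
```

Keeping the rules in a named table means that every rejected record carries the reason for its rejection, which is what a specialist needs in order to decide whether the data or the rule is wrong.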

Despite the acceleration of the release process, the quality of the product must not be sacrificed. Quality assurance of electronically stored data and automated processes is an indispensable prerequisite for operational Digitalization. This can only succeed if the users are involved. The users must accompany the release process and immediately accept the individual steps. This means that representatives of the end users must be involved from the very beginning. They are indispensable for ensuring quality [6].

Automation of operational processes

Many processes within today's organizations are already automated, but in some cases with very old software systems - so-called legacy systems. Where the processes have a high degree of standardization, the software solutions are packaged as standard systems. This applies to a large part of the business applications. Likewise, more and more production systems, logistics systems and traffic control systems are covered by standard products. Off-the-shelf standard packages are the preferred solutions for process automation. They are less expensive, more reliable and easier to maintain. Above all, the supplier vouches for their quality and ensures their further development. Standard systems have already been tested many times and have a degree of reliability that individual solutions can rarely match. Nevertheless, many users still feel compelled to build their own customized solutions. They can start on a greenfield site and build everything from scratch, or they can start with ready-made components and assemble them into a whole. They can still develop missing building blocks themselves. The important thing is that the new application systems consist of as many prefabricated and pretested components or services as possible [7].

A service-oriented architecture with microservices is a promising way to achieve this goal. Despite advances in software technology, new developments are still associated with enormous costs and risks [8]. The cost of testing automated processes is enormous. To be considered trustworthy, every possible flow path must be tested with all possible states. In short, it can take years to adequately test large, complex processes without automation. Test automation can speed up testing, but even automated testing has its limits. Humans still have to assign the test data, and only the subject matter expert can specify the expected results. Once the test of a new application has proven itself, it can be automatically mutated and repeated, but it has to get that far first.

Therefore, testing remains the main obstacle to automating processes. The required software is quickly written or generated, but until it is confirmed as a complete and reliable solution in all facets, it can take a long time and cost a lot. As long as humans develop the software, it will be developed incorrectly. Faulty software makes it risky to automate operational processes. The risks of automated processes - like the risks of electronically stored data - are reduced by testing.

The quality of new software can also be determined by static analysis, but only dynamic testing reveals whether the automated process really behaves as it should. Anything that helps to provide this proof faster and cheaper is to be welcomed, but the fastest and cheapest solution remains the use of already proven software, either as a whole or as individual building blocks [9].

Networking of all network nodes

The third pillar of Digitalization, in addition to electronically stored data and automated processes, is the omnipresent exchange of data. Information obtained must be transmitted everywhere and at all times, from the sensors on the equipment, from the monitors that oversee the automated processes, from the data storage devices and from the people who record data. All elements of a system, the people as well as the machines, must be interconnected. There is human-to-human, human-to-machine and machine-to-machine communication - see also "Internet of Things".

However, the communication must work properly. Software can monitor itself, but to do so it must be able to send and receive messages. The exchange of digitized messages is the glue that holds a digital environment together. But it must be trouble-free and secure.

A secure communication is one where everything that is sent is received. What is sent must not be intercepted, transcribed or listened to en route. Messages that are transmitted must remain sealed. Only the intended recipient may open them. This applies to people as well as devices.

Increasingly, devices and software components are sending messages to each other. The sender of a message - whether human, hardware device or software service - must be certain that the data sent actually arrives at its destination unchanged, and the receiver must be certain that the data received has not been misused, manipulated or intercepted en route. This can only be guaranteed via a secure communication network. Therefore, data transmission must also be tested. We need test tools to generate and send messages, tools to receive and validate messages, and tools to track messages as they travel through the network. These tools need to detect when and where messages are intercepted. Communication testing ensures that data transmission is working properly.
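One building block of such a test tool is a check that a message arrives unchanged. The article does not name a mechanism; the Python sketch below assumes a keyed message authentication code (HMAC-SHA256 from the standard library) and a key shared by sender and receiver. It detects manipulation in transit but not eavesdropping, which would additionally require encryption. The function names are hypothetical.

```python
import hashlib
import hmac


def seal(key: bytes, payload: bytes) -> bytes:
    """Return the HMAC-SHA256 tag the sender attaches to the payload."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def arrived_unchanged(key: bytes, payload: bytes, tag: bytes) -> bool:
    """Receiver-side check; compare_digest compares in constant time."""
    return hmac.compare_digest(seal(key, payload), tag)
```

A communication test can send a sealed message, alter a single byte on the simulated route and confirm that the receiver rejects the altered copy while accepting the original.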

The monitoring of data transmission plays a special role in the testing of digitized operations. This is because it is not only a matter of the correctness of message transmission, but also of its security. Interventions in the communication network must be simulated in order to test how the network reacts to them. Interception, redirection and unauthorized use of messages must be ruled out. The communication network can only be considered secure if all these simulated attacks are detected and rejected. This includes attempts by unauthorized persons to break into the network. Of course, all intrusion possibilities must be tested. The security of messaging is an indispensable precondition of Digitalization.

Here it becomes apparent how much effort is involved in testing communication. Anyone who is serious about Digitalization must be prepared to pay a high price for quality. Otherwise, they will always remain vulnerable.

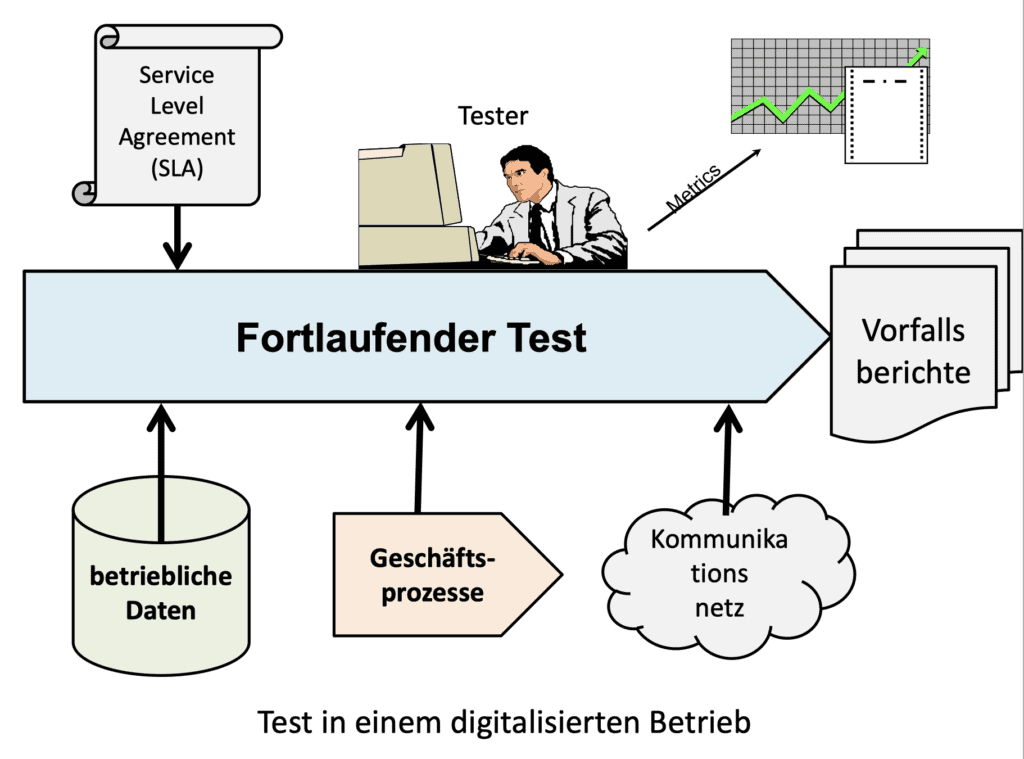

Digitalization requires continuous testing

To meet the challenge of Digitalization, we need to look at how we cope with testing.

Because there is so much to test in such a short time, test automation needs to be pushed. But that alone is not enough. Users must be involved in the test. They must not wait on the sidelines until the test is finally declared complete. In fact, the users are responsible for the test. After all, only the end users can decide if the test is sufficient for use. They bear the risk if something goes wrong. Of course, they are advised by experts, but only the end user can decide whether a product is ready for use.

The user is also responsible for checking the data. He must know what state the data is in and whether this state is sufficient for operation. This means that the data sets must be continuously monitored by automated checks and even the slightest deviations from the target must be reported. The monitoring of the data continues for as long as the data is in use. The same applies to the automated processes.

Since the processes are constantly being developed, they must also be constantly tested. The test is never over. With every change, no matter how small, the software must be retested. Like the data state, the process flows must be permanently monitored and any deviation from the target behavior must be registered. Finally, all communication processes must be tracked and all messages checked. Messages that deviate from the norm are to be sorted out for closer examination. The content of every message must be checked against the specification of that message and any deviation reported.
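Checking a message against its specification can be sketched as follows. The specification format (field name mapped to a required Python type) and the sensor message are hypothetical simplifications; in practice the specification might be a schema language or an interface definition.

```python
from typing import Any

# Hypothetical message specification: field name -> required Python type.
SENSOR_MESSAGE_SPEC: dict[str, type] = {
    "sensor_id": str,
    "timestamp": int,
    "temperature_c": float,
}


def deviations(message: dict[str, Any], spec: dict[str, type]) -> list[str]:
    """List every way in which the message departs from the specification."""
    found: list[str] = []
    for field, expected in spec.items():
        if field not in message:
            found.append(f"missing field: {field}")
        elif not isinstance(message[field], expected):
            found.append(f"wrong type for {field}: {type(message[field]).__name__}")
    # Fields the specification does not know are reported as well.
    for field in sorted(message.keys() - spec.keys()):
        found.append(f"unexpected field: {field}")
    return found
```

A monitor would call `deviations` for every message it tracks, pass messages with an empty result through and set the others aside, together with the reported deviations, for closer examination.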

Digitalization requires products instead of projects

Anyone who dares to enter the digitization arena must say goodbye to project thinking. Projects are one-off activities, limited in time and cost, with a beginning and an end [10]. In a digitized world, there should no longer be any time-limited projects, only products, i.e. systems, which are continuously developed, tested and delivered.

Testing takes place as part of the operational handover. Until now, there was always a separation between development, testing and acceptance. There were walls between the activities. In DevOps, the activities flow into each other.

Exploratory testing and actual use can hardly be separated from each other. The end user is both tester and user. When errors occur, he tries to work around them. They are reported in any case, but they must not stop the system from going into operation, as long as they do not prevent further operation.

DevOps promises to be the solution

For this problem, DevOps promises to be the solution. As more and more software systems pursue a moving target, they must never be considered finished products. They remain in development for life. They only ever cover a subset of the requirements, a subset that gets smaller and smaller over time. Initially, the system might cover over 90 percent of digitization requirements, but over time, that percentage will drop to 80 percent and continue downward. Software systems have always depreciated over time. Belady and Lehman recognized this phenomenon as early as the 1970s and captured it in their laws of software evolution [11].

A software system is a representation of a certain application, for example, travel booking. It becomes obsolete to the extent that it fails to keep pace with that application, that is, the travel provider wants to offer more and more services that are not covered by the software. The software falls further and further behind and is of less and less value to the user of the system.

At the time Belady and Lehman promulgated their laws of software evolution, the rate of change was still manageable with conventional change management. New requirements remained below 5 percent of total requirements. For the requirements that were not covered or inadequately covered, users submitted change requests. These were prioritized and included in the planning of the next releases. Sooner or later, they were implemented. Later, the change rate for more and more systems rose above 10 percent annually. Conventional change management soon reached its limits. The functionality of the software fell further and further behind. The pressure on IT increased more and more.

Now, in the course of Digitalization, requirement change rates exceed 20 percent. Conventional change management is completely overwhelmed. For more and more applications, it is no longer possible to go through a lengthy testing process before they are released. They have to be released earlier, even if they have not been sufficiently tested. The remaining errors have to be removed during production. The damage caused by the errors is less than the damage caused by the delay in delivery. The main thing is that the delivered systems remain under the control of product management. The errors and other defects in the system are all noted and monitored. Sooner or later they will be fixed, but they must never be the reason for not delivering a release. Continuous delivery is the priority.
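The effect of a rising change rate on requirements coverage can be illustrated with a small calculation. The model below is an assumption made for this sketch and not taken from Belady and Lehman or from the article: each year the set of requirements grows by `change_rate`, and the team delivers coverage for a share `delivery_rate` of the previous year's requirements. All names and parameters are hypothetical.

```python
def coverage_after(years: int, initial_coverage: float,
                   change_rate: float, delivery_rate: float) -> float:
    """Share of requirements covered after `years` under the assumed model."""
    total = 1.0                  # all requirements at the start, normalized
    covered = initial_coverage   # part of them the system satisfies
    for _ in range(years):
        new_requirements = total * change_rate
        delivered = total * delivery_rate
        total += new_requirements
        # Coverage can never exceed the requirements that exist.
        covered = min(total, covered + delivered)
    return covered / total
```

With a fixed delivery capacity, coverage holds up reasonably well at a change rate of 5 percent but erodes quickly at 20 percent, which is the situation in which conventional change management is overwhelmed.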

Example of Continuous Delivery in use

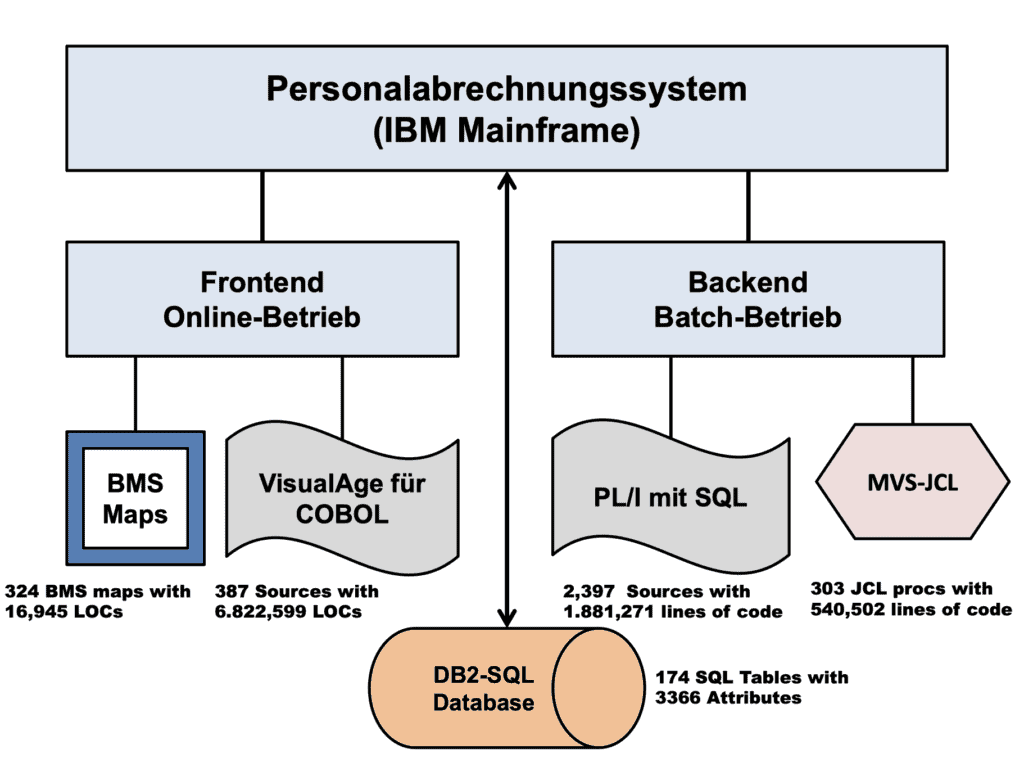

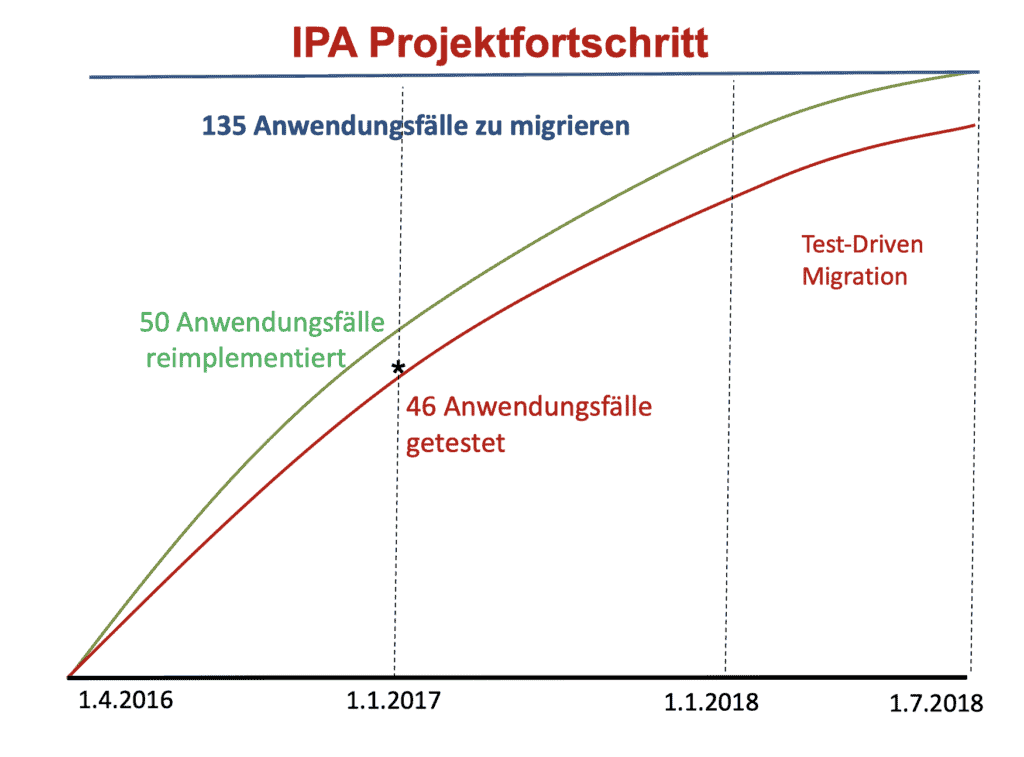

One application where Continuous Delivery is practiced is the payroll system of the states of Upper Austria and Burgenland. The clerks in the HR department there are also responsible for testing and delivery. The system, with a VisualAge frontend and a PL/I backend, is being reimplemented piece by piece in Java. The old environment is the same as the test environment.

For the past year and a half, the frontend has been re-implemented component by component in Java. One component after the other is taken out of the old system and rewritten in Java. Then it is handed over to the responsible clerks, who compare the behavior of the new component with that of the old one.

The clerks test the new Java components alongside their daily work. If deviations occur, they are reported to the developers, who correct them immediately. Until that happens, the end users work with the old component. The current system is a mixture of new and old components.
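The comparison of an old component with its replacement can be supported by a simple side-by-side run. The sketch below is written in Python for brevity, although the system described is implemented in VisualAge, PL/I and Java; the function name and the idea of feeding both components the same cases are illustrative assumptions, not a description of the tooling actually used there.

```python
from typing import Callable, Iterable, TypeVar

Case = TypeVar("Case")
Result = TypeVar("Result")


def compare_components(
    old: Callable[[Case], Result],
    new: Callable[[Case], Result],
    cases: Iterable[Case],
) -> list[tuple[Case, Result, Result]]:
    """Run both components on the same cases and collect every deviation.

    Each entry is (case, result of old component, result of new component).
    """
    found = []
    for case in cases:
        expected, actual = old(case), new(case)
        if expected != actual:
            found.append((case, expected, actual))
    return found
```

An empty result means the new component behaved like the old one for all supplied cases; any entry is a deviation to report to the developers, and until it is corrected the old component stays in use.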

By the beginning of 2017, a third of the use cases had been replaced in this way. By the end of 2018, all frontend components should be in Java. Until that happens, the VisualAge and Java components will run side by side in the same environment. The decisive factor is continuity of service.

Summary

The Digitalization of companies requires that their application systems are subject to permanent change. They must constantly evolve to keep pace with changes in the real world. Development, testing and delivery take place side by side. This requires other forms of organization. Not all applications lend themselves to a DevOps organization - but for more and more front office applications, "time to market" takes precedence over quality. For these applications, DevOps is the right solution.

Co-author: Harry Sneed. The article was published in the 01/2018 issue of OBJEKTspektrum.

1. H. Sneed, R. Seidl, Software Evolution, dpunkt.verlag, 2013

2. https://de.wikipedia.org/wiki/Digitalisierung

3. N. Carr, The Big Switch - Cloud Computing and the Networking of the World, MitP Verlag, 2009

4. J. Humble, Continuous Delivery - Reliable Software Releases through Build, Test and Deployment Automation, Addison-Wesley, Fowler Books, 2010

5. H. Sneed, R. Seidl, M. Baumgartner, Software in Zahlen, Hanser Verlag, 2011

6. St. Kaps, Quality Assurance Measures in Continuous Delivery: Part 1: Activities in Support of ISO 25010, in: OBJEKTspektrum, 03/2015

7. D. Ardagna et al., A Service-based Framework for Flexible Business Processes, in: IEEE Software, March 2011, p. 61

8. M. Poppendieck, T. Poppendieck, Implementing Lean Software Development, Addison-Wesley, 2006

9. L. Crispin, J. Gregory, Agile Testing - A Practical Guide for Testers and Agile Teams, Addison-Wesley-Longman, 2009

10. E. Wolff, Continuous Delivery - der pragmatische Einstieg, dpunkt.verlag, 2015

11. L. Belady, M. Lehman, Laws of Software Evolution, in: Software Evolution, Academic Press, 1985