This article deals with the goals of object orientation and examines the extent to which they have actually been achieved. The first protagonists of object technology set very high goals, goals that can be measured only to a limited extent. They wanted to multiply productivity, reduce maintenance costs to almost zero, promote reuse, and ensure software portability. As quite a few empirical studies have shown, these goals have been achieved only in part. Like all IT technologies, object technology has been "oversold." Nevertheless, it has also had many positive effects on software development, especially on reuse and portability, so moving in this direction was ultimately worthwhile. But we must not be satisfied with that. The search for the Holy Grail of software development continues.

Object technology goals

If we are to form a judgment about the success of object technology, we must measure the technology against its own goals. According to the early protagonists of the movement, the most important arguments for adopting object-oriented methods and programming languages are the following [1]:

- Increase in productivity

- Reduction of maintenance costs

- Increase in reusability

- Improvement in portability

This article deals with these four goals and examines to what extent object technology - meaning OO analysis, OO design, OO programming, and OO testing - has come closer to achieving each of them. Has object orientation delivered what it promised? At the end, after examining each of these goals, we return to this question and attempt to provide an answer.

To increase productivity

According to Tom Love, founder of Productivity Products International Corporation, object orientation would bring major productivity gains. He wrote in Datamation magazine in May 1987:

"Structured programming was only a small help. It provided only 10-15% improvements ... [What we need are improvements] of 10 to 15 times ... Object oriented programming stands ready to provide such needed improvements because it offers a radically different, more intuitive way of conceptualizing and building systems ..." [2]

At the first OOPSLA conference in Portland, Oregon, in 1986, more than 1,000 supporters of the new OO development method turned up. All of them were firmly convinced that it would bring about a revolution in software development. All the problems that developers had been struggling with for years would vanish into thin air. It would be possible to move effortlessly from the analysis of requirements to the design of the architecture to the creation of the code. The old semantic barriers between levels of abstraction would disappear. Not only would the path from requirements to code be much faster, the resulting code would also contain far fewer errors than before, which meant less testing effort. Leading software technologists of the time were convinced of this [3].

Positive features

One of the strongest proponents of object technology was David Thomas of Carleton University in Canada. He was involved in the development of the Smalltalk language and was convinced that object orientation would bring a significant increase in software productivity. He wrote:

"We have been convinced since 1975 that the object-oriented approach to software development is the best of all known techniques. It will revolutionize programming." [4]

By unifying data with the procedures that process it, object technology was to eliminate all the problems that arise from separating data from algorithms. Consequently, complex systems would be much easier to construct from the combined building blocks (data + algorithm), and the developer would have more ways to combine them. According to Tom Love, the separation of data from procedures was one of the biggest obstacles to productivity in earlier software development, and eliminating it was a major accomplishment of object technology. Encapsulating data and functions in independent objects allows these objects to be invoked and relocated independently. The objects react only to messages from outside: they receive predefined requests and deliver agreed results. How the results are produced remains hidden from the outside; this is information hiding. External procedures thus have no direct access to the encapsulated data and functions; instead, they use a well-defined interface that regulates data exchange. This property allows large systems to be assembled from many small building blocks. If a large share of the building blocks is prefabricated, a correspondingly large share of the development effort is eliminated.
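To make encapsulation and information hiding concrete, here is a minimal sketch in Python, chosen only for brevity. The class `Account` and its methods are hypothetical and not taken from any system mentioned in this article. Callers send messages (method calls) and receive agreed results, while the balance itself stays inside the object. Python enforces this only by convention (the leading underscore), whereas Java or C++ would use `private`.

```python
class Account:
    """Hypothetical account object: its state is reachable only through messages."""

    def __init__(self, owner: str) -> None:
        self._owner = owner
        self._balance_cents = 0  # encapsulated state (private by convention)

    def deposit(self, amount_cents: int) -> None:
        """Accept a deposit; reject non-positive amounts."""
        if amount_cents <= 0:
            raise ValueError("deposit must be positive")
        self._balance_cents += amount_cents

    def withdraw(self, amount_cents: int) -> bool:
        """Return True if the withdrawal was carried out, False otherwise.

        How the decision is made stays hidden from the caller.
        """
        if 0 < amount_cents <= self._balance_cents:
            self._balance_cents -= amount_cents
            return True
        return False

    def balance(self) -> int:
        """Report the balance without exposing the attribute itself."""
        return self._balance_cents


acct = Account("Smith")
acct.deposit(10_000)
print(acct.withdraw(2_500))   # True
print(acct.withdraw(50_000))  # False: exceeds the balance
print(acct.balance())         # 7500
```

If the internal representation changed, for example to a list of bookings, callers of `deposit`, `withdraw`, and `balance` would not need to change.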

Another productivity-driving factor is inheritance. Both data and functions are programmed at a higher level of abstraction and passed down to lower levels, where they can also be modified. This saves the developers of the lower-level code units the effort of programming this data and these functions from scratch.

Such redundant coding was another obstacle to productivity in earlier structured software development. More than 50 percent of the code was redundant: developers copied code and changed only a few lines. With inheritance, they could now reuse the original code without copying and modifying it. Rebecca Wirfs-Brock writes:

"You don't destroy the original code, you just extend it and build upon it... Inheritance makes it possible to define complex new objects without the bother of writing everything from scratch." [5]
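The contrast between copy-and-paste reuse and inheritance can be sketched as follows. This is a hypothetical Python example; the `Invoice` classes and tax rates are invented for illustration. The subclass extends the original class and redefines only the one rule that differs, instead of duplicating the whole calculation.

```python
class Invoice:
    """Hypothetical base class, written once at a higher level of abstraction."""

    def __init__(self, net_amounts: list[float]) -> None:
        self.net_amounts = net_amounts

    def net_total(self) -> float:
        return sum(self.net_amounts)

    def tax_rate(self) -> float:
        return 0.20  # illustrative standard rate

    def gross_total(self) -> float:
        # Calls tax_rate(), so subclasses can change the rate without touching this code.
        return round(self.net_total() * (1 + self.tax_rate()), 2)


class ReducedRateInvoice(Invoice):
    """Extends Invoice instead of copying it: only the differing rule is redefined."""

    def tax_rate(self) -> float:
        return 0.10  # illustrative reduced rate


print(Invoice([100.0, 50.0]).gross_total())             # 180.0
print(ReducedRateInvoice([100.0, 50.0]).gross_total())  # 165.0
```

A fix to `net_total` or `gross_total` is made once in `Invoice` and automatically applies to the subclass, which is exactly what copied code could not offer.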

Added to these productivity-enhancing features of object-oriented programming is the flexibility provided by polymorphism. The coupling of modules in procedural systems was usually very rigid: which module called which other modules was fixed statically. There was even a separate link step that bound the modules tightly together into a run unit. If the developer wanted a different combination of modules, he had to build a different run unit.

COBOL and PL/I did support dynamic calls, but they were rarely used because they were cumbersome and opaque to handle. All potentially callable modules had to be linked together so that the program could determine at runtime which module to call next.

In object-oriented languages, this is much easier: the method to be executed is selected at runtime according to the class of the object that receives the message. This makes the code much more flexible and allows more combinations. The feature is controversial, but it has its advantages: code with deeply nested case statements can be simplified by using polymorphic calls.
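A minimal, hypothetical Python sketch of how a case cascade can be replaced by polymorphic calls. The shape classes are invented for illustration; the point is that the call site no longer needs to know which kinds exist, because the class of each object determines at runtime which `area` method runs.

```python
from abc import ABC, abstractmethod


# Procedural style: the caller selects the behavior with a case cascade.
def area_procedural(kind: str, a: float, b: float = 0.0) -> float:
    if kind == "rectangle":
        return a * b
    elif kind == "triangle":
        return 0.5 * a * b
    elif kind == "square":
        return a * a
    raise ValueError(f"unknown shape: {kind}")


# Object-oriented style: each class carries its own area(); which one runs
# is decided at runtime by the class of the receiving object.
class Shape(ABC):
    @abstractmethod
    def area(self) -> float: ...


class Rectangle(Shape):
    def __init__(self, width: float, height: float) -> None:
        self.width, self.height = width, height

    def area(self) -> float:
        return self.width * self.height


class Triangle(Shape):
    def __init__(self, base: float, height: float) -> None:
        self.base, self.height = base, height

    def area(self) -> float:
        return 0.5 * self.base * self.height


class Square(Shape):
    def __init__(self, side: float) -> None:
        self.side = side

    def area(self) -> float:
        return self.side * self.side


shapes = [Rectangle(2, 3), Triangle(4, 5), Square(3)]
total = sum(s.area() for s in shapes)  # no case statement at the call site
print(total)  # 25.0
```

Adding a new shape means adding a class rather than editing every case cascade. The price is that a reader of `s.area()` must know all subclasses to know what can actually run, which is the maintenance problem discussed further below.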

Positive experiences

Has object technology actually led to an increase in productivity in development? This question cannot be answered unequivocally. There are both positive and negative reports.

On the positive side, systems such as Rank Xerox's multimedia spreadsheet for the U.S. CIA and IBM's TPF2 timesharing system would not have been possible at all without object technology. Ann Hardy of Key Logic asserts:

"The TPF2 software has been written in an object oriented version of PL/I. We could not have built the system without it."

The same applies to Apple's Lisa and Macintosh; both products would have been difficult to achieve without the new development technology. This underscores that for some types of applications there is no alternative to object technology. Object orientation is also indispensable in other application areas, such as telecommunications and multimedia. No one would think of doing it any other way, except with an even more advanced form of programming such as aspect-oriented programming.

But there are enough success stories in classic data processing as well, as far as productivity is concerned. By using C# in conjunction with the .NET Framework, an automotive supplier achieved a 200 percent increase in productivity, primarily through the use of prefabricated building blocks. Similar increases have been achieved in Java projects, for example at Sparkasseninformatik in the development of new booking systems and at Allianz Versicherung in the replacement of old collection systems.

These success stories speak for themselves. Object technology, when used correctly, can increase productivity and accelerate development. Critical voices have their say at the end of the article.

To reduce maintenance costs

Even more than increasing productivity, object technology aimed to reduce maintenance costs [6]. During the 1980s, the cost of maintaining existing systems kept growing, and by the end of the decade it accounted for more than two-thirds of total costs: maintaining and enhancing old systems cost twice as much as developing new ones.

Representatives of object technology claimed that this was predominantly a consequence of procedural programming. The programs had become too large and too complex. In addition, maintenance suffered from the poor quality of the code, which could be understood only with great difficulty. With the introduction of object orientation in design as well as in programming, everything was supposed to get better. Maintenance costs were to be cut in half [7].

Maintenance cost drivers

Software has three dimensions - size, complexity and quality. The maintainability of a software system is determined by these three dimensions. The new object technology promised to reduce the size and complexity of software systems and to increase quality. As a result, maintenance costs should decrease.

The underlying assumption that maintenance effort depends only on the characteristics of the software itself was false. Maintenance effort depends on a number of factors, including the maintenance environment, the skills of the maintenance staff, and the tools available to them. Accordingly, changes to the software may have only a limited effect on maintenance costs [8].

Let's start with size. Procedural systems were indeed too big, and so were their building blocks. The bigger the building blocks (modules and procedures), the harder they are to understand. The main cause of the excessive size of procedural systems was the "copy and paste" technique: programmers copied large sections of code from one module to another and changed only a few lines. This is how code used to be reused. The drawback was that every subsequent change had to be made in many places. Instead of creating new subroutines, developers extended the code in place, making it bigger and bigger. Through inheritance and small, reusable classes, object technology has been able to reduce the amount of code significantly, but at the cost of complexity.

Complexity is determined by the number of relationships between the code blocks. Object technology reduces the size of the individual building blocks, the procedures, but it creates more building blocks. Real OO systems consist of a multitude of small classes, each with a limited number of data items and functions. Merely managing the many source units requires considerable effort. Much more significant, however, is the large number of relationships between the building blocks. Every inheritance link and every association is a relationship and thus another dependency. The more relationships there are between a system's building blocks, the higher its complexity. Added to this is the dependence on other developers. The developer of a class that inherits from one class and uses another depends on the developers of those classes. If one of them drops out, for whatever reason, the dependent developer must take over that code. One of the authors worked on a project where pure object orientation was abandoned for this reason [9]. We can conclude that OO systems have become smaller but, because of their many relationships, more complex. Object technology drove out the devil with Beelzebub, replacing one evil with another.
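The argument that complexity grows with the number of relationships can be illustrated with a simple count. The two designs below are hypothetical and deliberately small, and the metric merely adds up inheritance links and associations per building block; it is far cruder than established coupling metrics and says nothing about how large each procedural module is.

```python
# Hypothetical designs: for each building block, the blocks it inherits from
# ("inherits") and the blocks it uses ("uses").
oo_design = {
    "Order":     {"inherits": ["Document"], "uses": ["Customer", "OrderLine", "Pricing"]},
    "OrderLine": {"inherits": [],           "uses": ["Article", "Pricing"]},
    "Customer":  {"inherits": ["Party"],    "uses": ["Address"]},
    "Pricing":   {"inherits": [],           "uses": ["Article", "Discount"]},
    "Party":     {"inherits": [],           "uses": ["Address"]},
    "Document":  {"inherits": [],           "uses": []},
    "Article":   {"inherits": [],           "uses": []},
    "Address":   {"inherits": [],           "uses": []},
    "Discount":  {"inherits": [],           "uses": []},
}

procedural_design = {
    "ORDPROC":  {"inherits": [], "uses": ["CUSTMOD", "PRICEMOD"]},
    "CUSTMOD":  {"inherits": [], "uses": []},
    "PRICEMOD": {"inherits": [], "uses": []},
}


def size_and_relationships(design: dict[str, dict[str, list[str]]]) -> tuple[int, int]:
    """Return (number of building blocks, number of relationships between them)."""
    relationships = sum(len(d["inherits"]) + len(d["uses"]) for d in design.values())
    return len(design), relationships


for name, design in (("OO", oo_design), ("procedural", procedural_design)):
    blocks, rels = size_and_relationships(design)
    print(f"{name}: {blocks} building blocks, {rels} relationships")
```

In this invented example, the OO design has 9 building blocks with 11 relationships, while the procedural design has 3 larger modules with 2 calls between them.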

As far as quality is concerned, an improvement is hard to prove. The many GoTo branches have disappeared, but this is not clearly to the credit of object technology; structured programming achieved that. The cohesion of the modules, that is, the interconnection of their internal functions, increased, but so did their coupling, that is, the dependence of internal functions on external ones. One can claim that the modules have become smaller, but the number of dependencies has increased.

There are also high-quality procedural systems. Quality is therefore not necessarily tied to the programming technique; it follows from the correct use of the respective technique, whether structured or object-oriented. Nothing is worse than object technology misused because it was not properly understood.

Studies on the maintainability of object-oriented software

The claim by proponents of object technology that it would reduce maintenance costs by 30 to 50 percent has never been borne out, although many researchers have tried to confirm it [10].

One of the first studies was conducted back in 1993 by Professor Norman Wilde of the University of West Florida, using a C++ system from Bellcore [11]. His team analyzed and measured the source code with tools on the one hand and interviewed the responsible maintenance staff on the other.

It turned out that some of the much-praised features of OO programming had negative consequences for maintenance, especially inheritance beyond a certain depth [12]. Another problem arose from the large number of interactions between methods in different classes. A third problem was caused by dynamic binding through polymorphism: it makes the code more flexible, but also harder to understand. The average developer cannot cope with this and, when changing the code, makes wrong decisions that lead to errors that are difficult to find.

Last but not least, Bellcore had to correct more errors in the new object-oriented systems than it had in the old procedural systems. On top of that, system performance dropped: the runtimes of the new C++ systems were 45 percent longer than those of the old C systems. The study concluded that object orientation has some advantages, but equally serious disadvantages. Decisive for the maintainability of OO programs were the depth of the class hierarchy, the number of associations, and the use of polymorphic binding. In other words, the more object-oriented the code, the higher the maintenance effort.
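Depth of inheritance, one of the factors named above, is easy to measure. The Python sketch below computes it as the longest path from a class up to the root of its hierarchy. The class names and the threshold `MAX_DEPTH = 3` are hypothetical: the study is described here only as finding problems "beyond a certain depth," without a figure.

```python
def inheritance_depth(cls: type) -> int:
    """Length of the longest path from cls up to a class whose only base is object."""
    parents = [base for base in cls.__bases__ if base is not object]
    if not parents:
        return 0
    return 1 + max(inheritance_depth(p) for p in parents)


# Hypothetical hierarchy that grows one level with every new requirement.
class Contract: ...
class InsuranceContract(Contract): ...
class LifeInsuranceContract(InsuranceContract): ...
class TermLifeContract(LifeInsuranceContract): ...
class GroupTermLifeContract(TermLifeContract): ...


MAX_DEPTH = 3  # hypothetical project guideline, not a value from the study

for c in (Contract, LifeInsuranceContract, GroupTermLifeContract):
    depth = inheritance_depth(c)
    warning = " (exceeds guideline)" if depth > MAX_DEPTH else ""
    print(f"{c.__name__}: depth {depth}{warning}")
```

To understand `GroupTermLifeContract`, a maintainer has to read four ancestor classes, each of which may override behavior defined above it.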

Another study on maintainability took place at the University of Wisconsin in 2007, this time comparing object-oriented Smalltalk programs with COBOL programs. A team of researchers under Professor Michael Eierman investigated whether object-oriented systems are superior with respect to maintainability [13]. For the purpose of comparison, they defined maintenance as a collective term for error corrections, functional enhancements, changes, and refactoring. Maintainability was defined as minimizing the effort required to carry out these activities.

The researchers started from the claim that the characteristics of object-oriented software reduce maintenance effort more than those of procedurally developed software, and formulated five hypotheses:

- It is easier to understand OO systems.

- It is easier to schedule OO maintenance interventions.

- It is faster to build up know-how about OO systems.

- Fault diagnosis is easier with OO systems.

- The scope of changes to OO systems is smaller.

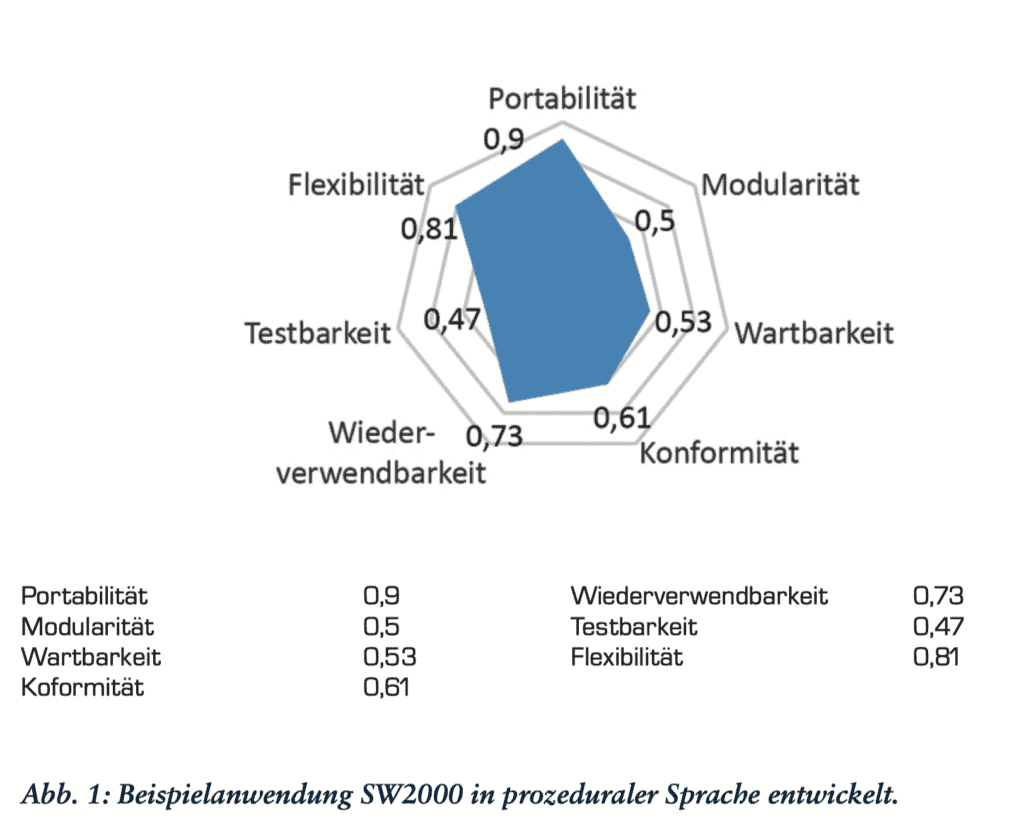

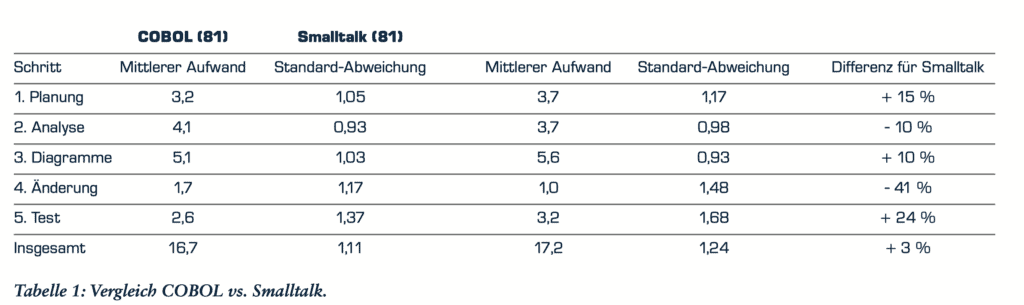

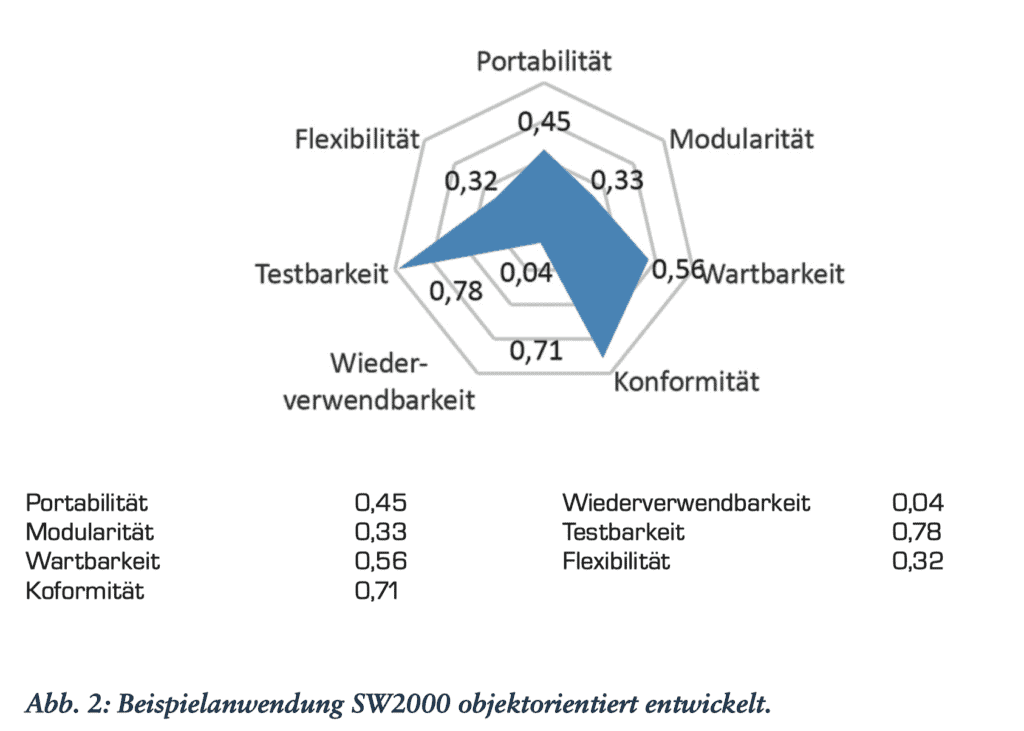

To test these assumptions, Professor Eierman involved 162 advanced undergraduates in his experiment. The students had learned both COBOL and Smalltalk. They were asked to correct and modify either a COBOL or a Smalltalk application: first to fix a bug, and second to add an additional calculation to the code. Of these students, 81 chose Smalltalk and 81 chose COBOL. The results are summarized in Table 1.

Professor Eierman's team found no significant difference between the maintainability of the procedural and the object-oriented solutions: what was gained in one activity was lost in the other. In the end, maintaining the Smalltalk solution actually cost 3 percent more. Eierman and Dishaw conclude that OO software is not easier to maintain, at least not according to the results of their study.

The author of this article himself compared the maintainability of COBOL and Java code at the Hagenberg University of Applied Sciences. Students were given the same order-processing application in both COBOL and Java and were asked to correct an error and make a change in both versions.

In all teams, the error correction was completed faster in the COBOL code; no team needed more than one hour. The change, on the other hand, was faster in the Java code: creating an additional subclass in Java was easier than writing a new section in the COBOL code. No team needed more than two hours for the Java extension, while the COBOL change took significantly longer.

This may be because the students were more familiar with Java. But it also shows how difficult such comparisons are: the subjects of such studies will always be more familiar with one technology than with the other. Maintainability is hard to measure because maintenance involves many different activities: error correction, modification, enhancement, optimization, and remediation. What is easy in one programming language is hard in another. In the end, we can only conclude that statements about maintainability are unverifiable. Object orientation may well reduce maintenance costs in the long run, but this is very difficult to prove.

Testability of object-oriented software

Much of the cost of maintenance is due to testing, and the extra effort required to test object-oriented systems is indisputable. Robert Binder, an internationally recognized testing expert and author of several books on testing, claims that OO software is not only more difficult to test but also causes more errors [14]. Boris Beizer says that testing OO software costs four times as much as testing earlier procedural software, and James Martin, the guru of the structured world, saw a huge wave of testing effort coming with the introduction of object technology [15].

First, heavy modularization leads to more intermodular dependencies. Methods in the class under test use methods in other classes, and these in turn use further methods in even more distant classes. One of the authors of this article tried desperately to simulate all external references of C++ classes in order to test them. In the end, he had to give up because there was no way to code so many stubs. The same problem arises with JUnit: to test one class, the tester has to include all the other affected classes in the test or simulate them by "class flattening." Either way, the tester has more work than if the test object could be tested on its own.
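The stub problem can be sketched with Python's standard `unittest.mock` module, which takes the place of hand-written stubs here. The classes `TaxService` and `PriceCalculator` are hypothetical. Even in this tiny case, the class under test cannot run until its collaborator is replaced; in a real system, every collaborator of every collaborator may need the same treatment.

```python
from unittest import mock


class TaxService:
    """Hypothetical collaborator; a real one might query a database or other classes."""

    def rate_for(self, country: str) -> float:
        raise NotImplementedError("requires access to the tax database")


class PriceCalculator:
    """Class under test: it cannot do its work without a TaxService."""

    def __init__(self, tax_service: TaxService) -> None:
        self._tax = tax_service

    def gross(self, net: float, country: str) -> float:
        return round(net * (1 + self._tax.rate_for(country)), 2)


def test_gross_uses_country_rate() -> None:
    # Replace the real collaborator with a stub that returns a fixed answer.
    stub = mock.Mock(spec=TaxService)
    stub.rate_for.return_value = 0.19
    calc = PriceCalculator(stub)

    assert calc.gross(100.0, "DE") == 119.0
    stub.rate_for.assert_called_once_with("DE")


test_gross_uses_country_rate()
```

Using `spec=TaxService` makes the stub reject method names the real class does not have, so the stub cannot silently drift away from the interface it replaces.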

Second, data-centered classes are given methods that are procedurally independent of each other but intertwined through shared data attributes. One method can thus leave an object state that affects the behavior of the methods called after it. The input domain of a method includes not only the parameters passed in from outside but also the internal attributes of the encapsulated object. Their state affects the test and must be set up by the tester. It is important to test not only all branches in all methods but also all relevant object states.

Third, when a class intended for repeated use is developed, it is not yet known for what purposes its methods will be used. The class has to be designed to serve any potential purpose, and such openness forces extensive testing to cover all possible uses. Reuse comes at a price.
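The second point above, that a method's input includes the object's state, can be illustrated with a hypothetical Python `Order` class. The method `add_item` contains only one decision, yet a test must first put the object into each relevant state, because the same call with the same argument is accepted in one state and rejected in others.

```python
class Order:
    """Hypothetical class whose methods communicate through the attribute `state`."""

    def __init__(self) -> None:
        self.state = "open"
        self.items: list[str] = []

    def add_item(self, item: str) -> None:
        if self.state != "open":  # the only decision in this method
            raise RuntimeError(f"cannot add items to an order in state {self.state!r}")
        self.items.append(item)

    def submit(self) -> None:
        if not self.items:
            raise RuntimeError("cannot submit an empty order")
        self.state = "submitted"

    def cancel(self) -> None:
        self.state = "cancelled"


def order_in_state(state: str) -> Order:
    """Test setup: drive a fresh order into the requested state via its own methods."""
    order = Order()
    if state == "submitted":
        order.add_item("A")
        order.submit()
    elif state == "cancelled":
        order.cancel()
    return order


# The same call with the same argument, tested once per relevant object state.
results = {}
for state in ("open", "submitted", "cancelled"):
    order = order_in_state(state)
    try:
        order.add_item("B")
        results[state] = "accepted"
    except RuntimeError:
        results[state] = "rejected"

print(results)  # {'open': 'accepted', 'submitted': 'rejected', 'cancelled': 'rejected'}
```

Branch coverage of `add_item` would already be reached with two of these cases; covering all three states is what the state-based view adds.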

As early as 1996, Capers Jones studied 600 object-oriented projects in 150 user companies and came to the following conclusions [16]:

- The number of errors from incorrect use of object technology was strikingly high.

- Errors in analysis and architecture have a much greater impact than errors made with procedural analysis and design methods.

- It took more than twice as much effort to uncover the causes of the errors.

- The error density is higher because the code is more compact.

- Only where more than 50 percent of the code is reused does the error rate decrease.

It was to be expected that object technology would drive up the testing effort. The object-oriented code has many more dependencies and many more uses. To test them all, more effort must be expended. The answer followed in the form of test automation. To get a handle on the testing problem, users had no choice but to automate testing. This can also be interpreted positively. The high effort to test object-oriented software made test automation inevitable.

To increase reusability

In the matter of reusing existing software, object technology has undeniable advantages. It has made it possible to build universally applicable class libraries that can be passed from project to project. There are several reports of projects where more than 50 percent of the estimated effort was saved by reusing code from previous projects. However, it is questionable whether the same savings could not have been achieved with procedural software. Long before the introduction of object technology, Capers Jones in the U.S. and Albert Endres in Germany reported success in reusing code modules. General-purpose subroutines, macros, and include or copy members were already being used in the 1970s to save code. Endres also describes other reuse techniques that go beyond code, such as design patterns, application frameworks, and standard interfaces [17].

It would therefore be wrong to claim that reuse is an achievement of object orientation. There is also process and function reuse. Only inheritance directly promotes the reuse of higher-level classes, and inheritance is controversial among experts.

As far as reuse is concerned, object orientation has contributed only to a limited extent to increasing it. The concept of abstract data types has certainly contributed more; whether abstract data types should be equated with object orientation is an open question. In conclusion, object orientation drives reuse but is not a sine qua non for it. Product line management shows that non-object-oriented software can be reused as well.

To improve portability

No one would deny that object orientation has led to better portability, at least as far as Java is concerned. The encapsulated objects, with their standardized interfaces, can easily be moved from one environment to another. Components cannot be moved without compiling them first, but the compiled bytecode can be transferred as is. In any case, portability is much better than with classical procedural languages, whose modules must be recompiled and relinked for each environment [18].

Today, software relocatability is a basic requirement for moving local applications to the cloud. The strict division of code into classes, methods, and interfaces makes it possible to reuse local classes as global web services. This is not readily possible with procedural languages such as COBOL, C, and PL/I. One of the authors has spent years encapsulating such procedural programs for reuse as services, which requires creating completely new interfaces. This is much easier with object-oriented code.
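What encapsulating a procedural program for reuse as a service involves can be sketched in miniature. Everything below is hypothetical: `legacy_calc_premium` stands in for a procedural routine that communicates through positional parameters, an output area, and a return code, and `PremiumService` is the new interface built around it, including a JSON request handler of the kind a web-service endpoint might expose. No particular web framework is assumed.

```python
import json


def legacy_calc_premium(age, sum_insured, result):
    """Stand-in for a procedural routine: positional inputs, output area, return code."""
    if age < 18 or sum_insured <= 0:
        return 8  # hypothetical error code
    result[0] = round(sum_insured * (0.002 + age * 0.0001), 2)  # invented tariff
    return 0


class PremiumService:
    """New, explicit interface wrapped around the procedural routine."""

    def quote(self, age: int, sum_insured: float) -> float:
        out = [0.0]  # plays the role of the routine's output area
        rc = legacy_calc_premium(age, sum_insured, out)
        if rc != 0:
            raise ValueError(f"legacy routine returned code {rc}")
        return out[0]

    def handle_request(self, body: str) -> str:
        """JSON in, JSON out: the kind of contract a web-service endpoint could offer."""
        request = json.loads(body)
        premium = self.quote(request["age"], request["sumInsured"])
        return json.dumps({"premium": premium})


service = PremiumService()
print(service.handle_request('{"age": 40, "sumInsured": 100000}'))
```

The wrapper has to translate every convention of the old routine (return codes, output areas, positional fields) into the new interface; with a class that already has a clean method interface, most of this layer would be unnecessary.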

Critical voices

But there are also critical voices about object orientation. Victor Basili, professor of computer science at the University of Maryland, asks whether

"Object-oriented is the right way to think in all domains. It is obviously good for some, but we still don't have enough empirical evidence to support the claim that it is good for all ..."

Erik Stensrud, a professor at the Norwegian School of Management, conducted an experiment comparing productivity in object-oriented projects with that in procedural projects. The result was inconclusive: in the end, productivity was about the same, as the disadvantages of object orientation offset its advantages [19].

Professor Thomas Niemann of Portland Community College claims that overusing all the features of object technology can be detrimental to productivity. He says that "excessive information hiding can be detrimental" and that inheritance and polymorphism make testing more difficult. If too much information is hidden, someone unfamiliar with the code cannot correct it. Niemann concludes that productivity is most likely to be achieved with an "OO lite" approach [20].

Professor Manfred Broy is also critical of object technology. In an article for Informatik-Spektrum, written together with Johannes Siedersleben, he states:

"We argue that while the object orientation in use today has a number of interesting, advantageous features, there are also some serious shortcomings that show that object orientation is not up to date with scientifically understood programming methodology and software engineering." [21]

Furthermore, the authors claim: "Object orientation, despite its popularity and widespread use, by no means solves all old problems, and some new ones it has only created." Particular headaches are caused by inheritance and by the use of object references in polymorphism, which are nothing more than disguised pointers. In the design language UML, they also criticize:

- the lack of clear semantics

- the lack of integration of the different description techniques

- the missing component concept.

What Broy and Siedersleben criticize most are not so much the OO concepts themselves, but the implementation of these concepts in concrete languages such as C++, Java, and especially UML.
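The remark that object references are little more than disguised pointers can be illustrated with aliasing. In this hypothetical Python sketch, two objects hold a reference to the same `Address` object, so a change made through one is visible through the other, just as with shared pointers in C.

```python
import copy


class Address:
    def __init__(self, city: str) -> None:
        self.city = city


class Customer:
    def __init__(self, name: str, address: Address) -> None:
        self.name = name
        self.address = address  # stores a reference to the object, not a copy


shared = Address("Vienna")
alice = Customer("Alice", shared)
bob = Customer("Bob", shared)

bob.address.city = "Graz"            # a change made through bob ...
print(alice.address.city)            # ... is visible through alice too: Graz
print(alice.address is bob.address)  # True: both refer to the same object

# Defensive copying avoids the aliasing, at the cost of extra code and memory.
carol = Customer("Carol", copy.copy(shared))
carol.address.city = "Linz"
print(shared.city)  # still Graz
```

Nothing in the `Customer` class signals whether its address is shared or private; the reader has to trace every place where references are handed over.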

The lack of a component concept makes it difficult to design a complex architecture. Classes are far too small to serve as the highest-level abstraction element. The authors state:

"One of the worst shortcomings of object orientation is the absence of components to complement the class. Classes are simply too small, too fine-granular. They are units of implementation, not construction. Large systems can hardly be structured with them."
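Mainstream OO languages offer no dedicated component construct, so components have to be approximated by convention. The sketch below shows one hypothetical way of doing this in Python: a `Protocol` describes the provided interface, the fine-grained classes are marked internal with a leading underscore, and a factory function is the only intended entry point. None of this is enforced by the language, which is essentially the gap described in the quotation.

```python
from typing import Protocol


class OrderingComponent(Protocol):
    """Provided interface of a hypothetical 'ordering' component."""

    def place_order(self, customer_id: str, article_ids: list[str]) -> str: ...


class _Catalog:
    """Internal class: not meant to be used outside the component."""

    def __init__(self) -> None:
        self._prices = {"A1": 10.0, "B2": 25.0}  # invented sample data

    def price(self, article_id: str) -> float:
        return self._prices[article_id]


class _OrderRepository:
    """Internal class: keeps placed orders in memory."""

    def __init__(self) -> None:
        self._orders: dict[str, tuple[str, float]] = {}

    def save(self, customer_id: str, total: float) -> str:
        order_id = f"ORD-{len(self._orders) + 1}"
        self._orders[order_id] = (customer_id, total)
        return order_id


class _OrderingImpl:
    """Wires the internal classes together behind the component's interface."""

    def __init__(self) -> None:
        self._catalog = _Catalog()
        self._repository = _OrderRepository()

    def place_order(self, customer_id: str, article_ids: list[str]) -> str:
        total = sum(self._catalog.price(a) for a in article_ids)
        return self._repository.save(customer_id, total)


def create_ordering_component() -> OrderingComponent:
    """The only intended entry point; clients never see the classes above."""
    return _OrderingImpl()


ordering = create_ordering_component()
print(ordering.place_order("C-7", ["A1", "B2"]))  # ORD-1
```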

Broy and Siedersleben do not cite any empirical studies to support their critique of object technology, but their line of argument suggests that object orientation brings no net change in productivity: the positive, productivity-increasing influences are balanced by the negative, productivity-inhibiting characteristics. In the end, the result is plus/minus zero.

One of the most interesting studies on object technology and productivity was published in 1994 in the Communications of the ACM under the title "Requirements Specification: Learning Object, Process and Data Methodologies." The authors are Iris Vessey and Sally Conger [22]. The two authors, a professor and a consultant, investigated how well average developers cope with object orientation. Does it really match the natural way of thinking? Compared with the other development methods, the OO method was the most difficult for the participants to learn. So this approach does not correspond to the natural way of thinking at all [23].

From the productivity discussion it can be concluded that object orientation does not necessarily lead to higher productivity. It depends on who is involved in the project and how high the degree of reuse is. If experienced developers with a sound knowledge of the languages used, for example UML and Java, are at work and if more than 50 percent of the code is reused, a significant increase in productivity can be expected compared to procedural development.

However, if the project participants have little OO experience and the reuse rate is low, a loss of productivity is more likely. Inexperienced developers combined with a high reuse rate suggest unchanged productivity; experienced developers combined with a low reuse rate suggest a small increase in productivity of 1 to 50 percent. Thus, the question of the influence of object technology cannot be answered unequivocally.

Back to the question of the benefits of object technology

In the end, when we ask ourselves what the object-oriented movement has brought to the software world, we have to scale back some of the original claims.

Has object orientation increased productivity?

In some cases, software development effort dropped, especially where a high reuse rate was achieved and experienced OO developers were at work. Where little reuse was achieved and inexperienced staff were employed, productivity fell. Applying object orientation properly places high demands on developers; if they are not up to it, their productivity suffers.

Compared to some fourth-generation languages (4GLs), Java and C++ perform poorly. Only C# in the context of .NET can keep up to some extent. When investigating productivity in a large Austrian industrial company, one of the authors found that

- productivity using a 4GL language for a system with over 32,000 function points was two function points per person day, while

- productivity for a Java application with over 12,000 function points was only 1.1 function points per person day.
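The two productivity figures can be turned into effort figures with simple arithmetic. Treating the quoted minimum sizes ("over 32,000" and "over 12,000" function points) as exact values, and writing $E$ for effort in person days (PD) and $P$ for productivity in function points (FP) per person day:

$$
\begin{aligned}
E_{\text{4GL}} &= \frac{32\,000\ \text{FP}}{2\ \text{FP/PD}} = 16\,000\ \text{PD} \\
E_{\text{Java}} &= \frac{12\,000\ \text{FP}}{1.1\ \text{FP/PD}} \approx 10\,909\ \text{PD} \\
\frac{P_{\text{4GL}}}{P_{\text{Java}}} &= \frac{2}{1.1} \approx 1.82
\end{aligned}
$$

The 4GL team thus delivered roughly 1.8 times as many function points per person day. At the 4GL rate, the Java system's 12,000 function points would have taken about 6,000 person days, assuming that productivity does not depend on system size.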

So increasing productivity is not exactly a strong point of object technology, especially when inexperienced people are at work.

Has object orientation reduced maintenance costs?

There is no empirical evidence for this. The few studies that do exist point to no net effect on maintenance. In some cases, software maintainability has improved, especially where the amount of code has been significantly reduced and many standard building blocks have been used.

In other cases, the complexity of the software has grown to the point that maintenance staff can no longer cope with it, and maintenance costs have risen. Complex OO systems in particular got out of control without regular refactoring. Procedural systems could still be kept alive somehow, if not well; this is not the case with object orientation.

Has object orientation encouraged reuse?

The answer to this question is conditionally yes. Object-oriented components are usually easier to detach from their environment and easier to encapsulate. This conclusion can be derived from the experience of one of the authors with reuse of classes as web services. On the other hand, there is too little real scientific evidence to support this claim. Software has been reused to a large extent even before object orientation. It is difficult to say how far object orientation has facilitated reuse. At best, one can claim: There is much to be said for it.

Has object orientation improved software portability?

This claim holds true more than any other. Because the code has standardized interfaces, it can be moved into foreign environments and made to work with little customization; developers do not have to rewrite it. This is a key advantage in today's world of distributed processing, and the concept of distributed objects fits well with today's diverse digital world. With portability, object technology has set an important course.

Conclusion

In summary, it can be said that object orientation has by no means achieved everything that it originally promised - especially in terms of productivity and ease of maintenance. Instead, it has had other positive effects on software development. It promotes reuse and frees software from dependence on a proprietary environment. Object orientation has opened up the world of software - this alone has justified it.

Co-author: Harry Sneed. The article was published in the 01/2017 issue of OBJEKTspektrum.

References

1. G. Booch, Object-oriented Analysis and Design, Addison-Wesley, 1994
2. T. Love, Company Presentation, Productivity Products International, Sandy Hook, Conn., 1987
3. J. Verity, The OOPS Revolution, in: Datamation, May 1987, p. 73
4. D. Thomas, A Survey of Object-Oriented Programming Systems, in: Proc. of ACM OOPSLA Conference Tutorial, Carleton University, October 1987
5. R. Wirfs-Brock, Object-oriented Frameworks, in: American Programmer, Vol. 4, No. 10, Oct. 1991, p. 21
6. B. Meyer, Object-oriented Software Construction, Prentice-Hall International, 1988
7. B. Stroustrup, What is Object-oriented Programming?, in: IEEE Software, May 1988, p. 10
8. H. Sneed, Software Maintenance, Rudolf Müller Verlag, 1988
9. H. Sneed, M. Hasitschka, M. T. Teichmann, Software-Produktmanagement, dpunkt.verlag, 2004
10. I. Jacobson et al., Object-oriented Software Engineering, Addison-Wesley, 1994, p. 42
11. N. Wilde, P. Matthews, R. Huitt, Maintaining Object-oriented Software, in: IEEE Software, Jan. 1993, p. 75
12. I. Ryant, Why Inheritance Means Extra Trouble, in: Communications of the ACM, Vol. 40, No. 10, Oct. 1997, p. 118
13. M. Eierman, M. Dishaw, Comparison of object-oriented and third generation development languages, in: Journal of Software Maintenance and Evolution, Vol. 19, No. 1, Jan. 2007, p. 33
14. R. Binder, Testing Object-Oriented Systems, Addison-Wesley, 1999, p. 69
15. M. Winter, H. Sneed, Testen objektorientierter Software, Hanser Verlag, 2001, p. 21
16. C. Jones, Software Quality in 1996 - What works and what doesn't, in: Economics of Software Quality, John Wiley & Sons, 2011
17. A. Endres, Software Reuse - Goals, Ways and Experiences, in: Informatik-Spektrum, Vol. 11, Issue 2, 1988
18. E. Yourdon, Java and Java Tools, in: American Programmer, Vol. 10, No. 1, Jan. 1997, p. 2
19. G. Goth, Has Object-oriented Programming Delivered?, in: IEEE Software, Sept. 2002, p. 104
20. T. Niemann, Nuts to OOP!, see: http://www.embedded.com/design/prototyping-and-development/4216597/Nuts-to-OOP
21. M. Broy, J. Siedersleben, Object-Oriented Programming and Software Development - A Critical Assessment, in: Informatik-Spektrum, Vol. 25, No. 1, Feb. 2002
22. I. Vessey, S. Conger, Requirements Specification: Learning Object, Process and Data Methodologies, in: Communications of the ACM, Vol. 37, No. 5, 1994, p. 102
23. R. Glass, The Naturalness of Object Orientation - Beating a Dead Horse?, in: IEEE Software, May 2002, p. 104